UX Roundup: Early Facebook UX | Hybrid UI for Generative UI | Eyetracking | Slack UX | Small-Language GPT | AI UX Courses | Jakob Live | Better AI Songs

Summary: Julie Zhuo recalls working on Facebook’s early user interface designs | Tools for generative UI need a hybrid UI | Early eyetracking research | Slack won by user-centered design | It may cost much more to use GPT in small languages | 4 recommended courses on AI for UX | Live webinar with Jakob hosted by the UX Design Institute | Suno release 3 improves the quality of AI-generated songs

UX Roundup for March 22, 2024. (Midjourney)

Lessons from Facebook’s Early User Interface Design

ADPList hosted a great fireside chat with Julie Zhuo from Sundial. The full recording is now available on YouTube (70 min. video).

Zhuo was previously VP of design at Facebook. When she joined Facebook in 2006, she became part of a startup that was constantly reshaping itself. With only 100 people, the environment was chaotic, thrilling, and lacked the structure you’d expect in a conventional company. This period was marked by a profound sense of freedom and innovation, where everybody was committed to coding and bringing a big dream to life. The lack of systems and rules, while sometimes problematic, fostered an environment of unbridled creativity and learning.

As Facebook grew, so did the complexity and scale of its operations. This transformation brought about a sense of loss for the simplicity of the early days but also opened up new opportunities for personal and professional growth. Zhuo transitioned from coding to leading and managing teams, setting design standards, and overseeing product iterations, many of which failed but provided invaluable lessons. Looking back on these years, it’s clear that every phase of growth at Facebook taught her more about herself and how to navigate change and uncertainty.

Initially, the far-reaching impact of designing major Facebook features like the News Feed or the Like button was beyond Zhuo’s imagination. Her role in these innovations felt ordinary within the company’s ecosystem, surrounded by peers who shared similar skills and dreams. However, witnessing these features influence billions of lives worldwide was a humbling experience that reshaped her understanding of the significance of UX design. This realization was both surprising and enlightening, underscoring the power of collaboration and timing in the tech industry.

Reflecting on the acquisition of Instagram and other milestones, Zhuo came to appreciate the unpredictable nature of innovation and its capacity to transform not just the company, but the entire digital landscape. Her time at Facebook was marked by constant learning, driven by the realization that UX efforts could have unintended yet profound effects on global communication and relationships.

Since Facebook had no predefined rules, leadership meant fostering a culture of innovation, encouraging experimentation, and embracing failures as stepping stones to success. Zhuo’s management philosophy evolved to focus on empowering team members, facilitating open communication, and nurturing a shared belief in the mission. This approach not only propelled projects forward but also cultivated a sense of ownership and camaraderie among the team.

The most significant lessons in management came from confronting the challenges of simultaneously scaling products and teams. Developing design standards, guiding product iterations, and leading a team through Facebook’s rapid expansion taught her the importance of adaptability, clear communication, and setting a vision that resonates with the team. Reflecting on these experiences, Zhuo recognized that effective management is less about dictating actions and more about guiding and supporting your team toward a common goal.

There are many more exciting nuggets in the full session, but that segment about Zhuo’s early Facebook experience should be a lesson to us all. Randomness and unpredictability are part of any groundbreaking UX work, all the more so now that we’re designing with AI, which is inherently nondeterministic. Control what you can control, and design systems to cope with any outcome of the many issues you can’t control.

Albert Einstein famously said in 1926 that “God doesn’t play dice with the universe” when he rejected theories of quantum mechanics that rely on randomness. These theories are now mainstream physics. Leaving aside arguments about fundamental physics, UX must embrace and celebrate unpredictability. In your career, build on your good luck and move on from any bad luck rather than dwelling on the supposed unfairness of life. In design, AI throws us another rolling pair of dice, which is exactly why it’s ideal for UX ideation. (Midjourney)

Hybrid UI for Generative UI

In a discussion about the new Galileo AI for Generative UI, Markus Paulini questioned whether it’s actually faster to design a UI by editing prompts to tweak the resulting UI until it matches your expectations, or to work with pre-designed templates the old-school way.

While iteration is core to the use of AI, I don’t think you should re-edit the prompt many times to finetune the prototype. Just do a few rounds with the AI tool, then export to Figma and do the finetuning there. Figma export is indeed one of the much-touted features of Galileo.

Imagine doing carpentry without access to the wood: all you can do is to specify in prose what the result should look like. UI design is much more delicate than carpentry, so it absolutely needs direct manipulation to supplement prose-based prompting. (Midjourney)

Moving the design from one tool to another through a one-way export is obviously a short-term fix. The long-term solution is for Galileo AI to get a hybrid UI that combines some prompting and some direct manipulation (and other GUI techniques). You absolutely don’t want to use natural language for specifying every last detail of the UI. The articulation barrier makes this much too cumbersome.

A hybrid UI for AI is my silver wish for AI. (The gold wish is proper UX methodology in the development of the major AI platforms.)

Jakob Nielsen’s silver medal for UX improvements to AI will be awarded to good implementations of hybrid UI that combine prompting with GUI control. (The gold medal is for good use of the UX design process to improve AI usability.) (Midjourney)

Early Eyetracking Research

Fascinating article reviewing early eyetracking research, going back to 1925 (99 years ago) for the most primitive study, which was conducted by simply having an observer watch the participant’s eye and note every half second whether he or she looked to the left or the right. This non-computerized approach allowed Dr. Howard K. Nixon to estimate that advertisements with images of people attracted 26% more attention than advertisements featuring images of objects.

Of course, with a split-screen approach, you can only perform very coarse eyetracking: did the person look at the left or the right image first?

Only 12 years later, more elaborate research became possible, using a camera instead of another human to observe the user’s eye. Herman F. Brandt applied for a patent on a “camera for recording eye movements” on October 4, 1937. Much earlier than my eyetracking patents! (E.g., US6734845, filed September 1997.)

(Hat tip to Carl Zetie for alerting me to that article.)

Eyetracking has a surprisingly rich history. (Midjourney)

Slack Won Through User-Centered Design

The enterprise communication platform Slack is now 10 years old, and The Verge has an interesting retrospective about the history of this group-chat product. The article points out that there were many competitors in the enterprise chat space, from Skype Chat and Gchat to Yammer.

Why did Slack win? A major reason was that the company embraced user-centered design from the beginning. Ali Rayl was the head of customer experience and took part in the major decisions.

The Slack team tested the main competing products to see what they did poorly, which is a great use of competitive usability research. One finding was that these products failed to keep a user’s place when he or she switched between mobile and desktop. So Slack added this feature and became much more usable as a result, which helped them win.

The specific feature is less interesting, because it’s a simple example of seamless omnichannel design, which is now commonplace. (For example, after watching the first half of a show on Netflix on your TV, you can continue on your mobile the next day, and it’ll play from the spot you paused on the TV.) While seamless transitions are a well-known guideline today, they were less common 10 years ago. My two points are that Slack thought of this useful feature after a competitive study and that Slack prioritized designing for usability. That’s why they won.

I don’t have access to screenshots of enterprise chat services from 10 years ago, so I made some new ones with Galileo AI, which is a tool for AI-generated UI design. If we move from a desktop chat (left) to the mobile app (right), we should not be dumped back at the home screen with miscellaneous feel-good chat. We should stay in the project-specific chat we were viewing on the desktop. Slack noticed this problem in competitive testing and fixed it. (Don’t blame Galileo for the bad usability of these two screenshots. I deliberately prompted it to create designs that I could use for this example.)

GPT 42% More Expensive in Small Languages

We all know that ChatGPT and most other large language models work better in English and Chinese than in smaller languages, simply because of the immensely larger volume of training data in the two big languages.

This is already a disadvantage for users and companies in countries that use other languages that are under-represented in the training data. Many efforts like Mistral with French and Falcon with Arabic aim to correct this situation. But French and Arabic are also fairly big languages, so what about truly small languages like my native Danish (spoken by only 6 million people)?

Efforts are underway to improve AI performance with small languages. But for now, people are stuck with ChatGPT and the like, if they want good underlying AI.

Jakob Styrup Brodersen conducted an interesting experiment comparing the use of GPT in English and Danish. He discovered that the OpenAI tokenizer requires 42% more tokens to convert Danish text into AI-speak compared to the tokens needed for the equivalent English text.

Tokenization is the method used by large language models to break a prompt or document into smaller pieces (“tokens”) that can be processed by the computer. (DALL·E)

AI tokenization may seem a very geeky and esoteric concept. However, it has two practical implications:

ChatGPT Plus (the paid version available to us peons, as opposed to the enterprise model) has a token window of only 8,000 tokens. As a conversation extends beyond this limit, GPT simply forgets the initial information: it only remembers the last 8,000 tokens, which is all it relies on to formulate subsequent responses to the user’s prompts. Because Danish text needs 1.42 times as many tokens, the same window holds only about 5,600 tokens’ worth of English-equivalent content, so Danish users have roughly 30% less GPT context available when working with AI, compared to their English-speaking colleagues. This in turn means that English-speaking businesses have a substantial competitive advantage in terms of the quality of AI-assisted knowledge work.

While ChatGPT itself charges a flat monthly fee, the use of GPT-4 through an API (as done by most other AI tools) is charged on a per-token basis. This means that speakers of a small language like Danish will pay OpenAI 42% more money for the same service, compared to English-speaking businesses. Again, a major competitive advantage for English-speaking companies.
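Both implications are simple arithmetic on Brodersen’s 42% figure. The Python sketch below works them through. The 1.42 token ratio and the 8,000-token window come from the text above; the price of $0.03 per 1,000 tokens and the 100,000-token workload are hypothetical values chosen only for illustration, not quoted OpenAI rates.

```python
# Sketch of the token-overhead arithmetic for Danish vs. English.
# From the text: 1.42 token ratio and 8,000-token context window.
# Hypothetical: the per-1,000-token price and the workload size.

DANISH_TOKEN_RATIO = 1.42   # Danish needs 42% more tokens for the same content
CONTEXT_WINDOW = 8_000      # tokens the model can keep in view at once


def english_equivalent_window(window: int, ratio: float) -> float:
    """Amount of English-equivalent content that fits in the window."""
    return window / ratio


def context_loss(ratio: float) -> float:
    """Share of usable context lost compared with English."""
    return 1 - 1 / ratio


def api_cost(english_tokens: int, ratio: float, price_per_1k: float) -> float:
    """Per-token billing: the same content costs `ratio` times as much."""
    return english_tokens * ratio / 1_000 * price_per_1k


if __name__ == "__main__":
    PRICE_PER_1K = 0.03   # hypothetical price, not an official rate
    WORKLOAD = 100_000    # hypothetical workload, measured in English tokens

    window = english_equivalent_window(CONTEXT_WINDOW, DANISH_TOKEN_RATIO)
    loss = context_loss(DANISH_TOKEN_RATIO)
    cost_en = api_cost(WORKLOAD, 1.0, PRICE_PER_1K)
    cost_da = api_cost(WORKLOAD, DANISH_TOKEN_RATIO, PRICE_PER_1K)

    print(f"Danish window holds ~{window:,.0f} English-equivalent tokens")
    print(f"Context lost vs. English: {loss:.0%}")
    print(f"English cost: ${cost_en:.2f}, Danish cost: ${cost_da:.2f}")
```

Run as a script, this prints about 5,634 English-equivalent tokens, a 30% context loss, and costs of $3.00 versus $4.26. Note that the context penalty (about 30%) is smaller than the cost penalty (42%), because a 42% increase in tokens shrinks the usable window by 1 − 1/1.42, not by 42%.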

Bada boom. This is not a tiny advantage for companies in the US and UK, compared with the EU, the Middle East, and most of Asia. If Brodersen’s results generalize to other languages, there’s a 42% penalty on AI use in businesses operating in any language other than English. (Chinese-speaking companies likely escape this penalty if using homegrown AI tools.)

Companies operating in small languages must spend much more money on AI than English-speaking companies. (Midjourney)

4 Recommended Courses on AI for UX

We are finally starting to see several good, in-depth courses on how to use AI in UX work. It was clear to me a year ago (after the release of GPT-4) that these courses were needed, but the people I advised to create early courses on AI for UX didn’t bother doing so. Thus, UX practitioners were left to their own devices during the first year of AI. It’s better now!

Here are 4 courses I like, sorted by price:

Ioana Teleanu (Senior AI Product Designer at Miro): AI for Designers. (6-week online course that requires a $22/month membership. Since the course takes 6 weeks, we can estimate the cost at $44 if you remember to cancel once you’re done. They’re probably counting on most people to forget to cancel, but since you read my newsletter, you’re smart.)

Greg Nudelman (Distinguished Designer at Sumo Logic, but more important, one of the few Big Thinkers about AI & UX): UX for AI, A Framework for Product Design. (In-person, prices vary by location, but $300 at the upcoming UX Lisbon conference on May 22, when you buy it as an add-on to the conference registration.) Nudelman also has a specialized course on AI in Search UX ($495, in-person, Virginia, April 25), but I am not counting this as one of my 4 recommended courses because search design is too specialized for most UX professionals.

Alexandra Jayeun Lee (Director of Research and Data Science at Microsoft): AI for UX Research. (In-person, March 27, New York City, $995.)

Yuval Keshtcer (Founder, UX Writing Hub): The AI Design Academy. (8-week online course, $999.)

Even though I like all 4 courses, Nudelman’s and Lee’s in-person events are hard to get to for most people. Realistically, the choice comes down to Teleanu and Keshtcer. Teleanu’s is so cheap that it’s a no-brainer, but I think you should take both. There are no firm answers yet in AI, so getting two perspectives will be useful. And AI matters so much that getting a good grip on it now is essential. (I wrote in June 2023 that UX needs a sense of urgency about AI, but most UX’ers are still not moving fast enough.)

I recently discussed a set of free LinkedIn short courses on AI. I know you can’t beat free, but I still think you should consider one of the more in-depth courses above.

In-person vs. online courses? Both are good options, but the good in-person courses on AI and UX are not presented often enough. (Ideogram)

Live Webinar with Jakob Hosted by the UX Design Institute: This Wednesday

The UX Design Institute is hosting a live event with me about current topics in UX this Wednesday, March 27 at

12pm USA Pacific time

3pm USA Eastern time

4pm São Paulo

7pm London/Dublin/Lisbon

8pm Paris/Berlin

9pm Athens

11pm Dubai

Participation is free, but advance registration is required.

Suno Release 3 Improves the Quality of AI-Generated Songs

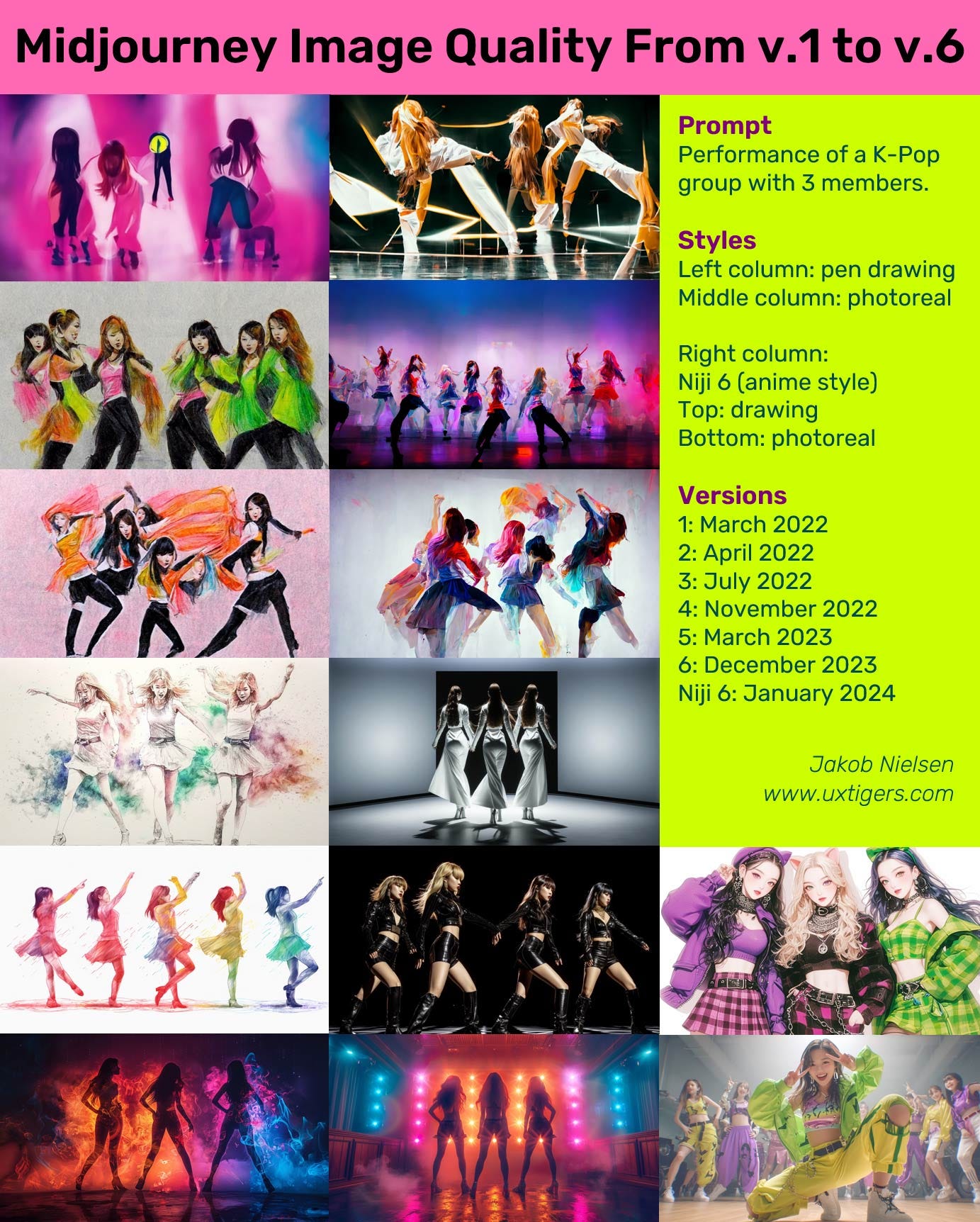

Suno’s release 3 launched yesterday. The net has been raving about the improvements in song quality. I do think the songs are better than before, but I am not overwhelmed by the step up, compared, for example, to the improvements in image quality Midjourney has given us with each new release.

Here are four songs about two of my 10 usability heuristics: Two were created with Suno v.2 and two were created with v.3.

Songs about Jakob Nielsen’s usability heuristic number 5: “Error Prevention,” created with Suno v.2:

First song about error prevention (Suno v.2):

Second song about error prevention (Suno v.2):

Songs about Jakob Nielsen’s usability heuristic number 6: “Recognition Rather than Recall” (also known as the 3R’s of usability), created with Suno v.3:

First song about the 3R’s (Suno v.3):

Second song about the 3R’s (Suno v.3):

(See the full list of all Jakob Nielsen’s 10 Usability Heuristics.)

We can create better and better music with AI. Still not at the level of an expensive band, but exciting for educational purposes and to add a dimension to multi-media authorship. (Midjourney)

For comparison, the leap in image quality between versions of Midjourney seems more impressive to me:

In Midjourney's office hours yesterday, they said that the improvement in image quality from the current v.6 to the forthcoming v.7 will be bigger than the improvement from v.5 to v.6. This was the company itself talking, so take this claim with a grain of salt. But you can see in the above screenshots from v.1 to v.6 that their progress has been huge in the past, so I somewhat believe them.

The bad news: it seems to be slow going to get v.7 out the door, so we’ll have to wait.

About the Author

Jakob Nielsen, Ph.D., is a usability pioneer with 41 years of experience in UX and the Founder of UX Tigers. He founded the discount usability movement for fast and cheap iterative design, including heuristic evaluation and the 10 usability heuristics. He formulated the eponymous Jakob’s Law of the Internet User Experience. He has been named “the king of usability” by Internet Magazine, “the guru of Web page usability” by The New York Times, and “the next best thing to a true time machine” by USA Today. Previously, Dr. Nielsen was a Sun Microsystems Distinguished Engineer and a Member of Research Staff at Bell Communications Research, the branch of Bell Labs owned by the Regional Bell Operating Companies. He is the author of 8 books, including the best-selling Designing Web Usability: The Practice of Simplicity (published in 22 languages), the foundational Usability Engineering (26,790 citations in Google Scholar), and the pioneering Hypertext and Hypermedia (published two years before the Web launched). Dr. Nielsen holds 79 United States patents, mainly on making the Internet easier to use. He received the Lifetime Achievement Award for Human–Computer Interaction Practice from ACM SIGCHI and was named a “Titan of Human Factors” by the Human Factors and Ergonomics Society.

· Subscribe to Jakob’s newsletter to get the full text of new articles emailed to you as soon as they are published.

· Read: article about Jakob Nielsen’s career in UX

· Watch: Jakob Nielsen’s 41 years in UX (8 min. video)
