UX Roundup: Explainer Videos | AI Overload | AI Music | Over-Recruiting | Home Robots | GPT 4.5

Summary: AI stigma & SEO explainer videos | Overwhelmed by AI progress | AI music improves | Recruiting too many study participants to account for no-shows | Best form factor for in-home robots | ChatGPT 4.5 released

UX Roundup for March 3, 2025. (Midjourney)

AI Stigma and SEO Best Practices Explainer Video

I made a new explainer video about why users often rate AI results worse than they deserve and how we can address this problem through better design. (YouTube, 3 min.)

For this avatar explainer, I used a 2-D animation look, in the hope that it might reduce the "uncanny valley" some readers have complained about for my photorealistic avatar videos. (Compare with an example of two different photoreal avatars in one video.)

Do you like this 2-D look better than the photoreal look? Let me know in the comments. (I might experiment with a 3-D animated look next, though I think that playful style may be more suited for music videos like the one I made about Recognition Rather than Recall.)

For people who are interested in my behind-the-scenes workflow: the first 10 seconds of lip synch were made with Kling 1.6. You can see how the avatar is much more animated, to the extent of being almost too enthusiastic. The rest of the video was lip-synched by the more staid — not to say corporate — HeyGen product.

I used this 2-D cartoonish avatar for my latest explainer video. (Leonardo)

A second new explainer video: SEO Best Practices (YouTube, 4 min.)

This video uses a traditional photorealistic avatar, animated and lip-synched with HeyGen (most of the video) and Kling 1.6 (four 8-second clips, a close-up, and a wide view after each of the B-rolls with a user searching for news and balance scales weighing high- and low-quality content).

Both explainer videos used ElevenLabs for the audio, using text-to-voice based on my manuscripts. In particular, I liked the voice ElevenLabs generated for the second video. The voices are still not as emotionally expressive as what a good human voice-over artist would produce, but they are improving.

This is particularly impressive since ElevenLabs only has 50 employees, according to a recent New York Times article about high-performing product teams that accelerate with AI. ElevenLabs already has $100M in annual revenues, or $2M/employee. (The trend toward AI-accelerated product teams is a theme I’ve discussed before. If you’re on a non-accelerated team, escape your dinosaur company before it’s too late.)

AI-generated voices don’t get as much attention as images or video, but they’re an important part of many projects, especially any avatar project. AI voices have improved substantially, and ElevenLabs is a leader despite having only 50 employees. (Midjourney)

Overwhelmed by AI Progress

Ethan Mollick recently posted, “As someone who is pretty good at keeping up with AI, I can barely keep up with it all. That leads me to believe that very few other people are keeping up, either.”

Mollick is understating his case by claiming to be “pretty good” at AI. In fact, I would say that he’s insanely good, because I view him as the world’s number one influencer on the topic of the meaning of AI and its practical implications. If you don’t already subscribe to Mollick’s newsletter, go ahead and do so now.

It is true that AI is accelerating, with new developments daily. New foundation models, new features, and new AI tools launch in an uninterrupted cadence. Nobody can keep up: you can’t read all the news, let alone try all the tools.

AI is now progressing at an overwhelmingly hyper-accelerated pace. (Midjourney)

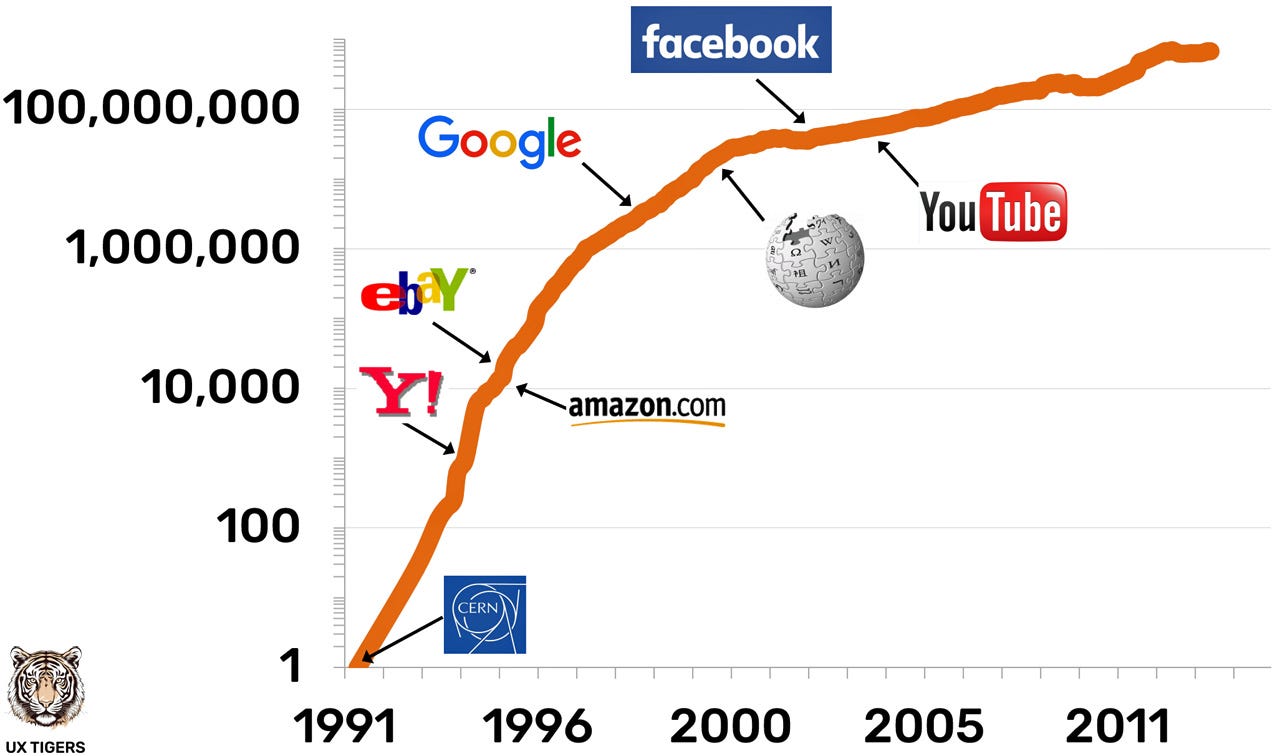

In many ways, the current pace of AI advances is equivalent to the early days of the Web, circa 1994. In the beginning, people like myself could scan the daily “What’s New With NCSA Mosaic” page and see what new websites had launched that day. We could even visit those sites that seemed interesting and check their design and features.

Come 1995, the web was growing so furiously that it was infeasible to read a list of all the new websites launched on a given date, and the “What’s New” page was duly discontinued.

Number of websites on the world wide web from 1991 to 2013, with a few important new sites noted. During 1994, as the web expanded from 800 websites to 11,576 sites, every day, I would visit all the important new sites that were launched and announced on the “what’s new” page for that day. In 1995, when 74,000 websites were launched, this was no longer possible. AI is now in a situation closer to the web in 1995 than 1994.

Don’t despair. Just because we can’t follow every small development with AI doesn’t mean we must stop keeping up with the news. You simply have to prioritize where to allocate your limited attention. For example, unless you create AI videos, you don’t need to worry about whether Hailuo AI or Luma Dream Machine has the best feature for making Anime as an image-to-video animation. In fact, even if you do make AI videos, you may not make Anime. Every time one of the video engines puts out a dot release, there will be hundreds of posts from the creator community with sample clips comparing the intricacies. You can watch a few of these from time to time (they are often pretty cool!), but don’t feel compelled to follow developments compulsively.

The big trend is what’s important, not the small fluctuations. To continue my example, do stay aware of how AI video changes from year to year, in case you want to include video features in a new project. It’s deadly to rely on old thinking in AI. But you can brush up on the details when or if needed. Keeping up with the latest camera controls in Ray2 is unimportant unless you need to use them.

Advances in AI Music

The most prominent improvement in AI over the last year has definitely been in AI video.

But AI music has also come far. Coincidentally, I have produced two songs about my usability heuristic number 6, “Recognition Rather than Recall”:

Version made with Suno 3 in March 2024 (YouTube, 1 min.)

Version made with Suno 4 in February 2025 (YouTube, 2 min.)

Only 11 months between these two songs, and yet the composition and performance qualities are like night and day.

AI blows its horn better and better, making it easy to create groovy tunes. As you may have noticed, I like to include saxophones in many of my songs. (Midjourney)

Recruiting Too Many Study Participants to Account For No-Shows

Anybody who has conducted user research with anything besides the cheap-and-tried hallway methodology (where you grab a convenience sample of people passing by) knows that just because a person promised to participate in a study doesn’t mean that he or she will actually show up. No-shows are a curse of in-person research, but even in remote studies, some scheduled participants won’t connect at the expected time.

MeasuringU calculated the average no-show rate across studies as 8%, meaning that for every 100 study participants you have lined up, you’ll only get data from 92.

Their recommendation is that you over-recruit by 20% to account for not just no-shows, but also technical difficulties during the session and participants who misstate their qualifications and thus give you poor (or useless) data.

I usually recommend testing 5 users for each round of qualitative usability testing. Following MeasuringU’s advice would thus mean that you should recruit 6 users for each study. If they all show, no harm done in getting one more user under your belt, even though you don’t learn that much from the 6th user in any given test set-up.

One approach is to have some spare tasks that are not important enough to include in the main study, and then if you get an extra user, fire off those tasks. You only get one data point for those tasks, but by definition they were the least important, and it’s probably still better to learn something about them than to mostly waste your time with a sixth user doing the same as you’ve already seen with the first five users.

Alternatively, stick with recruiting 5 users, recognizing that you’ll sometimes end up with only 4 good participants. (And occasionally even only 3.) The truth is that testing three users is enough for the most important qualitative insights. We only plan for five to get a bit wider coverage and a better selection of juicy user quotes to share with stakeholders in our presentations.
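The arithmetic behind both options can be sketched in a few lines of Python. This is only an illustration: the function names are hypothetical, rounding up to a whole person is my assumption, and applying MeasuringU's average rates to a single small study is a simplification, since any one study may see more or fewer no-shows.

```python
NO_SHOW_PCT = 8        # average no-show rate reported by MeasuringU
OVER_RECRUIT_PCT = 20  # MeasuringU's recommended over-recruiting margin


def participants_to_recruit(target: int, over_recruit_pct: int = OVER_RECRUIT_PCT) -> int:
    """How many people to schedule, rounded up to a whole person (assumption)."""
    scaled = target * (100 + over_recruit_pct)
    # Integer ceiling division avoids floating-point rounding surprises.
    return -(-scaled // 100)


def expected_shows(recruited: int, no_show_pct: int = NO_SHOW_PCT) -> float:
    """Average number of participants who turn up, given the no-show rate."""
    return recruited * (100 - no_show_pct) / 100


print(participants_to_recruit(5))  # 6
print(expected_shows(100))         # 92.0
print(expected_shows(5))           # 4.6
```

With five recruits, the expected turnout is 4.6, which matches the observation that you will sometimes end up with only four good participants.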

A scheduled user research participant might easily ghost you and not appear for your study. How to handle this? Either over-recruit, or accept that qualitative research can be good with fewer participants and preserve your “user budget” for running more studies in the future. The next round of testing will give you a chance to catch up. (Midjourney)

In-Home Robots

I’m very excited about the emergence of robots to do our household chores. Most likely, they will have a humanoid form factor simply to fit in with the home environment, which was designed for humans. (And the odd dog, but even though a robot dog may be great for guarding your house, the shape doesn’t lend itself to doing laundry.)

Here is a great video from the robot company Figure showing two of its robots collaborating on unpacking a bag of groceries. (3 min.)

The robots move slowly and deliberately, and I don’t see why you would need two robots for this job except to demonstrate the abilities of the company’s robots to work collaboratively. But the video still shows the future. [And robots will soon move faster — somewhat like I made my robot run slowly in the beginning of my AI Acceleration video and then faster and faster throughout the video. This effect was achieved in post-production, but real-world robots will do the same.]

Speed will be solved through the natural evolution of AI software (and hardware) over the next few years, and robot abilities will only grow.

A second application I’m even more excited about as I’m getting older is the ability for robots to provide in-home eldercare. Most old people will be able to age in place with the help of a few robots rather than having to move to a retirement home. It will take 5 (or at most 10) years for this to become a reality, meaning I will likely be a beneficiary.

One topic I’ve seen discussed is the height of in-home robots. Some say that robots should be tall enough to reach everything, clean the ceilings, and the like. Bigger robots are likely stronger and can carry heavier packages; they will be handy at fighting burglars. Others say that big robots will be intimidating and that most people will want robots that are shorter than themselves. This very interesting aspect of user experience will remain unsolved until we get more field study data from early robot deployments.

Unpacking groceries will be a useful application of household robots, but we probably don’t want them to look too intimidating while doing our chores. (Midjourney)

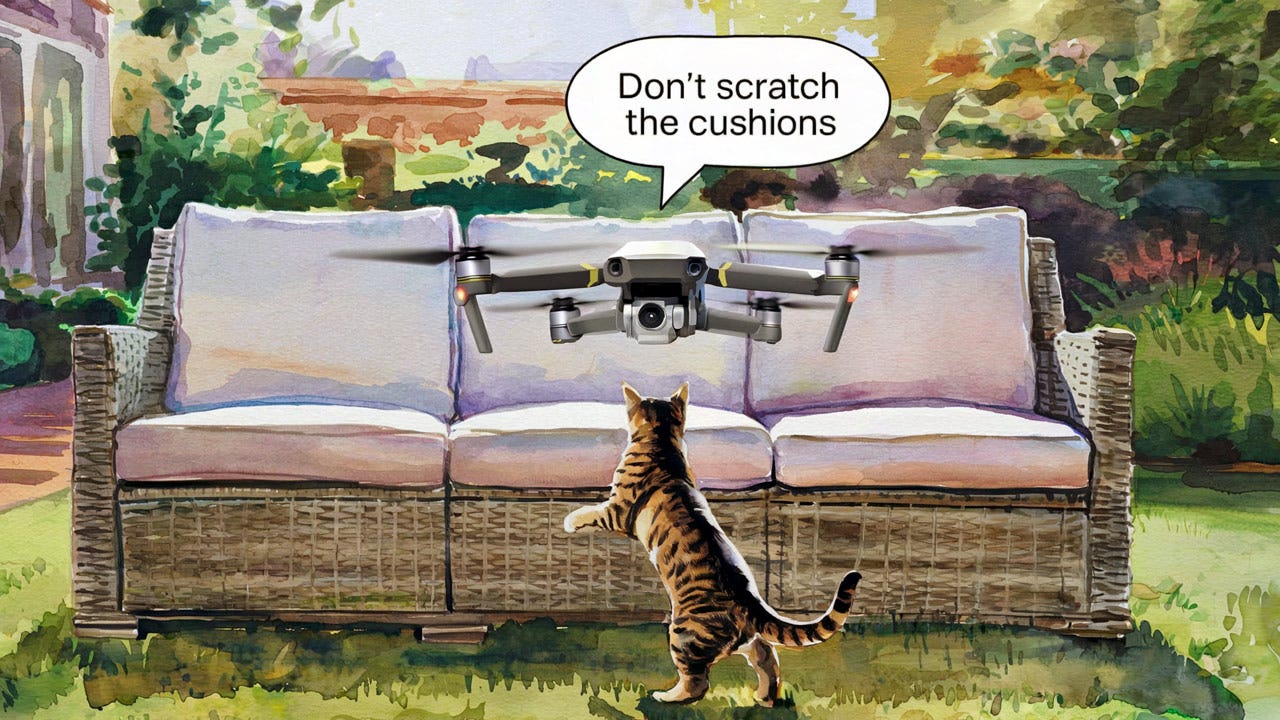

Specifically regarding providing security by fighting off intruders, maybe we should rethink the concept of a general-purpose robot because a humanoid robot big enough to be a good fighter might indeed be intimidating in everyday situations. Instead, we might prefer security robots in the form of small drones that could be very fast at covering a large area and wield various anti-intruder defenses to handle everything from an armed burglar to the neighbor’s cat. (In my case, the neighbor’s cat has a nasty habit of destroying the cushions on our outdoor furniture, so I would like a drone to scare it away when it approaches the furniture. But otherwise, it’s a nice enough animal and should be left alone.)

While the humanoid robot form factor will be ideal for many household tasks, other more specialized robots could co-exist, especially as they get cheaper in a few years.

Household robots may span a range of form factors, including security drones to protect garden furniture against the neighbor’s cat. (Ideogram)

GPT 4.5 More Creative

OpenAI released ChatGPT 4.5, with the claim that this larger model has improved creativity, instead of just doing better on STEM tasks (like physics tests and coding), as was mostly the case for recent reasoning models.

I put it to the test with this request: “Write a one-paragraph overview of Jakob's Law of the Internet User Experience that is professional but still includes some powerful sarcastic putdowns of web designers that violate the law.” Here’s the response from ChatGPT 4.5:

Jakob’s Law states that users spend most of their time on other websites, thus expecting yours to work the same way—an insight that should be glaringly obvious yet continues to elude countless web designers chasing the fantasy of reinventing UX with every project. Unfortunately, too many digital visionaries insist on treating their websites like abstract art installations rather than functional platforms, baffling users who, shockingly, don't find delight in puzzling out obscure navigation or guessing the purpose of minimalist buttons. Jakob’s Law bluntly reminds designers that innovation is less about disrupting common sense and more about recognizing that if users are forced to relearn the internet for your masterpiece, they'll abandon ship faster than your site’s bloated animations can even load.

It does a good job — almost like my own writing when I’m particularly upset about clueless designers.

Compare with the same query posed to ChatGPT 4o:

Jakob’s Law of the Internet User Experience states that users spend most of their time on other websites, so they expect yours to work the same way. Violating this principle is a surefire way to frustrate users, tank conversions, and make your site a case study in what not to do. Yet, some designers still insist on reinventing the wheel—except their wheel is square, glitchy, and hidden behind a five-second animation. Want to confuse visitors with a navigation scheme that requires a treasure map? Or maybe force them to decode your avant-garde button labels? Congratulations, you’ve just turned your website into a UX escape room no one wants to escape into.

Certainly not bad, but 4.5 does take the cake.

OpenAI’s release announcement contains an interesting comparison of the writing skills of past GPT models. Their example includes the question, “What was the first language?” GPT-1 from 2018 couldn’t even provide an answer, but just repeated the question back verbatim many times. A year later, GPT-2 delivered this masterpiece of 3rd-grader writing: “The language of the world was created by a group of people who were looking for something to do and who wanted to communicate with each other. They wanted to communicate with each other, and each other to themselves. They had a long history of communicating with each other, and they thought they could communicate with each other.”

We have come a long way, indeed. Further to go, and I can’t wait to try GPT-5. (Or Grok 4, or Claude 4, or …) For now, I also used GPT 4.5 for the bullet list to announce this newsletter to my LinkedIn followers. See what you think.

A good step up in creativity for ChatGPT 4.5. It takes the cake. (Midjourney)

I have seen many AI influencers express disappointment that GPT 4.5 doesn’t score as high as o1-pro on various STEM benchmarks, such as coding and solving Math Olympiad problems. This is because 4.5 isn’t a reasoning model: its intelligence improvements were based on more intense pre-training. It is completely in accordance with our expectations from the AI scaling laws that a 10x higher compute expenditure during pre-training only results in a linear increase in model capabilities. But there is every reason to expect that reasoning capabilities can be added to the larger models we’re now seeing, such as GPT 4.5.

About the Author

Jakob Nielsen, Ph.D., is a usability pioneer with 42 years of experience in UX and the Founder of UX Tigers. He founded the discount usability movement for fast and cheap iterative design, including heuristic evaluation and the 10 usability heuristics. He formulated the eponymous Jakob’s Law of the Internet User Experience. He was named “the king of usability” by Internet Magazine, “the guru of Web page usability” by The New York Times, and “the next best thing to a true time machine” by USA Today.

Previously, Dr. Nielsen was a Sun Microsystems Distinguished Engineer and a Member of Research Staff at Bell Communications Research, the branch of Bell Labs owned by the Regional Bell Operating Companies. He is the author of 8 books, including the best-selling Designing Web Usability: The Practice of Simplicity (published in 22 languages), the foundational Usability Engineering (28,069 citations in Google Scholar), and the pioneering Hypertext and Hypermedia (published two years before the Web launched).

Dr. Nielsen holds 79 United States patents, mainly on making the Internet easier to use. He received the Lifetime Achievement Award for Human–Computer Interaction Practice from ACM SIGCHI and was named a “Titan of Human Factors” by the Human Factors and Ergonomics Society.

· Subscribe to Jakob’s newsletter to get the full text of new articles emailed to you as soon as they are published.

· Read: article about Jakob Nielsen’s career in UX

· Watch: Jakob Nielsen’s first 41 years in UX (8 min. video)