10 Foundational Insights for UX

Summary: UX has come a long way since its early beginnings at Bell Labs in the 1940s. As we enter Year 2 of the AI revolution, it’s clear that the core principles of UX design are not being replaced but are evolving with AI integration.

In this article, I will discuss 10 foundational insights in user experience (UX). These insights are numbered from one to ten, but this numbering does not indicate priority or importance. Rather, it roughly follows a chronological sequence, with earlier numbers generally corresponding to earlier historical developments. However, in a few cases, I grouped closely related topics together for coherence.

Before AI vs. After AI

Before discussing the 10 principles, let’s consider the impact of artificial intelligence (AI) on UX. AI represents a fundamental shift in user experience, and I perceive three distinct changes brought about by this technology.

The first change pertains to the UX profession itself. AI will revolutionize how we conduct UX work by dramatically boosting our productivity, enhancing our deliverables, and expanding our capabilities through generative user interface creation. This transformation will be particularly pronounced in teamwork scenarios, as AI’s benefits extend beyond individual productivity gains.

The increased efficiency enabled by AI will likely result in smaller teams achieving greater effectiveness. In the next five to ten years, tasks that currently require a team of 100 people might be accomplished by just 10 individuals. This shift carries significant implications, such as a reduction in management hierarchy. Instead of multiple layers of management, a single manager will suffice for a small super-team.

This pancaking of UX (flattening the organization into fewer layers) will eliminate much of the existing bureaucracy, leading to more efficient operations and significantly faster product delivery. Consequently, we'll be able to serve users better by iterating and delivering solutions faster than ever before.

From a career development perspective, this evolution in UX work is crucial. Professionals must position themselves effectively within these super-efficient product delivery teams. While it may take about five years to establish these new working methods fully, those who engage in this process during the transition period will develop “superpowers” in product delivery. Conversely, individuals who are not currently part of an AI-accelerated product team risk falling behind in the future landscape of UX.

The second major change brought about by AI relates to the user interfaces we deliver to users or customers. These interfaces will incorporate a substantial AI component, a topic I'll explore in more depth, mainly under insights 4 and 10.

The third change concerns UX principles, this article’s focus. Interestingly, I believe this area will see the least drastic change. The underlying principles of UX are rooted in human behavior and cognition, not machine functionality. This is why many of the examples and discoveries I’ll discuss, despite being historical, remain highly relevant today and will continue to be crucial in the coming years. Even as we witness significant shifts in team structures, product delivery methods, and the nature of the products themselves, users will remain fundamentally unchanged: they will still be human.

1. Empirical Data from Representative Users

🌟 Bell Labs, 1947.

The first of our 10 fundamental insights is the need for empirical studies and data derived from observing real users. This insight emerged from Bell Labs in the 1940s, a remarkably early period in computer history. Bell Labs, the research arm of the telephone companies in the United States, was the world’s premier high-tech laboratory for decades, responsible for numerous groundbreaking inventions from radio telescopes to cellular telephony. Later, I worked at Bell Communications Research, the branch of Bell Labs owned by the regional Bell operating companies.

(All the UX insights we think are “obvious” today originally had to be discovered by pioneers who did not know the current well-established UX process. In this article, I aim to credit the pioneers who made the first major contribution to each of the 10 insights.)

One of the first Bell Labs insights was the necessity of studying users. While this may seem obvious to UX professionals today, it was a revolutionary concept at the time. This understanding forms the foundation of modern UX and stems from the fundamental recognition that “you are not the user.”

When I say “you,” I mean anyone on a design team. These individuals possess extensive knowledge about their products and technology in general. It’s natural to assume that since we’re all human, we can accurately judge what works well for other humans. However, this assumption is flawed.

It’s impossible to simply erase your knowledge and pretend you don’t know what you do. Your understanding of your company, product, and technology informs how you interpret what you see on a screen. Consequently, something that seems obvious to you might be entirely opaque to an outside customer who lacks that background knowledge.

Watch users perform tasks with your design and take notes about what works well or poorly: that has been the basis for the empirical approach to design ever since Bell Labs hired John E. Karlin in 1947. (Midjourney)

To illustrate this point, let’s examine one of Bell Labs’ early projects: the design of the touch-tone telephone. This innovation replaced the rotary dial telephone with push buttons, allowing for faster number entry. However, the layout of these buttons needed to be designed.

Bell Labs tested many designs with real users, having them enter phone numbers. I'll highlight three of these designs:

The design users preferred: a simple layout that appealed to testers aesthetically.

The fastest design: the layout we use today, which proved most efficient for entering phone numbers.

The design with the lowest initial error rate: a layout mimicking the familiar rotary dial.

Three of the possible layouts for the pushbutton telephone keypad that were tested by the Bell Labs Human Factors Department. Redrawn after the original figure in the Bell System Technical Journal, July 1960 (R. L. Deininger: “Human Factors Engineering Studies of the Design and Use of Pushbutton Telephone Sets”).

A crucial methodological insight from this study was that designing for users cannot be based solely on their stated preferences. Users preferred the aesthetically pleasing design, which was actually slower to use. Instead, Bell Labs implemented the fastest design in 1963.

This decision has had a lasting impact. For 60 years, phone companies worldwide have used this same design. It has even been adopted for virtual keypads on smartphones and other devices. I estimate this design has been used approximately 40 trillion times since its implementation.

By conducting empirical studies and choosing the fastest method for entering phone numbers, rather than relying on user preferences, Bell Labs’ early UX work has saved humanity an estimated one million person-years that would have been wasted on slower number entry.
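The two estimates above (about 40 trillion uses and about one million person-years saved) can be cross-checked with a line of arithmetic. The Python sketch below is a back-of-envelope check, not the calculation behind the estimate: it assumes a person-year means one full calendar year of round-the-clock time, and the function name is hypothetical.

```python
# Back-of-envelope check of the keypad estimate, using only the two figures
# quoted in the text. ASSUMPTION: one person-year = one calendar year of
# continuous (24-hour) time, including leap days on average.

SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # 31,557,600 seconds


def implied_seconds_saved_per_use(person_years_saved: float, uses: float) -> float:
    """Return the average time saved per phone-number entry implied by the totals."""
    total_seconds_saved = person_years_saved * SECONDS_PER_YEAR
    return total_seconds_saved / uses


if __name__ == "__main__":
    per_use = implied_seconds_saved_per_use(person_years_saved=1e6, uses=40e12)
    print(f"Implied saving per phone-number entry: {per_use:.2f} s")
```

Under that assumption, the two totals imply a saving of a bit under one second per phone number entered, which shows how a tiny per-use gain compounds into an enormous total when a design is used trillions of times.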

This example underscores the importance of observing users rather than simply asking them what they want. People often don’t know what’s best for them — a realization that forms the cornerstone of modern UX practice. While this insight may seem self-evident today, it was a groundbreaking discovery made by the pioneering UX team at Bell Labs.

2. Business Value of UX

🌟 Bell Labs, 1950.

The next fundamental insight is the business value of UX, which also originates from the early days of Bell Labs.

I mentioned that the telephone keypad design has saved humanity one million person-years. This is commendable, but from a business perspective, why would the phone company care about humanity? The answer is that fast phone-number entry saved the phone company itself a great deal of money.

To understand this, remember how telephony systems functioned in those early days. A household’s telephone was connected via wire to a “central office,” which housed enormous, expensive electromechanical switching equipment. When a customer picked up their phone, received a dial tone, and began entering the phone number, they occupied resources on that switch. The call would be placed only after entering the full phone number, freeing up those resources for another customer to make a call. Consequently, if people were slow at entering phone numbers, they tied up the central office switch for extended periods.

Slow entry of phone numbers would have required the phone company to build more switches to meet customer demand for making calls, costing millions of dollars. By implementing a better user interface design, they saved substantial money in provisioning central office equipment.
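The link between dialing speed and equipment cost can be illustrated with Little's law from queueing theory: the average number of resources in use equals the rate at which customers arrive multiplied by how long each one holds a resource. The Python sketch below applies it under stated assumptions: the traffic rate and dialing times are made-up illustrative numbers, not Bell System data, and the function name is hypothetical.

```python
# Hypothetical illustration of why slow dialing costs switching capacity.
# Little's law: average occupancy = arrival rate x average holding time.
# ASSUMPTION: all traffic figures below are invented for illustration.


def average_busy_resources(call_attempts_per_second: float,
                           dialing_seconds: float) -> float:
    """Average number of switch resources held by customers who are still dialing."""
    return call_attempts_per_second * dialing_seconds


if __name__ == "__main__":
    peak_attempts = 20.0  # hypothetical call attempts per second at one central office
    for layout, seconds in [("slower layout", 12.0), ("faster layout", 8.0)]:
        busy = average_busy_resources(peak_attempts, seconds)
        print(f"{layout}: about {busy:.0f} resources busy on average")
```

Cutting dialing time by a third cuts average occupancy by a third, and that reduction translates directly into less equipment to buy. Real provisioning also has to absorb random peaks in demand (for example, using Erlang traffic formulas), which this sketch deliberately ignores.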

The phone company had numerous other examples of cost-saving through design. Although I worked there many years later — I'm certainly not old enough to have been involved in the 1940s work — I know they undertook many projects where even small time savings had significant financial impacts. For instance, improving the efficiency of directory assistance by just one second saved the U.S. telephone companies $7 million annually. (Directory assistance allowed customers to call an operator and ask for a person's phone number in another town, which the operator would then look up and provide.)

These improvements were implemented, demonstrating the substantial monetary value of better UX for the phone company. Since then, we’ve seen this principle extend to e-commerce companies and many other industries, all recognizing the financial benefits of superior UX design.

The power of UX methods lies not just in creating better interfaces, but in their potential to generate substantial cost savings and extra sales.

UX creates tremendous business value by making better products that sell more and by improving the usability of enterprise tools, leading to increased employee productivity. Better usability is like going to work with an extra set of arms: you get more done in the same amount of time. (Leonardo)

3. Discount Usability

🌟 Jakob Nielsen, 1989.

Throughout my career, I’ve focused on maximizing the deployment of user-centered design on practical design projects. This led me to develop the concept of “discount usability” — fast and cheap ways to improve user interfaces. There’s a common saying in project management that you can have things good, fast, or cheap — but you can only pick two. The assumption is that if it’s good and fast, it’ll be expensive, and so on.

While this principle generally holds true, usability is a notable exception. By employing these fast and cheap methods that I and others have worked hard to develop, we can actually achieve all three: good, fast, and cheap. Here's why: the quality of the user experience in the final product is primarily driven by the number of design iterations you go through during the development process.

More iterations mean you can test out more design ideas, ultimately improving quality. So, how do you squeeze in more iterations when working with a fixed timeline before launch? The answer is simple: by making each iteration fast and cheap.

This is where discount usability really shines. It allows us to have it all — a rare and welcome scenario in our field. By embracing these methods, we can rapidly iterate, refine our designs, and deliver high-quality user experiences without breaking the bank or missing deadlines.

In essence, discount usability proves that in the world of UX, we can indeed have our cake and eat it, too. It’s a game-changer allowing us to deliver better products more efficiently, benefiting users and businesses alike.

Save money, move fast, and create great design. Discount usability methods allow us to have it all. (Ideogram)

4. Augmenting Human Intellect

🌟 Doug Engelbart, 1962.

Insight number four is augmenting human intellect, which was Doug Engelbart’s main research goal. Engelbart is more widely known today as the inventor of the computer mouse, which is now standard on desktop computers. Indeed, I once had the opportunity to handle Engelbart's original mouse from the 1960s, a big, clunky, heavy device quite different from today's small plastic versions. But the mouse was not his main project.

Engelbart invented the mouse as a side project because he wanted a quick way to point to things on the screen. It turns out that the mouse is indeed the best and fastest way to do this. However, his primary focus was on augmenting human intellect. By augmentation, he meant uplifting our intelligence, making us more insightful and able to handle more complex tasks.

Engelbart worked on this concept for decades and invented many interesting UI advances. However, his system was fundamentally impractical due to the technical limitations of the time. For decades after the 1960s, computers didn't truly make us capable of more insightful work. They enabled us to work faster, which is already very good: word processors are superior to writing by hand, and Excel spreadsheets are far better for calculating numbers than a calculator or mental math. So yes, computers have accomplished many great things, but they haven't augmented human intellect, at least not until the advent of AI.

AI is that augmentation device that Engelbart started working on so many years ago. It genuinely helps human intellect and enables us to generate better insights and be more creative. For example, it’s a huge boost for creativity and ideation. With AI, ideation is essentially free because it can generate so many ideas so quickly, and it’s continually improving.

Right now, we are in year 2 of the AI era. Soon enough, maybe in five years, we will get superhuman AI whose intelligence surpasses that of humans in certain ways.

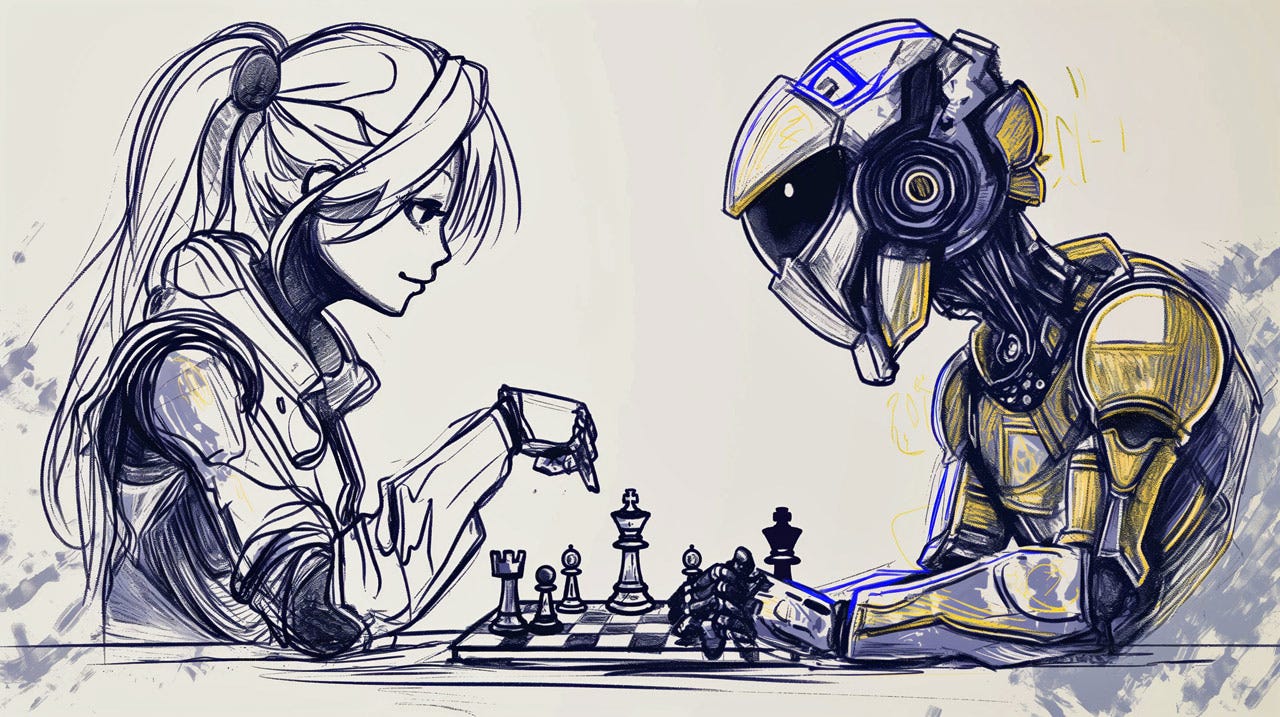

This superhuman intelligence will augment us even more, because the optimal use will be a synergy, collaboration, or symbiosis between humans and AI that is superior to either alone. We know this from domains where AI already has superhuman abilities, such as chess and Go. Thirty years ago, whether a computer could beat a chess grandmaster, or even the world champion, was a big deal. For many years, they couldn’t, but since 1997, computers have beaten the world chess champion, and today no human can realistically hope to beat a top chess program.

But it turns out that a human and AI together play even better. The synergy or symbiosis of humans and AI is superior to humans alone. It is also superior to AI alone because it results in more creative gameplay when you combine those two different ways of thinking.

Since IBM’s Deep Blue defeated Garry Kasparov in 1997, no human has been as good as AI at playing chess. But the combination of a human player and chess-playing AI is better than either alone, meaning that AI augments the human intellect in the domain of chess. (Midjourney)

The same is true in UX design. AI can generate a flood of ideas, but humans can be better at prioritizing them and figuring out how to combine them into a design strategy. So, synergy is really the way to use AI in UX. This idea goes all the way back to Engelbart’s concept of augmenting, not replacing, human intellect.

5. GUI/WIMP

🌟 Xerox PARC, 1974.

I'm crediting the graphical user interface (GUI) to Xerox PARC, as they were the main inventors, though many others were also involved. The Apple Macintosh commercialized the GUI a decade later, but the research, invention, and ideas originated in the 1970s, primarily at Xerox PARC.

The graphical user interface is sometimes referred to as WIMP design, an acronym standing for Windows, Icons, Menus, and Pointing Device. On mobile devices, the pointing device tends to be a finger, but otherwise, it’s mainly that mouse Doug Engelbart invented.

Interestingly, each of these four WIMP elements could exist independently of the others. That's precisely what Doug Engelbart did: he invented the mouse but used it without icons or any visual graphics on the screen. It was just pure text, and he would click on the words he wanted. You could have icons without a mouse, too — just pretty pictures on the screen illustrating commands. Menus could exist without pictures or a mouse, where you’d simply type in a number corresponding to your desired menu item. In fact, many computers operated this way before the advent of the GUI.

But when you combine these elements, it’s almost magical. They reinforce each other, creating a much smoother interaction. As I mentioned earlier, the Apple Macintosh in 1984 marked the commercial launch of this idea. For the subsequent 40 years — a vast stretch of time in the tech business — the graphical user interface has been essentially the only way to design user interfaces effectively. It’s just that superior.

In a graphical user interface, we can see the items we want to interact with and touch or click them directly. (Midjourney)

However, with the arrival of AI in the last couple of years, we’ve lost a lot of that usability. AI interfaces tend to be command-line based, which is really a reversion to DOS and Unix — those terrible user interfaces we abandoned for good reason. As a result, there’s a significant usability deficit in current AI tools due to their departure from the graphical user interface.

I have a strong prediction: we will see a hybrid user interface in the future. AI won’t be purely chat-based, because chat is a clunky, awkward way of driving an interaction compared to clicking options or dragging sliders to adjust parameters. We're already seeing the beginnings of this in tools like Midjourney, which has started introducing such elements in its web-based interface. So GUI-AI is definitely on the horizon.

Don't get too sidetracked by the chat-based interface, which is a temporary solution that allowed companies to roll out AI quickly without building the proper UI they needed. I should also point out that these AI products have suffered terribly from a lack of good UX involvement. AI is currently built by brilliant engineers, and all credit to them for their technical prowess. But they don’t know user interfaces. They don’t know how to design for regular people, resulting in interfaces that are clunky and difficult to use.

I firmly believe the future of AI lies in the hybrid user interface. We'll reintroduce many of the graphical user interface elements that lead to superior usability, creating a more intuitive and efficient AI interaction experience.

6. Hypertext

🌟 Ted Nelson, 1965.

Hypertext was invented by Ted Nelson in 1965. As with many groundbreaking ideas, hypertext’s initial conception was ahead of its time. While insightful, it wasn’t immediately practical due to technological limitations of the era. (The first major commercial hypertext system was Apple’s HyperCard in 1987.)

Hypertext is essentially the technology underlying the web. At its core, hypertext connects pieces of information across an information space through a remarkably simple interaction technique: you click, you get. This straightforward principle forms the foundation of how the web functions. Every web page presents a list of links, and users navigate to another web page with a single click.

This elegant interaction technique has proven to be incredibly powerful. Today, it serves as the cornerstone for virtually every website. The ubiquitous “click and get” nature of hypertext has revolutionized how we interact with and consume information online.

Hypertext connects related documents (such as web pages) so that users can click on a link and instantly be transported across hyperspace. You click, you get. (Ideogram)

7. Information Foraging

🌟 Peter Pirolli & Stuart Card, 1995.

The rise of the web brought an incredible benefit: the ability to connect millions or billions of pieces of information through that single interaction technique of hypertext. However, this abundance of information also presented a significant challenge. The sheer volume of data is intimidating and overwhelming, making it easy for users to feel lost.

This leads us to an important question: How do people navigate these vast information spaces? In the past, when we were limited to storing perhaps a hundred documents on a floppy disk, this wasn’t an issue. But the web changed everything.

Enter the theory of information foraging, developed by Peter Pirolli and Stuart Card. This theory explains how humans move through large information spaces, and interestingly, it draws parallels with animal behavior in nature.

Pirolli and Card discovered that the way people navigate information spaces is remarkably similar to how animals hunt for food in the forest. Imagine a wolf in the woods, faced with two potential paths. How does it decide which way to go? It sniffs the trail, trying to catch the scent of its prey — let’s say rabbits. The wolf will follow the path with the strongest scent of rabbit, hoping it leads to its quarry.

A predator sniffs the trails to discover the best way to a tasty rabbit. Web users behave like wild animals and sniff the links on web pages to discern which lead to the most tasty information. (Midjourney)

Users behave similarly online, albeit metaphorically. They “sniff” the links, trying to discern what’s called “information scent.” This scent communicates to users that something valuable awaits at the other end of the link. It’s a crucial process because life is too short to click on every link on the internet. Users must be selective.

The way users select is by evaluating the links available to them and judging what they might find if they follow them. This means links must effectively communicate their destination — by emitting a strong information scent. The theory of information foraging explains this behavior remarkably well, providing valuable insights into how users interact with and move through large information spaces online.
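To make the idea of “sniffing” links concrete, the Python sketch below scores each link's text against the user's goal and follows the strongest scent. The scoring is an assumption made for illustration: plain word overlap (Jaccard similarity) is a crude stand-in for information scent, and the published information-foraging models are considerably richer. All function names and example links are hypothetical.

```python
import re

# ASSUMPTION: information scent is approximated by Jaccard word overlap
# between the user's goal and the link text. Real scent models also credit
# related words, which this sketch does not.


def words(text: str) -> set[str]:
    """Lowercase set of alphanumeric words in a piece of text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def scent(goal: str, link_text: str) -> float:
    """Crude information-scent score in [0, 1] for one link."""
    g, l = words(goal), words(link_text)
    if not g or not l:
        return 0.0
    return len(g & l) / len(g | l)


def follow_strongest_scent(goal: str, links: list[str]) -> str:
    """Pick the link whose text best matches the goal (ties go to the earlier link)."""
    return max(links, key=lambda link: scent(goal, link))


if __name__ == "__main__":
    goal = "return policy for shoes"
    links = ["About us", "Shipping and returns", "Shoes return policy", "Click here"]
    print(follow_strongest_scent(goal, links))
```

Here the simulated user picks “Shoes return policy.” A vague link like “Click here” scores zero for any goal, which is the point of the theory in miniature: links must announce their destination. The sketch also shows the limits of naive matching, since “Shipping and returns” scores zero only because “returns” is not literally “return.”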

8. Irrational Users

🌟 John M. Carroll, 1987.

John M. (“Jack”) Carroll introduced the “paradox of the active user” in 1987. This insight challenges the assumption that people behave rationally when interacting with user interfaces.

Carroll found that users don’t behave in the rational ways we might have expected. For instance, when learning or understanding a user interface, people typically don't want to learn, read, or study help texts or manuals. Instead, they have a strong action bias — they jump the gun.

Users approach web applications or apps with a clear goal in mind. They want to get things done, not learn about the interface. This behavior might seem counterintuitive, especially to those of us who are interested in user interfaces, technology, and how things work. We might enjoy studying the intricacies of a system, but that’s not how most people behave.

The average user doesn’t care about computers, technology, or design. They care about solving their problem. They want to accomplish their task, and that’s their primary focus. So they just dive in and start using the product.

Users have a strong action bias: they jump the gun because they want to start moving through the UI. They don’t want to take time to learn or study anything. (Midjourney)

This behavior is irrational because, logically, users would benefit from spending a few minutes reading introductory material or going through onboarding before using the product. They would make fewer errors, better understand the conceptual mental model, and save much more time in the long run. But that’s not how people behave in reality.

Carroll’s insight emphasizes that people are irrational in these circumstances due to their action bias. As UX professionals, we must design for the way people actually are, not for the way we wish they were or how we think they should logically behave.

Our job is to create interfaces that work well, given users’ natural tendencies, even if those tendencies seem irrational.

Unfortunately, the insight that users are irrational also lies behind many dark design patterns. Unscrupulous designers can exploit it, for example through false urgency, to manipulate users rather than help them.

9. SuperApps and Integrated UI

🌟 WeChat, 2011 (and integrated software like Lotus 1-2-3, 1983).

WeChat from China is the most famous superapp, bringing together essentially all the things you want to do with technology in one user interface.

This approach is great for usability because you don’t have to learn multiple interfaces. However, WeChat wasn’t the first to implement this concept. We can trace it back to integrated software products like Lotus 1-2-3. The core idea of a superapp is to create a single integrated experience rather than a fragmented one.

A key part of software integration is when different apps seamlessly exchange information and work together. (Ideogram)

Unfortunately, today we mostly have fragmented user experiences. This integrated concept is rarely implemented. Your phone has endless icons for different apps that don’t communicate with each other. You open one app for each task you want to accomplish, rather than having a single integrated user experience.

The web is similar. Millions of websites don’t talk to each other or exchange information. One site doesn’t know what you did on another, so you don’t accumulate an integrated user experience.

The lack of integration creates a fragmented user experience for both applications and websites. They don’t talk with each other! (Midjourney)

However, integration can be achieved. WeChat has done it on a large scale, and in earlier days, integrated software products on PCs did it on a smaller scale.

AI might be one of the drivers for better integration. Not the current generation of AI, but the next one, which is supposed to be agent-based. These AI agents may provide us with the glue or methods for integrating underlying individual features.

10. AI and Intent-Based Outcome Specification

🌟 ChatGPT 4, 2023.

For now, the final UX advance is AI, which I count as impacting the UX field starting in 2023 (the release of ChatGPT 4).

Of course, there were many AI products before, and we can trace AI research back almost 70 years, to when John McCarthy coined the term “artificial intelligence” in 1956. I attended my first AI conference in 1988, the Fifth Generation Computer Systems (FGCS) conference in Tokyo. AI has been a topic of academic research for a long time, but for most of that period, AI didn’t work or produce anything particularly useful.

ChatGPT 4 was the first AI product to function exceptionally well, and now we’re seeing even better ones emerge. AI has numerous implications for user interfaces, but the most significant is that it introduces a different interaction paradigm.

Computers have always relied on command-based interaction, where users tell the computer what steps to take. For example, “make this bigger,” “make this a fat line or a thin line,” “make this thing green,” and so on. You issue commands step by step, and the computer dutifully obeys.

The problem with this approach is that the computer does exactly as it's told. Often, we give it the wrong command — not what we actually want it to do, but what we think we want. Unfortunately, these can be different things. Following orders to the letter doesn’t lead to great usability, and it can be a tedious, long process to issue all the commands to achieve your goal.

With AI, we get intent-based outcome specification. We don't tell the AI to follow specific steps; instead, we tell it what result or outcome we want. For example, if you're using an image generation tool like Midjourney, you could say, “I want a monkey eating a banana while riding a bicycle in a circus act.” It will draw that. If you don’t like it, you can say, “Well, I want an elephant riding a bicycle.” Even though that has never existed, AI will still draw the picture.

“An elephant riding a bicycle.” (Midjourney)

“No, I want my image to resemble a real photo of an elephant on a bicycle.” Change your intent specification, and AI will change the outcome accordingly. (Midjourney)

You simply express your intent — what you want — and specify the desired outcome. The AI figures out how to do it. You don't need to issue commands. This is a huge change, the first new interaction paradigm in about 60 years. Computers have always been based on commands, except for the very early days when you had to wire them. But ever since the early operating systems, user interfaces have been command-based. No longer. Now AI supports intent-based outcome specification — a major revolution in user interfaces.

AI makes user interfaces similar to a genie emerging from a magic lantern: you express your wish for what you want the outcome to be, and it’s the genie’s job to figure out how to make this happen. Intent-based outcome specification! (Midjourney)

Another exciting aspect of AI is how it empowers individualization in the user experience. The history of user interfaces has been one of designing for ever-narrower groups of users.

When I started in UX 41 years ago, one of the first things I learned was the importance of designing for the target audience. This concept goes back to the 1980s, maybe even earlier. It’s one of the oldest ideas in user experience: define your target audience and design for them, not for people who aren’t using your product.

In the 1990s, we took the next step with personas, each representing a different group within our target audience. We could then design features for each persona and prioritize them based on factors like profitability.

The next level beyond personas is to look at individual people. In the 2000s, the approach to this was personalization. Unfortunately, personalization doesn’t work well in practice (with a few exceptions, like TikTok).

AI will come to the rescue and create individualized user experiences through generative UI. Just as you can generate a picture of an elephant on a bicycle, you can generate a user interface. Currently, this is done at design time, and human designers need to review and refine the AI-generated designs. But remember, we’re only in year two of practical AI.

In a few years, generative UI will likely be able to create interfaces specifically for individual users on the spot, tailored to what that person needs right now.

Let me give you an example from e-commerce, specifically selling clothes or fashion online. The downside of online shopping is that you can't touch and feel the clothes. The solution? Photos. You provide photos of the outfit — a dress, a suit, a shirt — and customers can look at these images. Fashion websites don’t just show you a photo of a suit on a hanger; they show a model wearing it.

This is better than nothing, but the problem is that these models don’t look like the actual buying customer. It doesn’t show how this suit would look if I wore it, which is what I want to know. I want to buy something that will look good on me, not something that looks good on a fashion model.

In the future, we'll be able to use generative UI to create a photo on the spot of the individual shopper wearing that outfit. Then you can really judge how it’s going to look.

We’re not quite there yet, but Google Shopping has a feature called Virtual Try-On, a step in that direction. It offers a wide spectrum of models with different looks and uses AI to map the outfit onto whichever model most closely matches the shopper. It’s not perfect, but it's substantially better than what we had in the past.

Individualization is one way AI will improve usability. In this specific example, I predict it will increase fashion sales and reduce returns, which are very expensive to process.

Timeline and Future Changes

This timeline chart shows one dot for each of the 10 UX insights in this article, illustrating when each came to be. We have a broad timeline stretching from the 1940s to the 2020s. Half of these insights emerged in the first 40 years, and half came in the second half of this period. Looking at this layout of dots representing fundamental advances in UX tells me one thing: there will be more dots. There will be more fundamental insights. We don’t know everything about UX yet.

The year each of the 10 fundamental insights in UX was developed. One dot per insight.

New insights will emerge in the next 40 years, probably even after that. What will these new insights be? I don't know. If I did, I’d tell you — and they wouldn’t be new. But there will be new, fascinating developments in UX.

I hope I’ve convinced you that the old insights are still important, despite whatever new shiny thing we’ll get in the future. We must still apply these established UX principles to current and future products. Each new insight about UX is cumulative, rather than replacing the previous insights. Our understanding of UX grows over time, advancing our ability to create great design.

Jakob’s Keynote About the 10 UX Insights

Watch the keynote presentation I gave for ADPList about the 10 UX insights. (YouTube, 65 min. video, including Q&A after the talk.)

Infographic to summarize this article. Feel free to reproduce, as long as you give the URL for this article as the source.

About the Author

Jakob Nielsen, Ph.D., is a usability pioneer with 41 years of experience in UX and the Founder of UX Tigers. He founded the discount usability movement for fast and cheap iterative design, including heuristic evaluation and the 10 usability heuristics. He formulated the eponymous Jakob’s Law of the Internet User Experience. He has been named “the king of usability” by Internet Magazine, “the guru of Web page usability” by The New York Times, and “the next best thing to a true time machine” by USA Today.

Previously, Dr. Nielsen was a Sun Microsystems Distinguished Engineer and a Member of Research Staff at Bell Communications Research, the branch of Bell Labs owned by the Regional Bell Operating Companies. He is the author of 8 books, including the best-selling Designing Web Usability: The Practice of Simplicity (published in 22 languages), the foundational Usability Engineering (27,123 citations in Google Scholar), and the pioneering Hypertext and Hypermedia (published two years before the Web launched).

Dr. Nielsen holds 79 United States patents, mainly on making the Internet easier to use. He received the Lifetime Achievement Award for Human–Computer Interaction Practice from ACM SIGCHI and was named a “Titan of Human Factors” by the Human Factors and Ergonomics Society.


· Read: article about Jakob Nielsen’s career in UX

· Watch: Jakob Nielsen’s 41 years in UX (8 min. video)
