2026 Predictions as a Comic Book and Poster Series

Summary: Short and easily digested versions of Jakob Nielsen’s 18 predictions for UX and AI in 2026: a 10-page comic book and a series of 10 posters.

My 18 predictions for UX and AI in 2026 turned into a very long article of almost 10,000 words. I recommend reading the full thing, because details matter. But if you’re pressed for time, here are three fast ways of getting an overview:

Music video about AI & UX in 2026 (YouTube, 5 min.)

A 10-page comic strip

A series of 10 striking posters

I made all the visuals in this article with Nano Banana Pro.

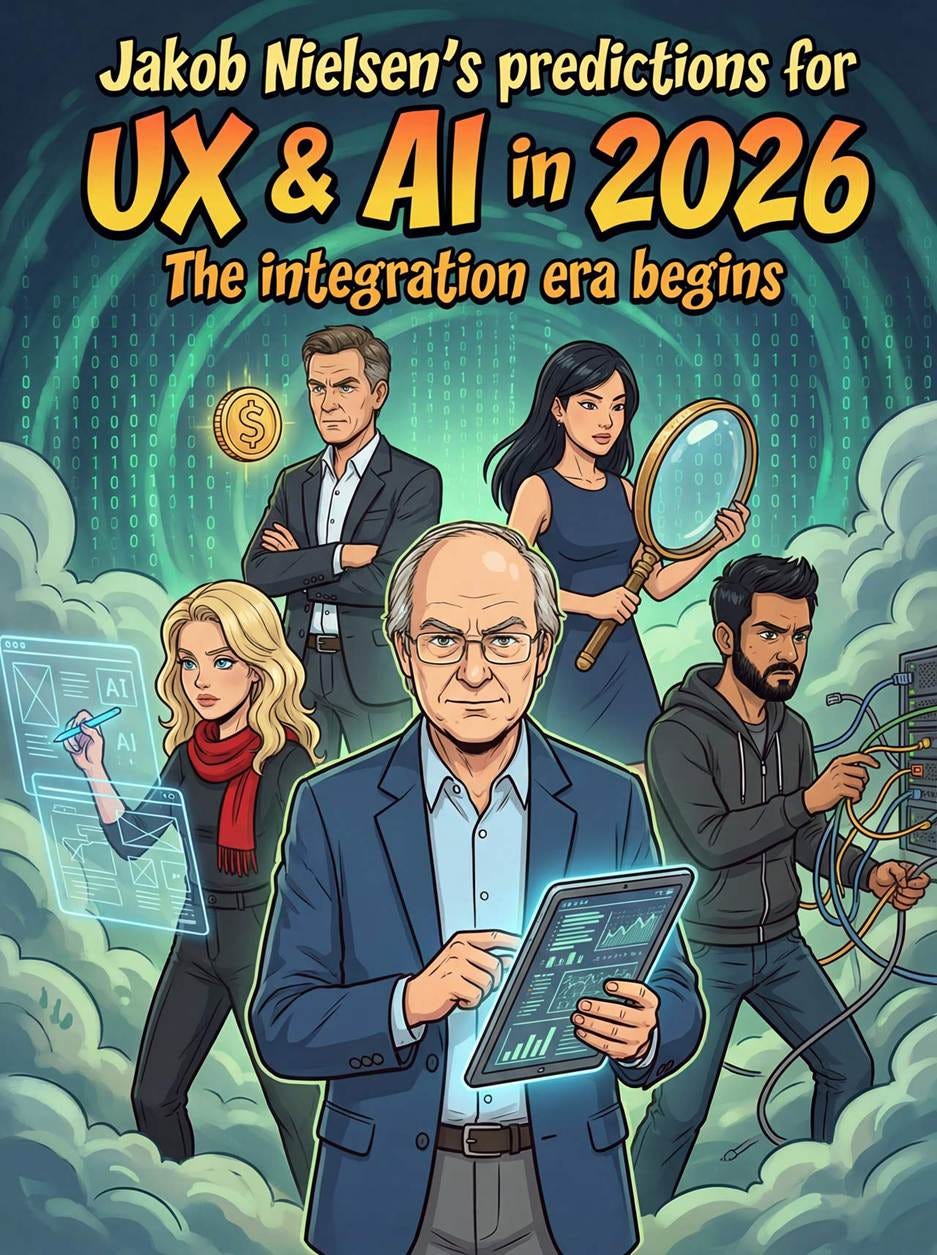

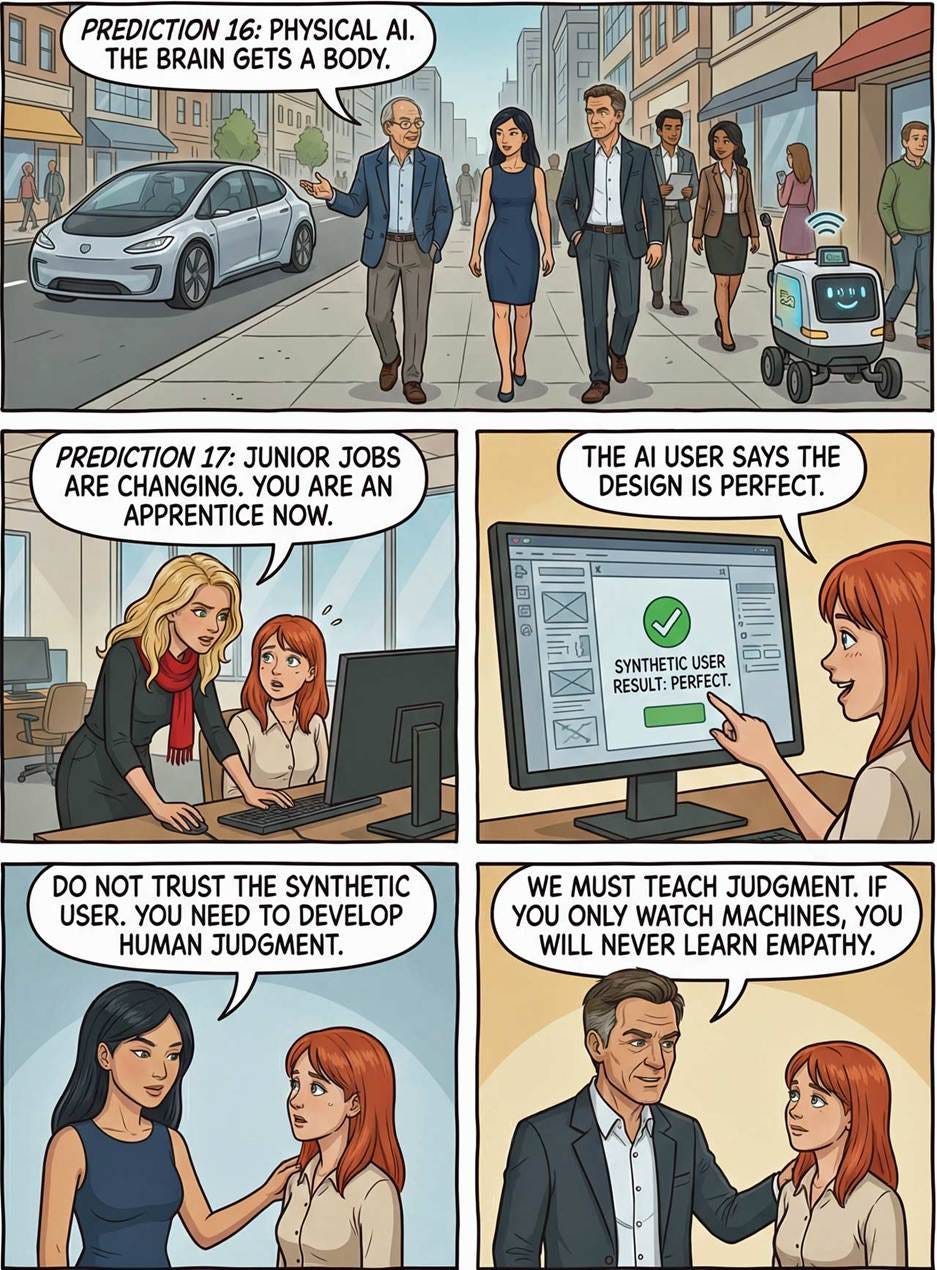

Comic Strip

I drew this comic strip using the same four product-team characters I have used in a few earlier strips, including:

Revisiting my predictions for 2025 (it seemed appropriate to use the same characters for both the 2025 and 2026 predictions!)

It continues to be difficult to get Nano Banana Pro to maintain character consistency across strips, even when uploading character reference sheets for all the main characters. I typically had to render 8–12 versions of each page to get one where all the characters looked right. Even the final versions published here have small flaws: for example, the team geek, Rahul, lost his beard between frames 1 and 5 of the last page.

In total, I needed to draw 120 images to get 10 publishable comic pages. Had I used Google’s API, this comic book would have cost me $16. A bargain! However, I accessed Nano Banana Pro through Higgsfield, where I’m currently benefiting from a Black Friday subscription deal (no longer available, sorry), so I only paid $4 to draw this comic book.

(For comparison, a newly published comic book costs around $5, despite being mass-produced. Printed comic books usually give you 3x the pages of my comic book for that slightly higher price, but we are close to the day when it will be cheaper to get a fully personal comic book drawn just for you than to buy comics from a publisher.)

The following poster series was easier to draw since I wasn’t aiming for consistent posters, just cool ones. In total, I rendered 48 images from which I selected the 10 best to show you, meaning that the poster series cost me about $1.60.
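As a rough check on these numbers, the short Python sketch below derives per-image and per-page costs from the figures quoted above. It assumes the posters were billed at the same Higgsfield per-image rate as the comic (which is what the $1.60 figure implies) and takes “3x the pages” to mean 30 pages; the variable names are illustrative only.

```python
# Back-of-the-envelope check of the rendering costs quoted in the text.
# Inputs come from the article; per-image and per-page figures are derived.
# Assumption: posters were rendered at the same Higgsfield per-image rate.

COMIC_RENDERS = 120              # images drawn to get the publishable pages
COMIC_PAGES = 10                 # publishable pages in the comic book
POSTER_RENDERS = 48              # images drawn for the poster series
GOOGLE_API_COMIC = 16.00         # stated comic cost via Google's API (USD)
HIGGSFIELD_COMIC = 4.00          # stated comic cost via Higgsfield (USD)
PRINTED_COMIC = 5.00             # stated price of a new printed comic (USD)
PRINTED_PAGES = 3 * COMIC_PAGES  # "3x the pages" of the AI comic


def per_unit(total_usd: float, units: int) -> float:
    """Average cost per unit (image or page)."""
    return total_usd / units


google_per_image = per_unit(GOOGLE_API_COMIC, COMIC_RENDERS)
higgsfield_per_image = per_unit(HIGGSFIELD_COMIC, COMIC_RENDERS)

# Poster cost at the (assumed) same Higgsfield per-image rate.
poster_cost = higgsfield_per_image * POSTER_RENDERS

# Per-page comparison: personal AI comic vs. mass-produced printed comic.
ai_per_page = per_unit(HIGGSFIELD_COMIC, COMIC_PAGES)
printed_per_page = per_unit(PRINTED_COMIC, PRINTED_PAGES)

print(f"Google API:  ${google_per_image:.3f} per image")
print(f"Higgsfield:  ${higgsfield_per_image:.3f} per image")
print(f"Posters:     ${poster_cost:.2f} for {POSTER_RENDERS} renders")
print(f"AI comic:    ${ai_per_page:.2f} per page")
print(f"Print comic: ${printed_per_page:.2f} per page")
```

On these assumptions, the personal comic still costs more per page than a printed one (about $0.40 versus $0.17), which fits the claim that the crossover is close but not yet here.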

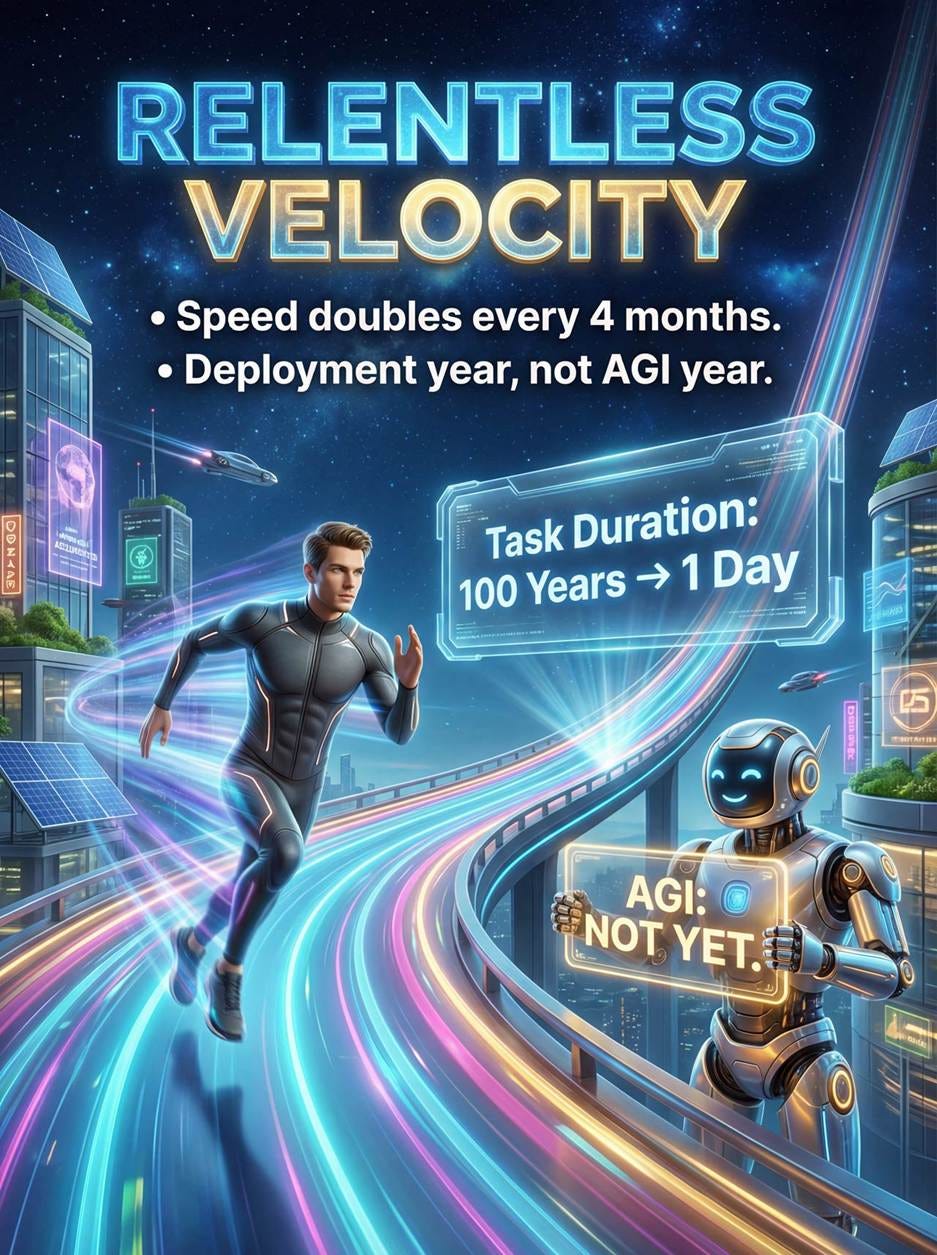

Poster Series

Prediction 1: Accelerating Relentless Change

AI task capabilities are doubling every four months. By late 2025, AI could autonomously perform tasks requiring nearly five hours of human expert time. By the end of 2026, AI will likely handle tasks taking humans 39 hours. By 2030, AI may complete 100 person-year tasks in under a day, fundamentally transforming workflows across industries.
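The 2026 figure follows from simple compounding: if capability doubles every four months, a year brings three doublings, or an 8x increase. The Python sketch below makes that explicit. It assumes “nearly five hours” means 4.9 hours and that “late 2025” to “end of 2026” spans 12 months; it covers only this one step, not the 2030 projection.

```python
# Sketch of the doubling extrapolation behind the 2026 figure.
# Assumptions (not stated precisely in the text): "nearly five hours"
# is taken as 4.9 hours, and late 2025 to end of 2026 as 12 months.

DOUBLING_MONTHS = 4   # AI task capability doubles every four months
START_HOURS = 4.9     # assumed value for "nearly five hours" (late 2025)


def task_horizon(start_hours: float, months: float,
                 doubling_months: float = DOUBLING_MONTHS) -> float:
    """Human-expert task length AI can handle after `months` of steady doubling."""
    return start_hours * 2 ** (months / doubling_months)


end_2026 = task_horizon(START_HOURS, months=12)  # 12 / 4 = 3 doublings = 8x
print(f"End of 2026: about {end_2026:.0f} hours")
```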

Prediction 2: AGI: No

Artificial General Intelligence will not arrive in 2026. Using Chollet’s definition (systems that efficiently acquire new skills for novel problems outside training data), AGI may not occur until 2035. However, superintelligence defined by task capabilities may arrive around 2030. Once superintelligence emerges, recursive self-improvement will accelerate progress dramatically, with AI potentially improving by a factor of 4,000 annually.

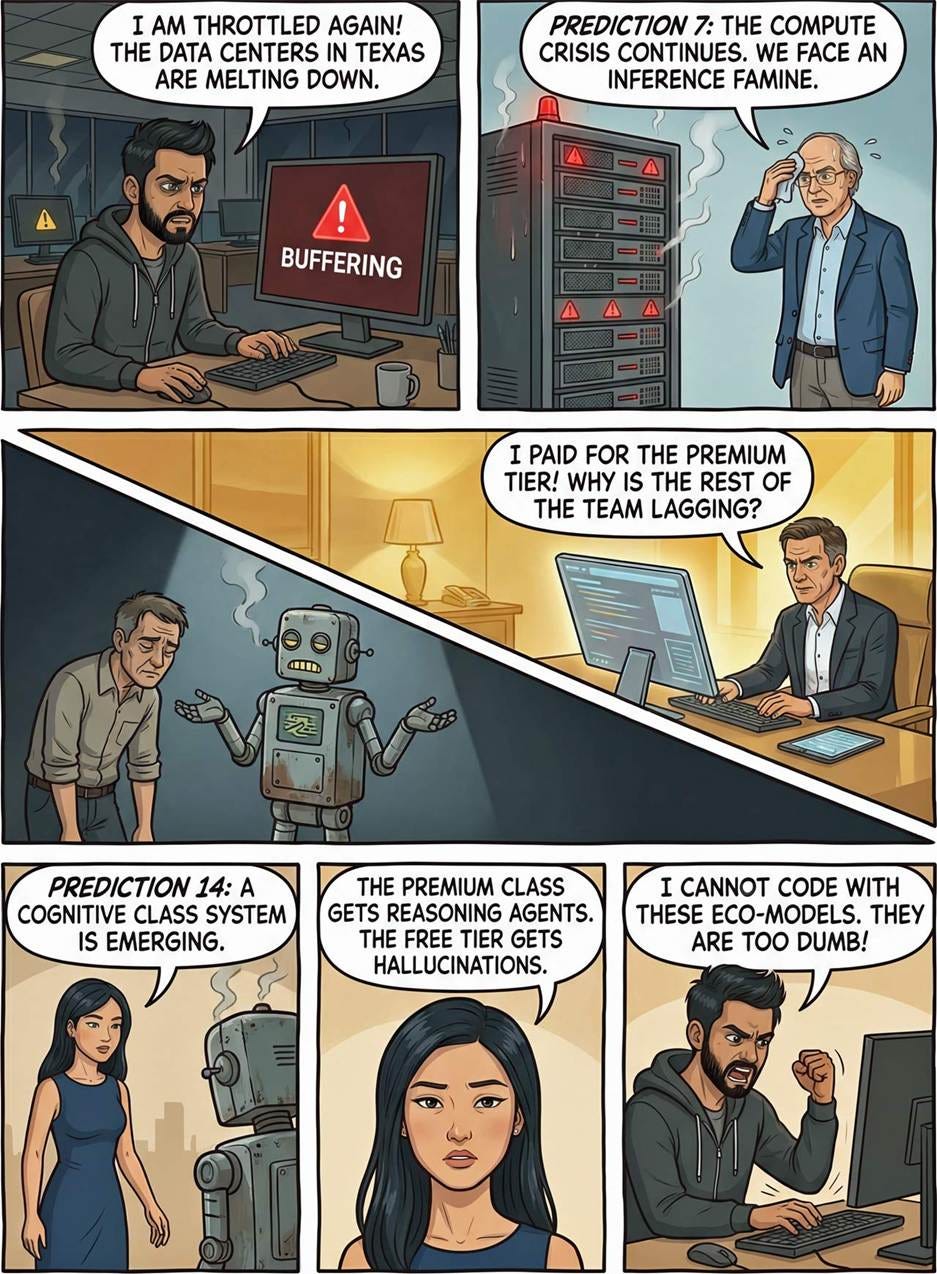

Prediction 7: Compute Crisis Continues

The compute crisis persists as an operating condition shaping what vendors can ship and price. Major investments in energy infrastructure (OpenAI’s 1.2-gigawatt Texas site, xAI’s 2-gigawatt Mississippi data center) prove demand exceeds supply. Jevons Paradox means more capable AI drives more usage. Premium compute becomes a luxury tier with waitlists and surge pricing. “Compute-aware product design” becomes unavoidable.

Prediction 14: The Two-Tier AI World

A cognitive class system emerges, defined by subscription tiers rather than education. A widening gap exists between professionals paying $200 monthly for high-reasoning models and the masses relying on free, limited models. Premium users integrate AI into deep workflows; free-tier users experience hallucinations and conclude AI is overhyped. Ninety percent remain in the free tier, failing to develop the AI literacy required for the modern economy.

Prediction 4: No Moat for AI Labs

Any AI lab advantage remains temporary. Once one lab proves a capability, competitors quickly replicate it. By December 2026, whichever lab leads in any AI area will only be months ahead of second place. Practical implications: be prepared to switch providers frequently, or accept being slightly behind the frontier while enjoying subscription savings. Consider aggregator services for image and video generation.

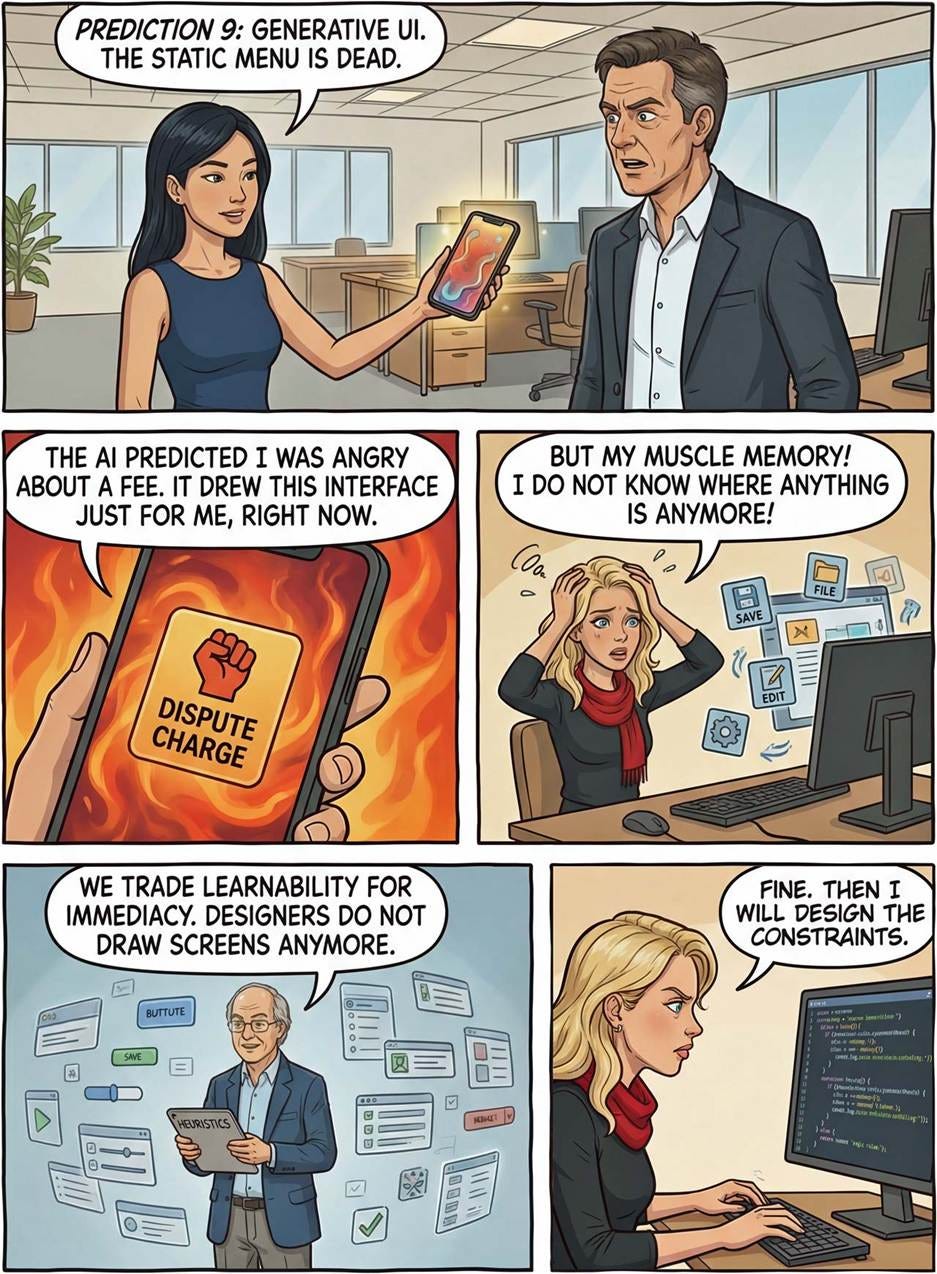

Prediction 12: Single-Mode AI Providers Bought by Multi-Modal AI Labs

The time has passed for building quality single-modality AI models without integration into world models. Image models backed by powerful language models demonstrate superiority. Video and image companies like Flux, Ideogram, Leonardo, Midjourney, and Reve will likely be acquired by multimodal labs such as Google, Meta, OpenAI, and xAI. Or they may simply die. Midjourney’s distinctive style makes it an attractive acquisition target.

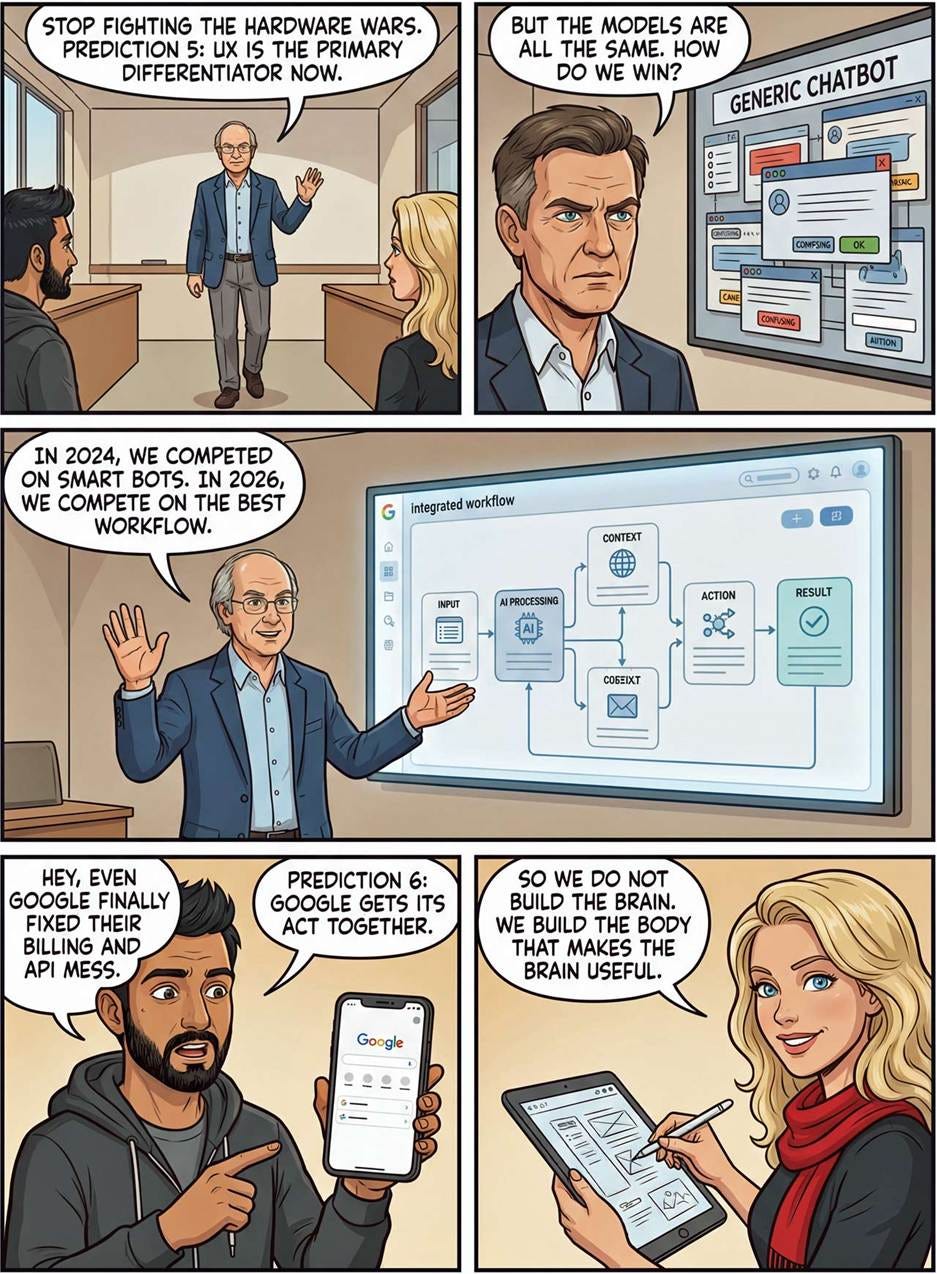

Prediction 5: UX as an AI Model Differentiator

Foundation models have reached convergence in raw reasoning capabilities; the differences are now imperceptible to average users. User Experience will replace model intelligence as the primary differentiator. Companies will compete on workflows rather than smarter bots. Vertical AI platforms wrapping commoditized models in specific, defensible workflows will win. However, AI labs currently lack strong UX teams and thought leadership.

Prediction 6: Google AI Gets Its Act Together

Google will finally create a decent, integrated UX architecture for its many AI products in 2026. Currently, offerings are scattered across locations without coherent organization. The same model appears in different places with different features. API key billing is complex, and there is no simple way to buy extra credits. Competitive pressure from OpenAI, xAI, Anthropic, and Chinese vendors will force improvements.

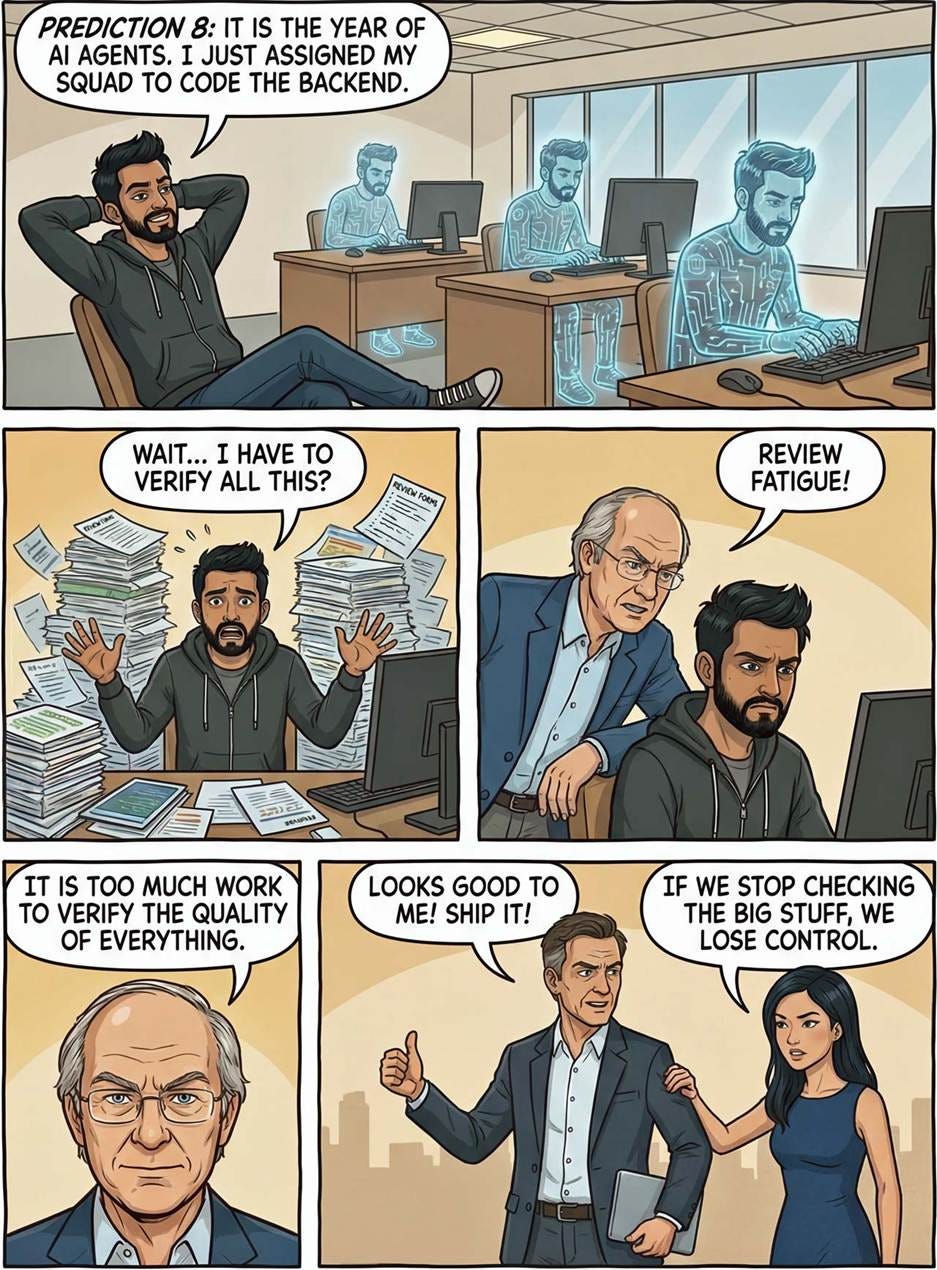

Prediction 8: AI Agents

2026 will be the year of AI agents, shifting from passive chat tools to active agentic systems that plan and execute tasks autonomously. This represents a fundamental shift from conversational UI to delegative UI. Multi-agent systems will collaborate on complex workflows. The dominant enterprise metric shifts from “tokens generated” to “tasks completed autonomously.” The challenge becomes designing audit interfaces for human oversight.

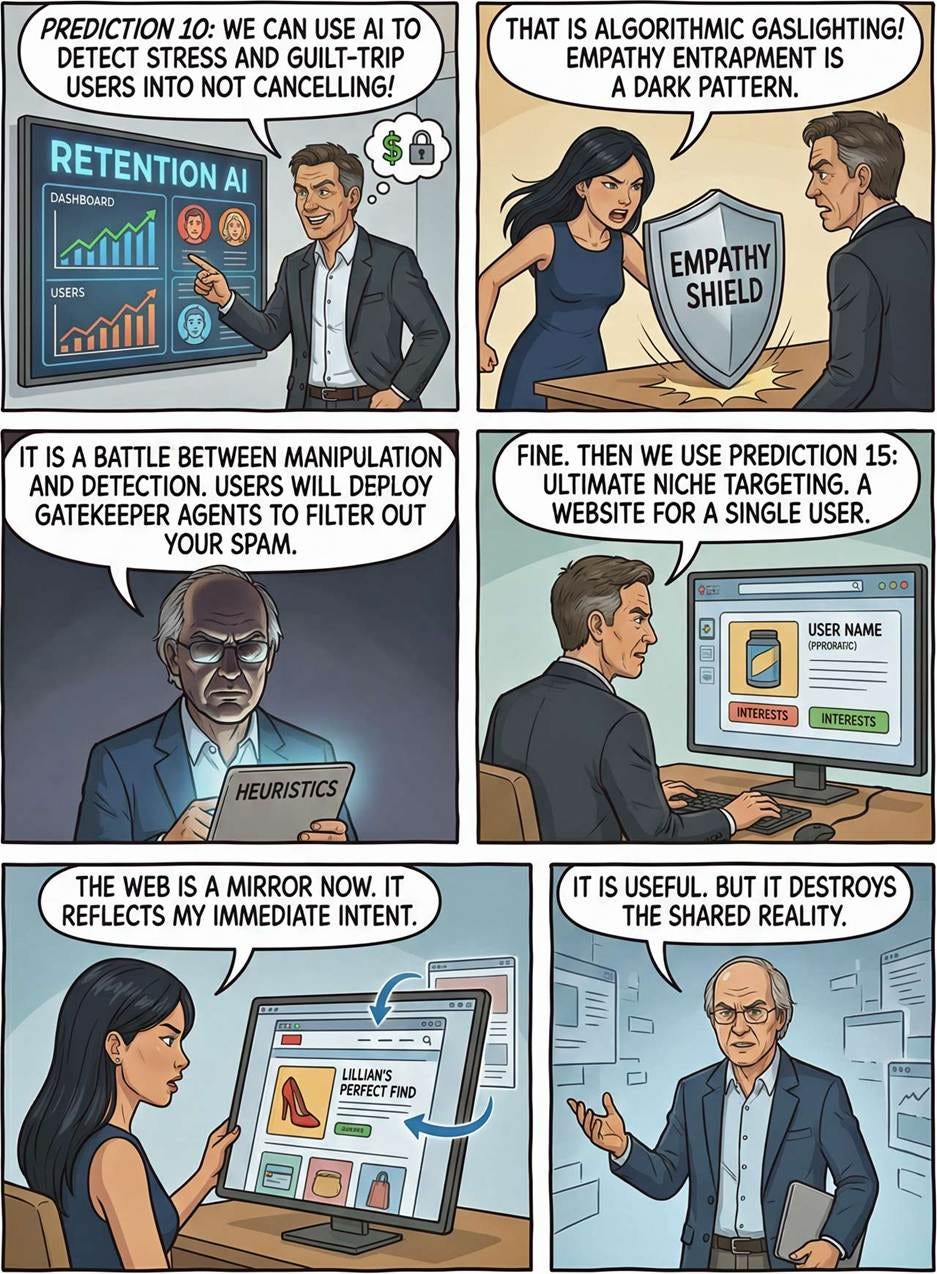

Prediction 9: Generative UI (GenUI) and the Disposable Interface

Static interfaces where every user sees the same layout are becoming obsolete. Software interfaces will be generated in real time based on user intent, context, and history. UX designers will focus on designing constraint systems and design tokens rather than static screens. The hidden cost: the death of muscle memory and of expert performance based on spatial consistency. Learnability is traded for immediacy.

Prediction 11: Multimodal AI

By end of 2026, frontier models will speak, listen, see, imagine, and edit with every modality treated as first-class. The era of text-based Large Language Models ends; we transition to Large World Models. Models will process raw sensory data directly, not by translating to text first. They will develop rudimentary intuitive physics, understanding object permanence, gravity, and cause-and-effect for reliable video generation.

Prediction 13: Editing AI-Generated Images

Image generation shifts from slot-machine randomness to design software with handles, layers, and constraints. When generating “a cat on a sofa,” AI will understand these as distinct entities. Users can click the cat, drag it elsewhere, and AI will in-paint the background while adjusting lighting. Semantic sliders will adjust abstract concepts like mood or lighting hardness, enabling precise editing without re-prompting.

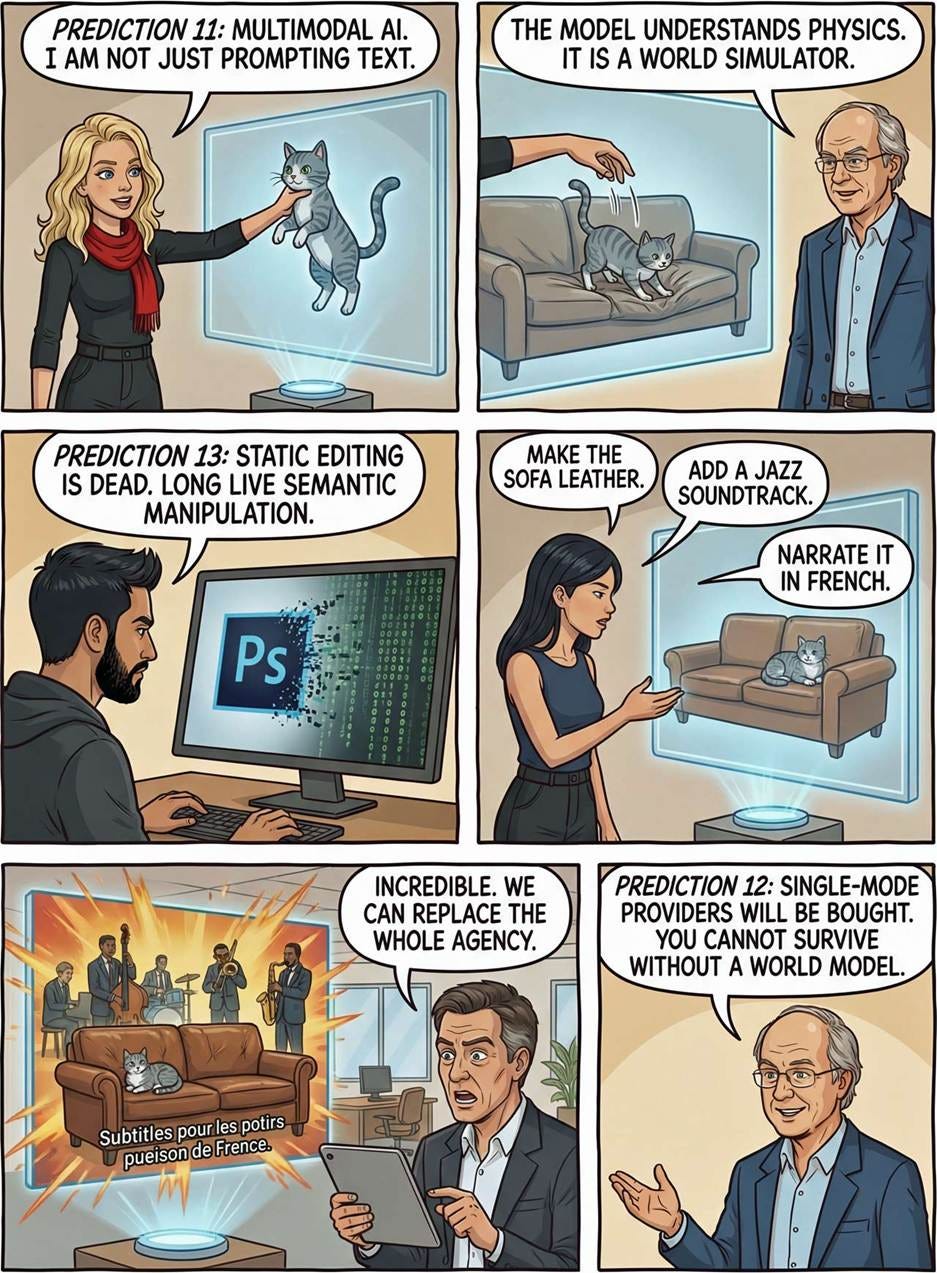

Prediction 15: Ultimate Niche Targeting: One User, Right Now

The concept of “target audience” becomes obsolete. AI enables content, offers, and creative assets assembled dynamically so each person sees a version optimized for their current context. Meta will use AI assistant interactions for ad personalization. Creative and targeting collapse into one optimization layer. Brands ship constraint sets while AI assembles unique combinations of image, copy, offer, and landing page per user.

Prediction 10: Dark Patterns Move to the Model Layer

The most dangerous dark patterns will become persuasive AI systems rather than deceptive buttons. Companies will deploy behavioral dark flows powered by personalization, learning which phrasing increases conversion for individuals. “Empathy entrapment” will use simulated emotions and social obligation to manipulate users. Consumers will respond by deploying defensive “gatekeeper agents” to screen communications and negotiate on their behalf.

Prediction 16: Physical AI: The Brain Gets a Body

2026 sees AI invading the physical world through expanded autonomous vehicle services, moving from pilot zones to multiple cities. Robots will appear in retail, hospitality, healthcare, and logistics. Firefighting drones will save lives. In-home robots remain years away. Xpeng plans mass production of humanoid robots later in 2026 for industrial use, with expansion expected in 2027.

Prediction 3: New AI Scaling Law: Maybe

A new paradigm for scaling AI may emerge in 2026 to parallel existing approaches like pre-training, reinforcement learning, and reasoning models. Rumors suggest Google DeepMind has continuous learning in development, and OpenAI has something major brewing. However, research breakthroughs are unpredictable by nature, so this prediction remains uncertain despite heavy investment attracting top talent to AI.

Prediction 17: The Apprenticeship Comeback

Junior jobs continue dying off as AI performs their tasks better and cheaper. Entry-level UX hiring becomes more apprenticeship-like, with companies hiring fewer generalists and more trainees attached to specific domains. Juniors will be evaluated on decision quality, not output volume. A major risk: relying on synthetic users for testing will produce designers who understand how AI thinks people behave rather than how people actually behave.

Prediction 18: Human Touch as Luxury: No

Predictions that handmade content will become a luxury are wrong, except in rare cases. During the transition, audiences may pay more for legacy actors or musicians. In the long term, content quality matters, not production method. 2026 will see the first breakout hit video game created entirely through natural-language prompts. AI-native games with conversational NPCs will create new genres. Only prostitutes and schoolteachers require humans permanently.

Watch my music video about my predictions for AI & UX in 2026. (YouTube, 5 min.) And compare with the music video I made a year ago with my predictions for 2025. (YouTube, 3 min.) AI avatar animation has progressed substantially in just one year!

About the Author

Jakob Nielsen, Ph.D., is a usability pioneer with 42 years of experience in UX and the Founder of UX Tigers. He founded the discount usability movement for fast and cheap iterative design, including heuristic evaluation and the 10 usability heuristics. He formulated the eponymous Jakob’s Law of the Internet User Experience. He has been named “the king of usability” by Internet Magazine, “the guru of Web page usability” by The New York Times, and “the next best thing to a true time machine” by USA Today.

Previously, Dr. Nielsen was a Sun Microsystems Distinguished Engineer and a Member of Research Staff at Bell Communications Research, the branch of Bell Labs owned by the Regional Bell Operating Companies. He is the author of 8 books, including the best-selling Designing Web Usability: The Practice of Simplicity (published in 22 languages), the foundational Usability Engineering (29,817 citations in Google Scholar), and the pioneering Hypertext and Hypermedia (published two years before the Web launched).

Dr. Nielsen holds 79 United States patents, mainly on making the Internet easier to use. He received the Lifetime Achievement Award for Human–Computer Interaction Practice from ACM SIGCHI and was named a “Titan of Human Factors” by the Human Factors and Ergonomics Society.

· Subscribe to Jakob’s newsletter to get the full text of new articles emailed to you as soon as they are published.

· Read: article about Jakob Nielsen’s career in UX

· Watch: Jakob Nielsen’s first 41 years in UX (8 min. video)