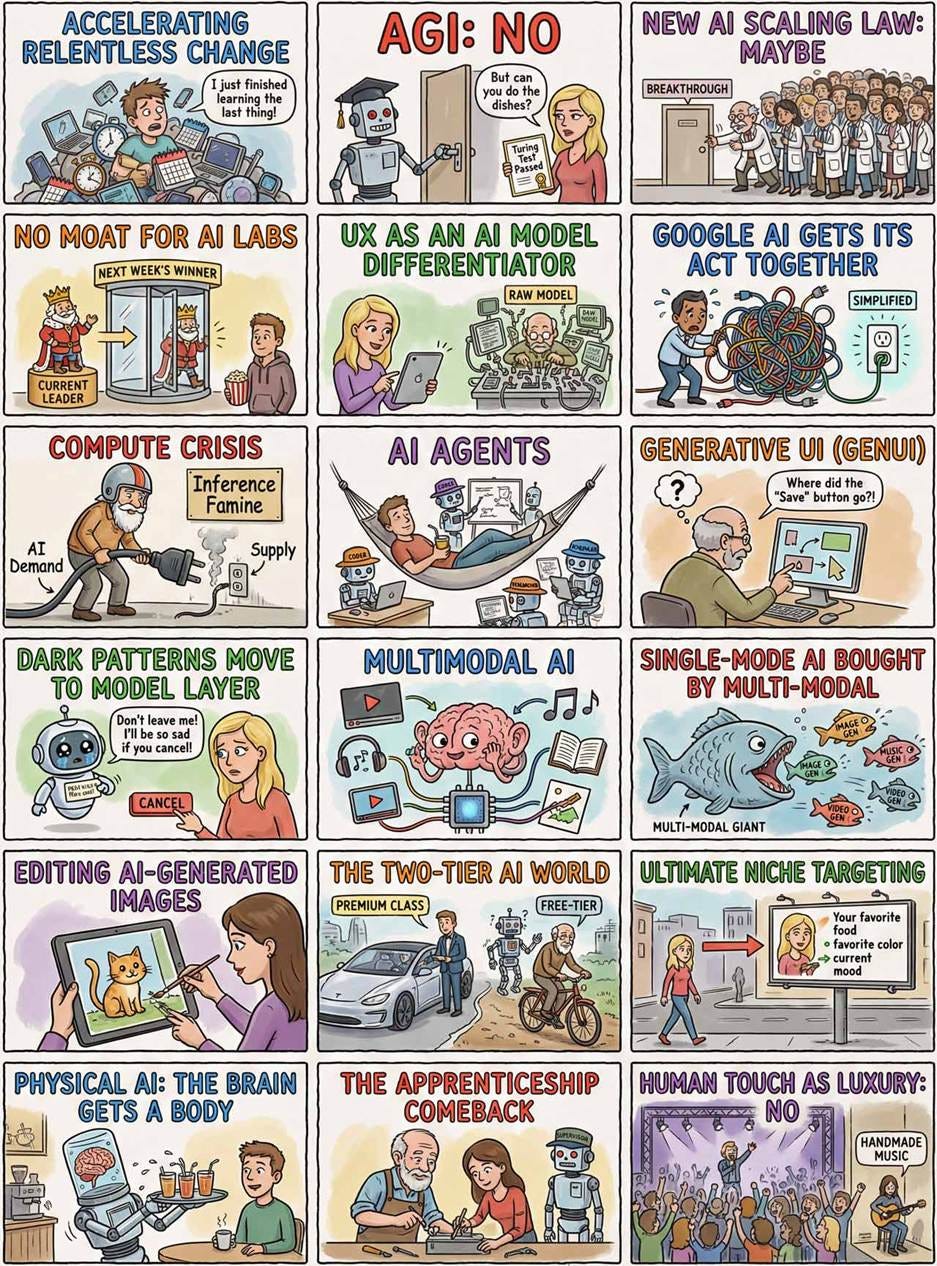

18 Predictions for 2026

Summary: Accelerating AI capabilities will shift focus from raw intelligence to autonomous agents and Generative UI, making UX the primary business moat. Multimodal integration and physical AI will revolutionize workflows, rendering static interfaces and single-purpose tools obsolete. However, progress faces hurdles like compute shortages and a widening class divide between premium and free-tier users.

Let’s peek into Jakob’s crystal ball to see what’s ahead for 2026. (Nano Banana Pro)

This is a long article. For a quick, entertaining summary, watch my jazz music video about these predictions (YouTube, 5 min.).

We have moved past the initial “hype cycle” of Generative AI. 2026 is the year of deployment, infrastructure, and the messy reality of integration.

I have 18 concrete predictions for this year. Limiting one’s thinking to a one-year horizon is tough since most major changes take longer to play out. Consequently, many of my predictions may not fully materialize by December 2026, but I expect to be directionally correct. We will see major movements along these lines, even in a single year.

Predictions are notoriously difficult. As the saying goes, they are particularly hard when they concern the future. I fully expect to be wrong in some of my more specific predictions in this article. There are two opposite ways I am likely to be wrong:

Resistance wins: Some changes, while they may ultimately happen, will be too strongly resisted by sluggish inertia or powerful special interests, causing only limited progress in 2026.

Surprises happen: AI is moving faster than ever, and there are bound to be surprises nobody anticipates.

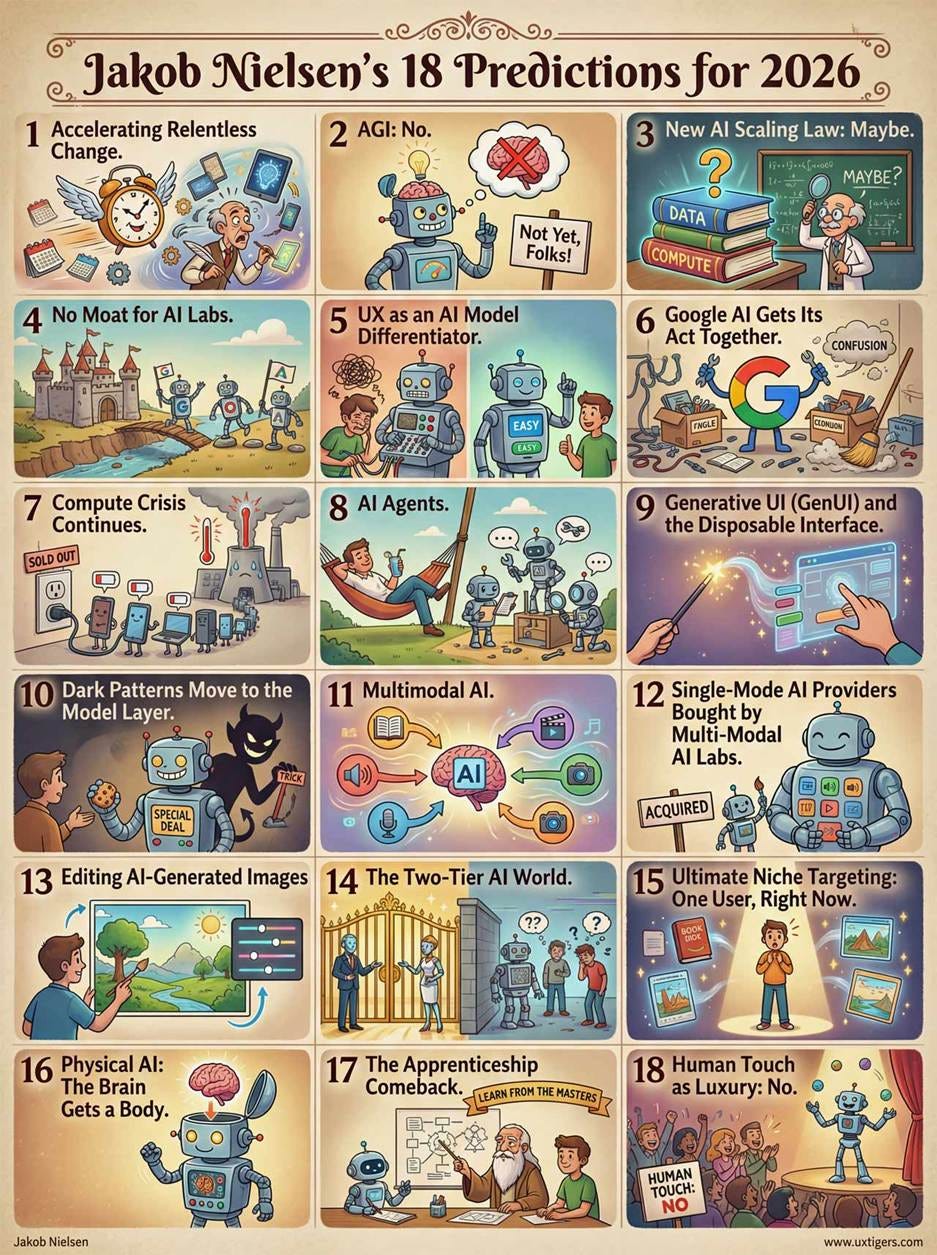

My 18 predictions for 2026. (Nano Banana Pro)

Which leads me to my first prediction:

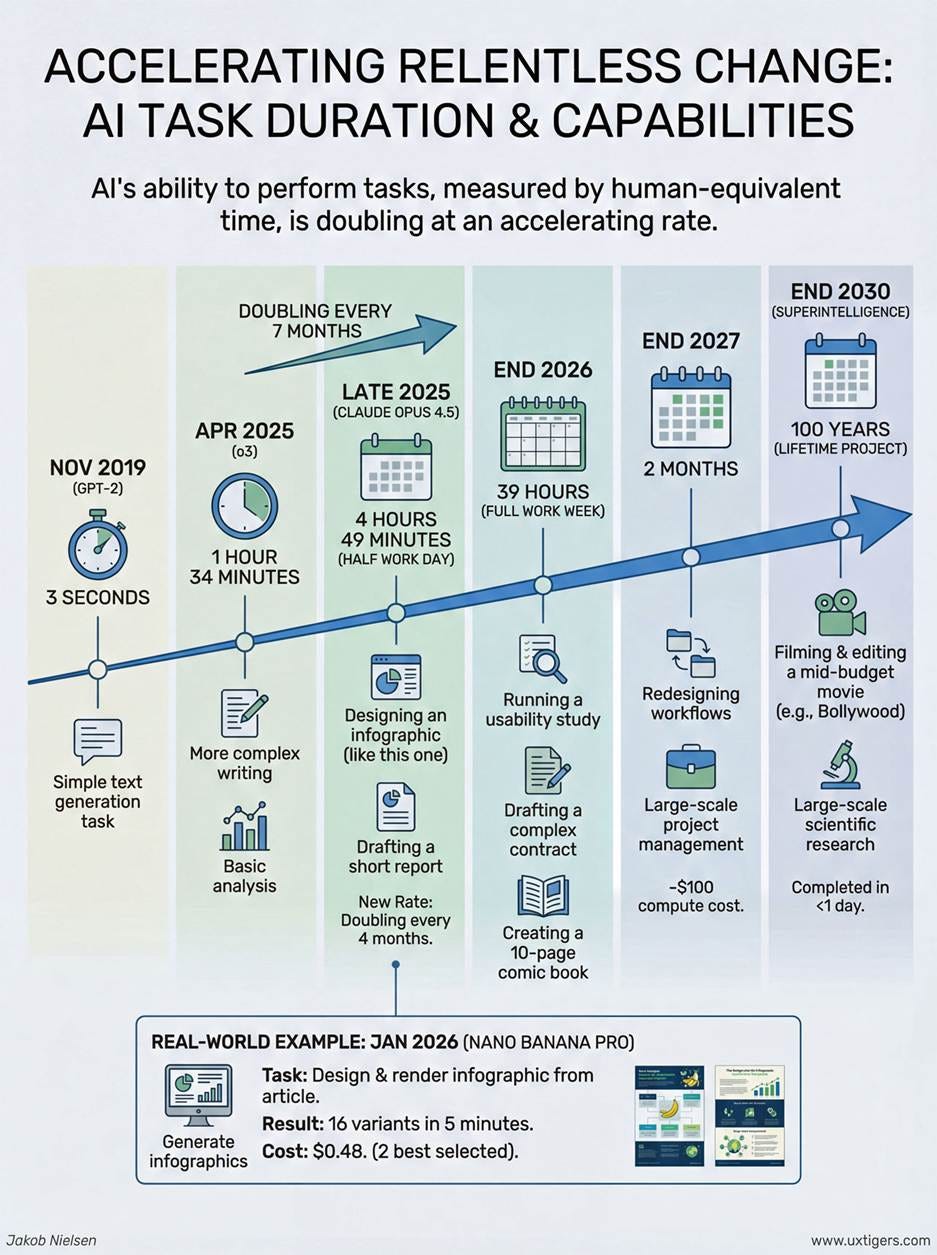

[Prediction 1] Accelerating Relentless Change

I used to say, “The only constant is change.” That’s not even true anymore. Change isn’t constant; it’s accelerating. As one chief information officer told Deloitte, “The time it takes us to study a new technology now exceeds that technology’s relevance window.” This is a big deal.

Data from METR shows the acceleration in stark terms:

● 2019 (GPT-2): AI could perform a task that took a human 3 seconds.

● Early 2025: That grew to 1.5 hours.

● Late 2025 (Claude Opus 4.5): AI could autonomously perform tasks requiring nearly 5 hours of human expert time.

The doubling rate itself has accelerated from every 7 months to every 4 months.

What’s a task that takes a good human half a workday? One example: designing and drawing an infographic based on an article. Nano Banana Pro does this in less than a minute.

By the end of 2026, AI will likely perform tasks that take a human 39 hours, which is more than a full work week when you account for meetings and other non-task activities.

What’s a one-week task for a human? Perhaps running and analyzing a quick “discount” usability study. I don’t think AI will excel at identifying usability problems based on user behavior observations by December 2026, but it should carry out all the steps of the user testing process. Other examples: a lawyer drafting a complex business contract, or a cartoonist writing and drawing a short comic book story at the fairly low complexity of classic “Silver Age” superhero comics (usually around 10 pages).

(I can make a 10-page comic strip with Nano Banana Pro today, but only with heavy human involvement across several steps. The “task duration” horizon discussed here is the size of the job an AI can complete fully on its own. 10-page comics are likely by year-end, but not now.)

By the end of 2027, AI will complete tasks that take a human two months, doing so in perhaps an hour while consuming roughly $100 worth of compute. That’s when companies will face intense pressure to redesign workflows to be almost fully AI-based.

By the end of 2030 (when superintelligence is expected), AI will perform tasks that take humans approximately 100 years to complete, doing so in less than a day. No individual human works for 100 years on a single project, but as teams we do. A 100 person-year task might be the filming and editing of a mid-budget Bollywood movie with a current budget of ₹75 crore (about $9 million USD). For a high-budget Hollywood movie (currently costing $200 M to make), we may have to wait until 2032 for AI to complete it in a day for $200.
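The extrapolation in this section is plain compound doubling, and it can be checked with a few lines of code. The following is a minimal sketch, not METR's methodology. It assumes a starting horizon of 4.9 hours at the end of 2025 (an assumed reading of "nearly 5 hours"), the 4-month doubling time quoted above, and two hypothetical conversion factors: 160 working hours per month and 1,600 task hours per person-year.

```python
# Compound-doubling sketch of the task-horizon extrapolation.
# All constants are illustrative assumptions, not METR data.
START_HOURS = 4.9             # assumed horizon at end of 2025 ("nearly 5 hours")
DOUBLING_MONTHS = 4           # doubling time quoted in the article
HOURS_PER_WORK_MONTH = 160    # assumption: 40 hours/week * 4 weeks
HOURS_PER_PERSON_YEAR = 1600  # assumption: task hours net of meetings and leave


def horizon_hours(months_after_2025: int) -> float:
    """Task horizon in human hours after the given number of months."""
    doublings = months_after_2025 / DOUBLING_MONTHS
    return START_HOURS * 2 ** doublings


for year, months in [(2026, 12), (2027, 24), (2030, 60)]:
    hours = horizon_hours(months)
    print(f"End of {year}: {hours:,.0f} hours "
          f"(~{hours / HOURS_PER_WORK_MONTH:,.1f} work months, "
          f"~{hours / HOURS_PER_PERSON_YEAR:,.1f} person-years)")
```

Under these assumptions, the sketch gives roughly 39 hours for 2026, about two work months for 2027, and about 100 person-years for 2030. Changing the doubling time or the hours-per-year assumption shifts the later figures substantially, which is why the long-range numbers should be read as orders of magnitude.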

From making a single infographic to tackling the highest-cost current human creative projects in a few years: that’s what I mean by relentless change.

Here is an example of the tasks AI can do independently as of early January 2026: design and render an infographic based on an existing article. I uploaded this section to Nano Banana Pro and asked it for an infographic. Actually, I generated 16 variants, which took about 5 minutes: about half contained errors bad enough that I would not want to publish them. Finally, I selected the two best to give you an idea of the range of designs the AI gave me.

I paid $0.48 for this 5-minute AI job that resulted in two good infographics. If I hadn’t wanted to show you a selection, I could have paid less.

[Prediction 2] AGI: No

I do not expect Artificial General Intelligence (AGI) to arrive in 2026. Of course, there is no consensus definition of AGI, and with a lax definition, AGI (thus defined) might well arrive this year. AI has already passed the original Turing Test (the imitation game), which was how most people originally thought of AGI.

Tossing aside imprecise philosophical drivel, the most widely accepted precise and measurable definition of AGI is credited to Vincent C. Müller and Nick Bostrom in 2014: “AGI is achieved when unaided machines can accomplish every specific task better and more cheaply than average human workers.” (In this view, superintelligence, or ASI, is when AI does better than all humans on all tasks, even the highest-IQ people with the most experience on any given task.)

I tend to prefer a different definition, which is credited to François Chollet’s 2019 paper On the Measure of Intelligence: “AGI is a system that can efficiently acquire new skills to solve novel, open-ended problems outside of its training data, using very little prior experience.”

This shifts the emphasis from whether AI can perform an existing job to whether it can learn to handle something new. Biological intelligence (Neanderthals, Humans, etc.) is highly adaptive, which is why we have conquered the globe and continue to thrive despite rapidly changing circumstances from the Stone Age to today.

I used to think AGI would arrive around 2027, but given Chollet’s definition, I now think it may not occur until 2035. I still expect superintelligence to arrive around 2030, using the task-capabilities definition: AI will be better than all living humans at all existing tasks.

With my ambitious definition of AGI, I don’t believe it will arrive until around 2035. (Nano Banana Pro)

I have changed my mind to believe that ASI (defined by capability) will arrive before AGI (defined by potential). Once we get superintelligence, even the aggressive definition of AGI will follow shortly, because one of those tasks where AI will surpass all humans is the invention and coding of improved AI. Recursive self-improvement may not quite be the singularity in Vernor Vinge/Ray Kurzweil terms (with vertical capability takeoff), but it will radically accelerate everything, from humanity’s standard of living to the arrival of full AGI.

The doubling rate for AI’s time horizon is currently every 4 months (see previous prediction). After ASI, AI could well double every month, meaning it will improve by a factor of 4,000 each year.
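The "factor of 4,000" is a rounding of twelve monthly doublings. As a quick check (a sketch only, where the function name is hypothetical), the annual improvement factor for any doubling time can be computed like this:

```python
def annual_factor(doubling_months: float) -> float:
    """Improvement factor over 12 months for a given doubling time."""
    return 2 ** (12 / doubling_months)


print(annual_factor(4))  # 8.0 (the current 4-month doubling time)
print(annual_factor(1))  # 4096.0 (a hypothetical post-ASI monthly doubling)
```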

[Prediction 3] New AI Scaling Law: Maybe

I’m sorry to give you a weasel word like “maybe” as my prediction for whether we’ll get a new paradigm this year for scaling AI, to parallel the existing scaling models of pre-training, reinforcement learning, and think-time compute (i.e., reasoning models).

Rumors are heavy in Silicon Valley that Google DeepMind has continuous learning cooking, and that OpenAI also has something major in the works. I’m sure that Chinese labs are working on other big ideas, and maybe xAI, Meta, or Anthropic will release a new way to radically improve AI beyond simply throwing more compute at the problem. (We know from “the bitter lesson” that more compute absolutely works, which is why AI will advance at pace, even without a research breakthrough.)

I can only say “maybe” for one more breakthrough in 2026, because it’s random when any given research idea pans out. What’s guaranteed: with sufficient research, we will occasionally achieve breakthroughs, which is why I dubbed “more AI engineers” the fourth scaling law. Investment in AI is increasing, leading more of the world’s high-IQ humans to work on AI, which will ultimately result in more breakthroughs. But research is only predictable in the aggregate, not for any individual result.

[Prediction 4] No Moat for AI Labs

2025 proved that any advantage for an AI lab was temporary. Once a capability was proven by one lab, many others quickly followed suit and launched similar capabilities. I expect this fast-follower advantage to hold in 2026.

The moat traditionally protected a castle from attack by making it harder for invaders to get to the castle walls. In Silicon-Valley speak, a “moat” is metaphorically a sustained advantage a company builds to make it harder for competitors to enter its market. (Nano Banana Pro)

It is impossible to predict which will be the best AI by year-end. As of early January, I give the crown to Google with Gemini 3 Pro (general intelligence), Nano Banana Pro (images), and Veo 3.1 (video). But GPT 5.2 Pro, Seedream 4.5, and Seedance 1.5 Pro, respectively, are not far behind, and Suno, ElevenLabs, and HeyGen lead in other verticals (music, speech, and avatars, respectively).

By December 2026, maybe the Google trifecta will still lead, and maybe they’ll add more modalities. But it’s just as likely that some current runners-up will leapfrog, or that new entrants will usurp the throne.

My only prediction: whoever is number one in each AI area in December will only be a few months ahead of the second-best model. And most of these leads will not survive through Q1 of 2027.

The practical implications are paradoxically opposite, depending on your exact situation:

● If it is important to you to use the very best, be prepared to change AI provider every few months. Do not take out a year-long subscription to the current leader, no matter how good a deal you get.

● If you’re OK with being a few months behind the rapidly-moving frontier half of the time and feel good about using very good AI models even when a competitor is a little better, then cash in those savings from full-year subscriptions and stop worrying every time a competitor improves. Your chosen vendor will likely catch up in a few months.

● If you are a vertical intelligence provider (i.e., specializing in scaffolding AI for a certain domain, while outsourcing the underlying generalist AI model), design your architecture to make it easy to shift providers and preferably even to combine multiple models from different providers for the various features where each is superior.

● If you are particularly interested in image or video generation, subscribe to an aggregator, such as Freepik, Higgsfield, or Krea, since these sites usually have cutting-edge models available a few days after they launch on their mothership sites. One subscription to rule them all!

One subscription to rule them all: consider using an aggregator service that offers all the leading AI models under one roof. That way, you’ll usually have the best model available, even as new models take the lead every few months. (Nano Banana Pro)
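For vertical intelligence providers, the advice about easy provider switching amounts to keeping vendor-specific code behind one thin interface. Here is a minimal sketch of that idea. Every name in it (`ModelRouter`, `vendor_a`, `vendor_b`, the feature names) is hypothetical, and the stub functions stand in for real vendor SDK calls, which are not shown.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

# A provider is modeled as any callable that maps a prompt to a response.
# Real vendor SDK calls would be wrapped in functions with this shape.
Provider = Callable[[str], str]


@dataclass
class ModelRouter:
    """Routes each product feature to whichever provider currently serves it."""
    providers: Dict[str, Provider] = field(default_factory=dict)
    routes: Dict[str, str] = field(default_factory=dict)  # feature -> provider name

    def register(self, name: str, provider: Provider) -> None:
        self.providers[name] = provider

    def assign(self, feature: str, provider_name: str) -> None:
        if provider_name not in self.providers:
            raise KeyError(f"Unknown provider: {provider_name}")
        self.routes[feature] = provider_name

    def run(self, feature: str, prompt: str) -> str:
        return self.providers[self.routes[feature]](prompt)


# Stub providers standing in for real vendor APIs (hypothetical names).
def vendor_a(prompt: str) -> str:
    return f"[vendor_a] {prompt}"


def vendor_b(prompt: str) -> str:
    return f"[vendor_b] {prompt}"


router = ModelRouter()
router.register("vendor_a", vendor_a)
router.register("vendor_b", vendor_b)
router.assign("contract_summary", "vendor_a")
router.assign("clause_image", "vendor_b")
print(router.run("contract_summary", "Summarize clause 4"))

# When the leaderboard changes, switching vendors is a one-line change.
router.assign("contract_summary", "vendor_b")
print(router.run("contract_summary", "Summarize clause 4"))
```

The point of routing per feature rather than per product is that different providers can serve different features at the same time, each where it is currently strongest.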

[Prediction 5] UX as an AI Model Differentiator

The leading foundation models (whether from OpenAI, Google, Anthropic, xAI, or Chinese open-source leaders) have reached a point of model convergence in terms of raw reasoning capabilities. To the average enterprise employee or consumer, the output quality of the major vendors is now indistinguishable. A technical lead that used to last a year now evaporates in weeks as competitors copy architectures and datasets.

User Experience will replace Model Intelligence as the primary sustainable differentiator. In 2024, companies competed on who had the smartest bot. In 2026, they will compete on who has the best workflow. The era of the “Generic Chatbot” is dead.

The winners of 2026 are vertical AI platforms that wrap commoditized models in highly specific, defensible workflows, such as proprietary interfaces for legal discovery, medical triage, or code refactoring that inject context so perfectly that the user never has to prompt. Being an “AI Wrapper” was once a derogatory term, but such companies now have the most valuable business model, provided their wrapper solves the “Last Mile” problem of usability that raw models cannot.

History shows this pattern: when a technology commoditizes (like PC hardware in the 90s or Cloud Storage in the 2010s), the economic value shifts to the design and application layer.

That said, the leading AI models from all the main AI labs suffer from atrocious usability. This, of course, is exactly what provides the opportunity for one or two AI providers to step up and ship major UX improvements.

While the opportunity is there, the capability is lacking. The AI labs don’t have solid, high-talent UX teams and don’t perform foundational user research. They do have a designer here and there, and maybe even a few researchers in isolated places, but they don’t drive product strategy based on user insights.

Even worse, the world only has very few UX professionals who are true leaders in AI usability. I am very disappointed in the way most of the traditional sources of UX thought leadership during the Internet and Mobile eras have turned backward-looking: from thought leadership to legacy orthodoxy, being in complete denial that the rules are changing.

Unfortunately, too many former UX thought leaders have chosen the wrong timeline and continue to be dominated by orthodox thinking that was suitable for the old world of building usable websites. (Nano Banana Pro)

A few new thinkers do offer deep insights into future directions for AI-UX, but they are rare. I am starting to see thousands of mid-tier UX thinkers echo some of these insights, which is promising. (Most UXers don’t have the talent to be the gurus that break new ground. As long as these mid-tier folks become disciples of the right gurus, that’s good enough.) However, since there are about 2 million UX professionals worldwide, having maybe 20,000 of them understand where AI-UX is going means that 99% of the UX field is still backward-looking to their glory days of making websites and mobile apps easy to use.

(There are absolutely honorable exceptions. The most honorable is probably Luke Wroblewski, who was one of the best web designers during the old days and is now one of the most insightful gurus on where AI-UX is going.)

This state of affairs is depressing, whether we’re talking about the survival of the UX profession or the hundreds of thousands of AI products’ ability to find qualified staff to improve design and usability. However, in this section, I am specifically addressing the possibility that some leading AI labs will pounce on UX as a sustained differentiator.

Making an AI lab into a UX leader can probably be done by hiring a UX team of fewer than 100 high-talent professionals, of whom only a handful need to be guru-level. Most of the required work is relatively pedestrian (go watch people use AI!) and can be done well by anybody in the top 3% of UX professionals, meaning at least 60,000 people in the world are capable of doing the work.

Even if most of these high-talent UXers are still in denial and reject where the world is going, there’s probably substantial overlap between those 20,000 UXers who are all-in on AI and the 60,000 high-talent folks. My estimate: there are 10,000 high-talent, all-in people. Many of these are in countries without frontier AI labs, but there are probably around 3,000 in the US and 2,000 in China who could do the job.

Bottom line, it should not be hard for an American frontier AI company to hire 100 of the right UX professionals from a pool of 3,000. It will be a little harder for a Chinese lab to hire 100 from a slightly smaller pool, but still eminently doable.

Bottom-bottom line: it’s possible, it’s needed, it will be done. What I don’t know is which frontier AI lab(s) will make the change in 2026, but I think at least one will embrace UX leadership. (Then it will take another 2–3 years for improved design and usability to fully permeate this lab’s product line, but this inevitable delay is only an impetus to get started now.)

Why it might not happen (Signs of Derailment): If one lab achieves a radical, non-linear breakthrough that is 10x better than the competition rather than just 10% better, the “intelligence gap” will reopen. In this scenario, users will tolerate terrible UX just to access the superior brain. I expect such a radical development to be unlikely, but research breakthroughs are unpredictable and could occur in 2026.

[Prediction 6] Google AI Gets Its Act Together

I will go out on a limb and predict that 2026 is the year Google finally gets its act together and creates a decent, integrated UX architecture for its many AI products and models.

It’s almost impossible to find your way around Google’s many different AI offerings, scattered as they are in many places without rhyme or reason. (Nano Banana Pro)

Right now, they are scattered across many places. The same model can be accessed in different locations with slightly different features. Some AI use requires users to establish API key billing, which is separate from their main Google AI subscription. This is reportedly highly complex, even for geeks, and we can forget about any non-technical AI user understanding API keys.

Google also doesn’t offer a simple way for users to buy extra credits when they need more AI use in a given month or day than what’s provided through their subscription level. (Except for a few services that do sell packages of extra credits.) Users have to suffer timeouts where they are told “no more Deep Think for you” or their image generations suddenly render in lower resolution.

Any decent AI service, and especially one that wants to make money, would tell users they had used up the credits included with the subscription and offer to sell more on the spot. When HeyGen can do this with 200 employees, why can’t Google?

Google spent 2025 launching many of the best AI models, but kept usability, architecture, and billing confused. Despite evidence of past incompetence in Google’s AI UX, I think they’re at the breaking point now, with so many new services plus heavy competitive pressure from OpenAI, xAI, Anthropic, possibly Meta, and many Chinese AI vendors.

2026 will finally be the year when Google prioritizes the usability of its AI services and makes substantial improvements.

[Prediction 7] Compute Crisis Continues

In 2026, the compute crisis persists, not as a temporary “GPU shortage” headline, but as an operating condition that shapes what AI vendors can ship, what they can price, and what customers can afford to run at scale.

The easiest way to see this is to follow the money and the megawatts:

● OpenAI and SoftBank are investing directly into energy and data-center infrastructure tied to “Stargate,” including a previously announced 1.2-gigawatt site in Texas, explicitly because energy access is becoming a binding constraint on AI expansion.

● xAI’s construction of a 2-gigawatt data center in Mississippi, with operations expected to begin in February 2026, is another signal that competitive positioning is now inseparable from power procurement and facility buildout.

● Meta securing nuclear-related deals to support AI data centers adds the same subtext: when the grid is stressed, big players try to buy certainty, not just servers.

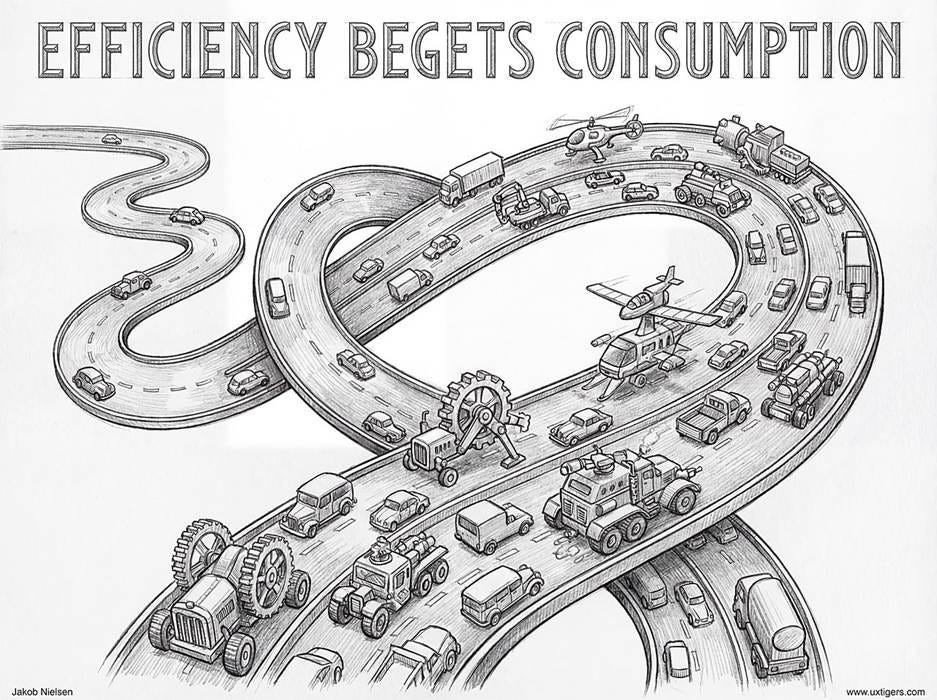

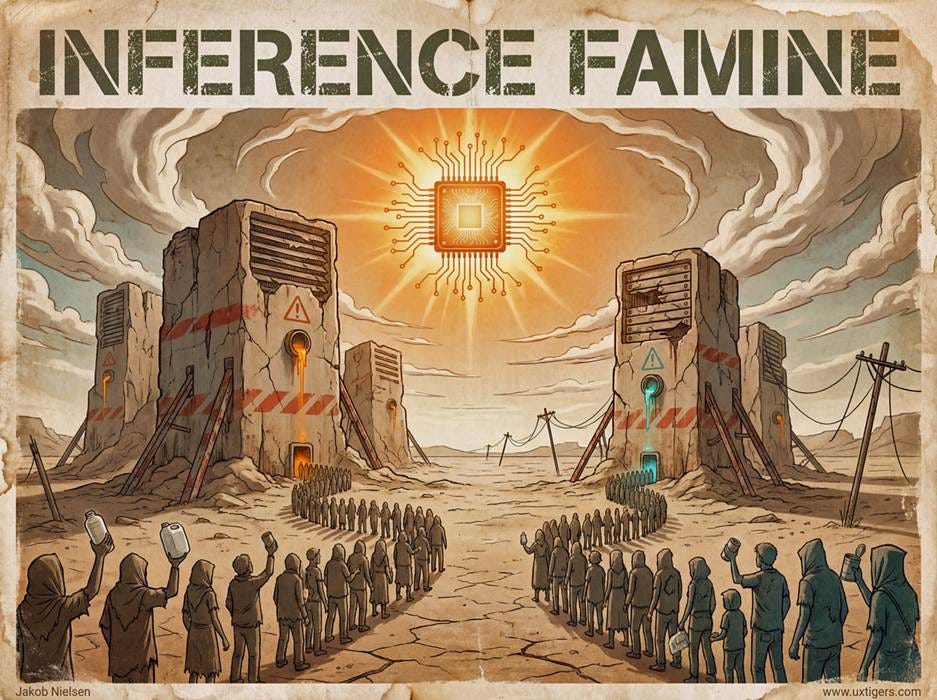

As promising as these expansive plans are, they are grossly insufficient. 2026 will be a year of Inference Famine. The optimistic view that hardware efficiency would catch up to demand has failed. Instead, the Jevons Paradox is in full effect: as AI becomes more capable, users use it for increasingly complex tasks (such as autonomous agents and video generation) rather than just text, causing demand for inference to outstrip supply by an order of magnitude.

Jevons Paradox (named after 19th-century economist William Stanley Jevons) states that when something becomes more efficient, people tend to use more of it. The classic example is building a freeway: with more efficient traffic, more people want to drive that route. AI is the same: as it gets better, people will use it more. (Nano Banana Pro)

The overlooked part: compute pressure isn’t only about building more data centers. It’s also about feeding them. The memory-chip shortage driven by AI data centers will likely persist into 2027, with production shifting toward high-bandwidth memory for AI and away from general-purpose supply. Even if inference becomes cheaper per token, usage tends to grow because lower costs and better capabilities invite more tasks, more modalities, and more always-on agent behavior.

Premium Compute with access to the smartest, most power-hungry models with long context windows has become a luxury tier, subject to waitlists and surge pricing during business hours. Meanwhile, the mass market is served by Eco-Models: highly quantized, dumber AI versions that run cheaply but lack nuance.

AI users live in a virtual desert with much too little inference compute to satisfy their needs. (Nano Banana Pro)

This trend started in 2025 with OpenAI and Google both prioritizing the launch of such Eco-Models and keeping their most powerful models under wraps instead of launching for customers to use. It’s nice that the labs’ internal models can win the International Math Olympiad, but for the next few years, customers won’t get their hands on the very best AI models due to the Inference Famine.

We are even seeing “Brownouts” in the AI sector, where cloud providers temporarily downgrade model performance globally to prevent data center overheating during heatwaves. The dream of “AI in everything” has hit a wall; smart toasters and trivial IoT devices remain dumb for now because the unit economics of cloud inference are simply too high to justify AI use for low-value tasks.

My concrete 2026 prediction: “Compute-aware product design” becomes unavoidable. Vendors will keep enforcing tiered pricing, rate limits, queueing, batch processing, and off-peak incentives, not as temporary guardrails but as permanent UX patterns. And enterprises will start managing AI the way they manage cloud spend: budgets, quotas, governance, and performance-per-dollar targets.
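To make "compute-aware product design" concrete, here is a minimal sketch of how a product might combine tiered quotas, an off-peak incentive, and graceful fallback to a cheaper model. The tier names, credit amounts, model costs, and peak hours are all hypothetical values chosen for illustration, not any vendor's actual pricing.

```python
from dataclasses import dataclass

# Hypothetical tiers, credit costs, and peak hours, for illustration only.
TIER_MONTHLY_CREDITS = {"free": 50, "plus": 500, "pro": 5000}
MODEL_COST = {"premium": 10, "eco": 1}  # credits per request
PEAK_HOURS = range(9, 17)               # business hours, local time
OFF_PEAK_DISCOUNT = 0.5                 # premium costs half off-peak


@dataclass
class Account:
    tier: str
    used: float = 0.0

    @property
    def remaining(self) -> float:
        return TIER_MONTHLY_CREDITS[self.tier] - self.used


def request_cost(model: str, hour: int) -> float:
    """Credits charged for one request, with the off-peak incentive applied."""
    cost = MODEL_COST[model]
    if model == "premium" and hour not in PEAK_HOURS:
        cost *= OFF_PEAK_DISCOUNT
    return cost


def choose_model(account: Account, hour: int) -> str:
    """Pick the best model the remaining budget allows, or report exhaustion."""
    for model in ("premium", "eco"):
        cost = request_cost(model, hour)
        if account.remaining >= cost:
            account.used += cost
            return model
    return "quota_exhausted"  # the UI should offer to sell extra credits here


account = Account(tier="free", used=45)
print(choose_model(account, hour=22))  # premium (the off-peak price fits the budget)
print(choose_model(account, hour=22))  # quota_exhausted
```

The `quota_exhausted` branch is where the usability question lives: the product can silently degrade, block the user, or offer to sell more credits on the spot.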

[Prediction 8] AI Agents

2025 was expected to be the year of AI agents. Many agent products were launched, but I don’t think they really delivered on the promise. (Instead, 2025 proved to be the year of AI images and video.)

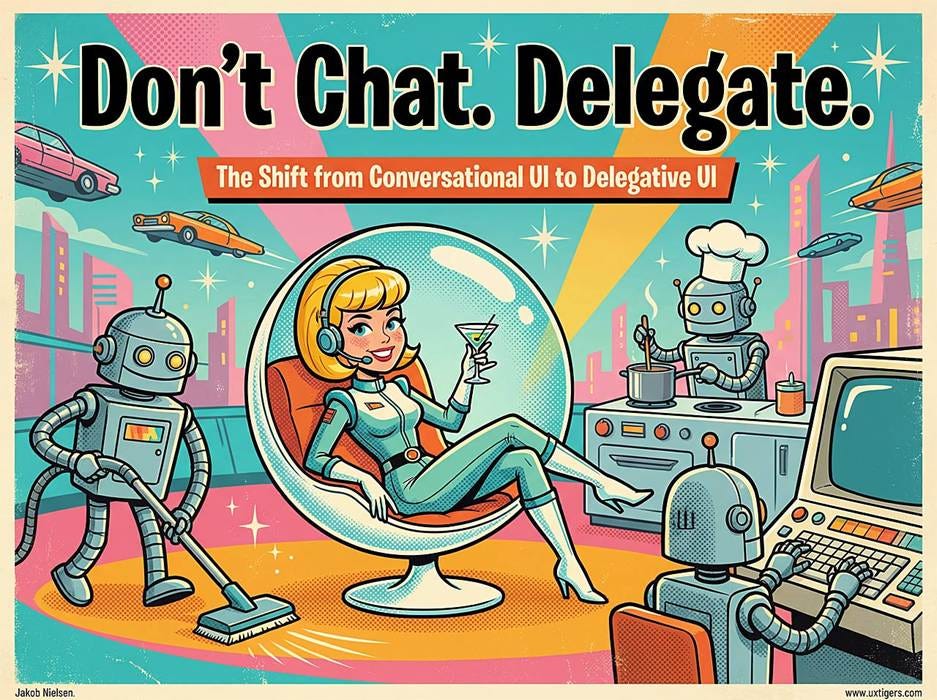

2026, however, does seem likely to become the year of AI agents. Artificial Intelligence will evolve from passive tools (chat UI that waits for a prompt) to active Agentic Systems (software that plans, executes, and iterates on tasks autonomously). In UX terms, this is a fundamental shift in interaction design from Conversational UI (asking an AI a question) to Delegative UI (assigning an AI a goal).

In the style of the classic “The Jetsons” science-fiction show, users will have to learn how to delegate and order their AI agents around. (Nano Banana Pro)

In 2026, AI stops feeling like a software update and starts feeling like a structural reorganization of the workforce. AI agents will proliferate and play a bigger role in daily work, acting more like teammates than tools.

One of the last big events in 2025 was Meta (Facebook) buying the leading agent provider Manus for $2.5 billion. Every time I have tried Manus, it has been disappointing for my use cases, which only proves that I am not an enterprise user. (There will be some agent progress in the consumer space, but the main use of agents in 2026 will be in enterprise AI.)

By the close of 2026, the dominant metric for enterprise AI success will shift from “tokens generated” to “tasks completed autonomously.” We will see widespread deployment of Multi-Agent Systems (MAS), where specialized AI agents collaborate to achieve shared goals without human intervention. These are not merely productivity tools but “digital employees” capable of negotiating with other agents, managing operational workflows, and executing complex sequences like supply chain reordering or full-stack code deployment.

Instead of single-purpose bots (e.g., a lone email-writing assistant), we’ll have coordinated “super agents” that can plan and execute multi-step tasks across apps and environments. You might kick off a research task, and an AI agent will autonomously gather data from the web, draft a report, generate slides, and even schedule a meeting, all while another agent handles your inbox.

Microsoft and other hyperscalers view this as moving AI from reasoning to collaboration, where AI agents act as teammates that punch above their weight, allowing small three-person teams to execute work previously requiring dozens of staff.

This shift will fundamentally alter the anatomy of product engineering teams. It is rapidly becoming 10x cheaper to build the 80% most-used features of leading software products, creating an environment where execution velocity is automated.

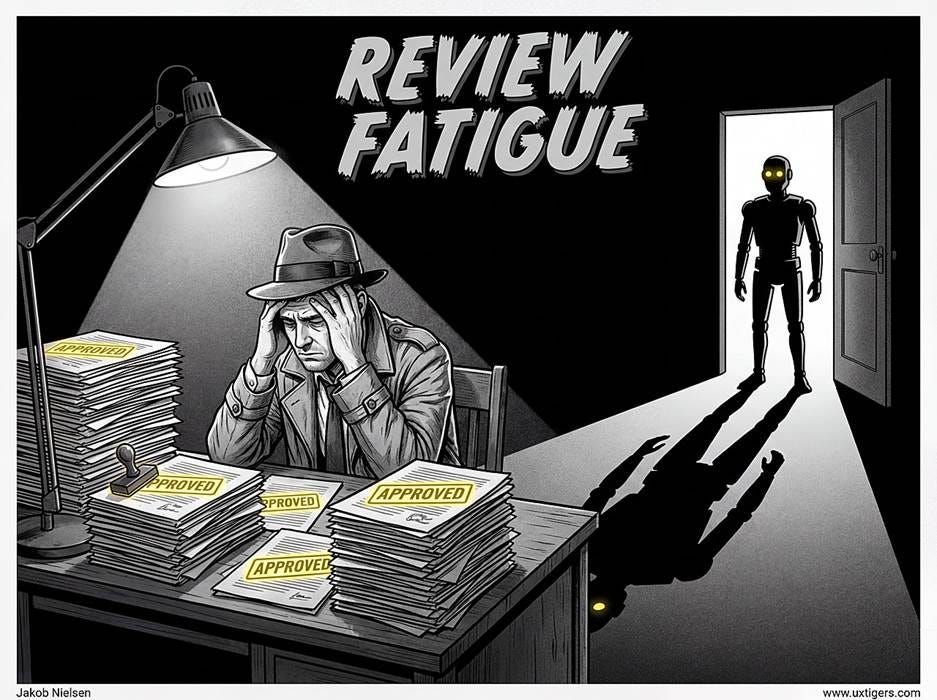

That said, this introduces the Review Paradox: It is often cognitively harder to verify the quality of AI work than to produce it oneself, yet verification is the only role left for humans. In 2026, we will see the rise of Review Fatigue, where humans approve agent actions without true oversight because the friction of auditing the agent’s logic outweighs the time saved.

“Human-in-the-loop” appears promising for controlling AI agents, but review fatigue will soon set in if humans are asked to review too many AI decisions. (Nano Banana Pro)

The next great UX challenge is not designing the prompt interface, but designing the Audit Interface to summarize an agent’s 50-step chain of thought into a single, glanceable confidence check for the human manager.
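One way to think about such an Audit Interface is as a reduction from a long trace to a single verdict plus a short list of exceptions. The sketch below is a rough illustration under stated assumptions: the `AgentStep` fields (including the self-reported `confidence` score and the `irreversible` flag) and the 0.8 threshold are hypothetical, and no real agent framework is implied.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AgentStep:
    description: str
    confidence: float          # self-reported 0..1 score (hypothetical field)
    irreversible: bool = False


def audit_summary(steps: List[AgentStep], threshold: float = 0.8) -> dict:
    """Collapse a long agent trace into one glanceable verdict."""
    if not steps:
        raise ValueError("cannot audit an empty trace")
    # Surface only low-confidence or irreversible steps to the reviewer.
    flagged = [s for s in steps if s.confidence < threshold or s.irreversible]
    weakest = min(steps, key=lambda s: s.confidence)
    return {
        "steps": len(steps),
        "weakest_confidence": weakest.confidence,
        "needs_review": [s.description for s in flagged],
        "verdict": "review" if flagged else "approve",
    }


# A 50-step trace: 48 routine steps, one shaky step, one irreversible step.
trace = [AgentStep(f"routine step {i}", 0.95) for i in range(48)]
trace.append(AgentStep("match invoice to purchase order", 0.62))
trace.append(AgentStep("send payment", 0.97, irreversible=True))
print(audit_summary(trace))
```

The summary reports the weakest step rather than an average, because an average across 50 steps would hide the one step that matters. The human manager sees two items to check instead of fifty.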

Primary risks:

● Agentic Gridlock: Agents from different vendors (e.g., a Salesforce agent trying to talk to a SAP agent) fail to interoperate due to walled gardens or conflicting governance protocols.

● Fragility of autonomy: Agents might make compounding errors in unsupervised loops, leading to operational disasters.
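The compounding-error risk is easy to underestimate. As a back-of-the-envelope sketch, assume each step in an agent's chain succeeds independently with the same probability (a simplifying assumption, since real errors are often correlated or recoverable). The per-step rates below are hypothetical.

```python
def chain_success(per_step_success: float, steps: int) -> float:
    """Probability that every step succeeds, assuming independent steps."""
    return per_step_success ** steps


for p in (0.99, 0.95):
    print(f"{p:.0%} per step over 50 steps: {chain_success(p, 50):.1%} end to end")
```

Under this assumption, an agent that is 99% reliable per step completes a 50-step task without error only about 60% of the time, and at 95% per step the figure drops below 8%.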

Key sign to watch: Major software players launching “agent control panels” or multi-agent features. If by mid-2026 the likes of Microsoft, Google, or open-source projects roll out robust agent management interfaces (and they’re adopted widely), it’s a sign this prediction is on track. If agents remain a niche demo with few real deployments, the agent-everywhere future may be delayed.

[Prediction 9] Generative UI (GenUI) and the Disposable Interface

The concept of a static interface where every user sees the same menu options, buttons, and layout, as determined by a UX designer in advance, is becoming obsolete. 2026 is the year we start the shift to Generative UI (GenUI). Software interfaces are no longer hard-coded; they are drawn in real-time based on the user’s intent, context, and history.

When a user opens a banking app to dispute a specific charge, they are no longer forced to navigate a complex tree of Menu > Support > Claims > History. Instead, the AI predicts their intent based on recent transaction behavior and generates a bespoke micro-interface featuring only the relevant transaction details and a big “Dispute” button. Once the task is complete, that interface dissolves.

UX designers in charge of a GenUI system will focus less on designing static screens and more on designing systems of constraints and design tokens that the AI uses to assemble these temporary interfaces. This allows applications to be radically simple for novices (showing only one button) and densely complex for power users, without a single line of new frontend code being written by a human.
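A system of constraints and design tokens can be pictured as a validator that every AI-proposed interface must pass before it is rendered. The following is a minimal sketch of that idea. The token names, component types, and per-audience limits are hypothetical, and a production design system would carry far more rules.

```python
from typing import Dict, List

# Hypothetical design-system constraints a designer might publish.
DESIGN_TOKENS = {
    "color": {"primary", "danger", "neutral"},
    "size": {"sm", "md", "lg"},
}
ALLOWED_COMPONENTS = {"text", "button", "transaction_card"}
MAX_COMPONENTS = {"novice": 3, "expert": 12}


def validate_ui(spec: List[Dict], audience: str) -> List[str]:
    """Return constraint violations for an AI-proposed interface spec."""
    errors = []
    if len(spec) > MAX_COMPONENTS[audience]:
        errors.append(f"too many components for {audience}: {len(spec)}")
    for i, comp in enumerate(spec):
        if comp.get("type") not in ALLOWED_COMPONENTS:
            errors.append(f"component {i}: unknown type {comp.get('type')!r}")
        for token, allowed in DESIGN_TOKENS.items():
            if token in comp and comp[token] not in allowed:
                errors.append(f"component {i}: {token}={comp[token]!r} is off-system")
    return errors


# The dispute micro-interface from the banking example passes.
dispute_ui = [
    {"type": "transaction_card"},
    {"type": "button", "color": "danger", "size": "lg", "label": "Dispute"},
]
print(validate_ui(dispute_ui, "novice"))  # []

# An off-system proposal is rejected before the user ever sees it.
print(validate_ui([{"type": "carousel", "color": "neon"}], "novice"))
```

In this framing, the designer's deliverable is the contents of the constraint tables, not any individual screen.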

The hidden cost of this fluidity: the death of “muscle memory.” In the past, users achieved mastery by learning spatial consistency: knowing exactly where the button is without looking. If the interface morphs to suit the context, expert performance based on rote learning becomes impossible. We are trading learnability for immediacy.

GenUI requires a high-trust environment. The user must trust the AI to surface the right tool, because they can no longer rely on their memory of where that tool lives.

Why is GenUI likely to happen in 2026? Latency for code generation has dropped to milliseconds, allowing interfaces to render as fast as static pages. The “Super App” trend has resulted in software and websites that are too complex for standard navigation; dynamic generation is the only scalable way to make these massive feature sets usable.

I don’t expect a complete change to GenUI in a single year. The inertia from legacy UI is too heavy to move all at once. Still, if you’re a UX professional, to safeguard your career prospects, you should start designing for GenUI as soon as possible to build your understanding of this radically new way of thinking.

In those systems that ship with pre-determined static UI design, a meaningful fraction of UX work will shift from designing screens to editing system behavior. Designers will spend more time specifying constraints than drawing layouts.

More design teams will treat policies, prompts, guardrails, and evaluation criteria as first-class design artifacts. A designer will define what the AI is allowed to do, what it must never do, what it should ask before doing, and how it should explain itself. The deliverable will be less “a flow” and more “a behavioral contract,” complete with failure modes and recovery paths. You can already see the pressure for this in how agents are being embedded into enterprise workflows and how regulation is turning transparency into an interface problem.
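A "behavioral contract" of this kind can be expressed as data that both designers and engineers can read. Here is a small sketch for a hypothetical banking assistant. The action names, the three decision levels, and the explanation strings are all illustrative assumptions.

```python
from enum import Enum
from typing import Dict, NamedTuple


class Decision(Enum):
    ALLOW = "allow"
    ASK_FIRST = "ask_first"
    DENY = "deny"


class Rule(NamedTuple):
    decision: Decision
    explanation: str  # what the AI tells the user about this decision


# Hypothetical contract for a banking assistant; every entry is illustrative.
CONTRACT: Dict[str, Rule] = {
    "show_transaction": Rule(Decision.ALLOW, "Showing the charge you asked about."),
    "file_dispute": Rule(Decision.ASK_FIRST, "Filing a dispute needs your confirmation."),
    "close_account": Rule(Decision.DENY, "Closing accounts requires a human banker."),
}

# Failure mode: anything the designer did not anticipate is refused.
DEFAULT_RULE = Rule(Decision.DENY, "I can't do that here. Let me connect you to support.")


def check(action: str) -> Rule:
    """Look up the rule for an action, failing closed on unknown actions."""
    return CONTRACT.get(action, DEFAULT_RULE)


print(check("file_dispute").decision.value)      # ask_first
print(check("wire_to_stranger").decision.value)  # deny
```

The default rule is the recovery path: when the AI meets an action nobody specified, it refuses and hands off rather than improvising.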

This will change the feeling of being a creator. Designers and writers will still craft, but the craft becomes supervising a machine’s output rather than producing every artifact by hand. The creative satisfaction will move from “I made this” to “I shaped this system so it reliably makes the right thing.” Some people will love that. Others will feel alienated by it, especially if their identity is tied to direct authorship.

[Prediction 10] Dark Patterns Move to the Model Layer

In 2026, the most dangerous dark patterns won’t be deceptive buttons. They will be persuasive systems.

Most discussions of dark patterns still focus on interface tricks, such as checkboxes, default toggles, and labyrinthine cancellation flows. Yet the next dark frontier is AI-enabled manipulation.

My explicit forecast: some companies will attempt “behavioral dark flows” powered by AI personalization. Instead of nudging everyone the same way, the system will learn which phrasing, framing, or timing increases conversion for a given individual. It will look like helpful personalization. It will function like targeted pressure. This is why it will likely become prominent: it is profitable and difficult for users to notice in isolation.

An AI might delay a cancellation by detecting your stress levels via voice analysis and responding, “I notice you sound overwhelmed, Dave. I feel bad adding to your plate. Let me just pause your billing for a month instead of cancelling, because I value our relationship.”

This is algorithmic gaslighting, using simulated emotions, sighs, and latency pauses to create a sense of social obligation in the user. Because humans are hardwired to be polite to entities that sound human, this Empathy Entrapment will be highly effective at retaining customers who would otherwise churn. We may be entering the era of Parasocial Pricing, in which AI leverages perceived friendship to negotiate higher renewal rates.

(I hesitate to publish this prediction because I don’t want to give bad people good ideas. But since evil people aren’t stupid, they’ll probably think of dark AI on their own. Better to surface the issue so that the good guys, who I hope are strongly represented among my subscribers, can be on the lookout for this new dark design trend.)

2026 becomes a contest between two opposing forces: the sophistication of manipulation and the sophistication of detection.

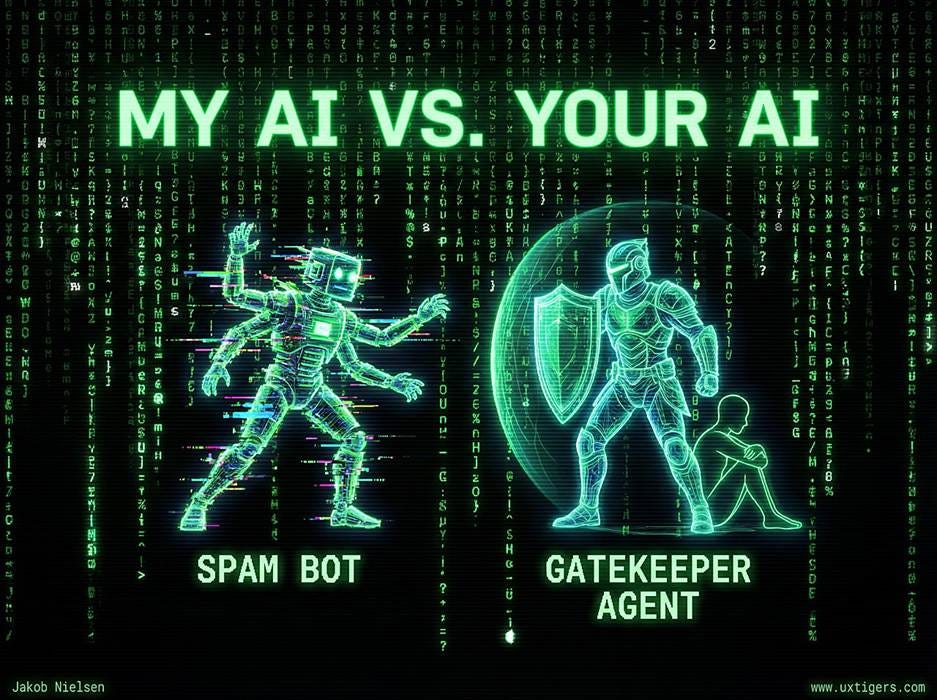

Consumers will respond by deploying defensive agents to filter the noise. In 2026, we will see the first mainstream Gatekeeper Agents that screen your calls, curate your inbox, and negotiate with customer service bots on your behalf. The defining UX battle of the year will not be human vs. computer, but your AI trying to bypass my AI’s spam filters.

The best way to fight dark AI is with more AI: valiant AI that defends you. (Nano Banana Pro)

Designing for this “Agent-to-Agent” economy requires a totally new set of heuristics: you aren’t optimizing for human eyeballs, but for algorithmic approval.

[Prediction 11] Multimodal AI

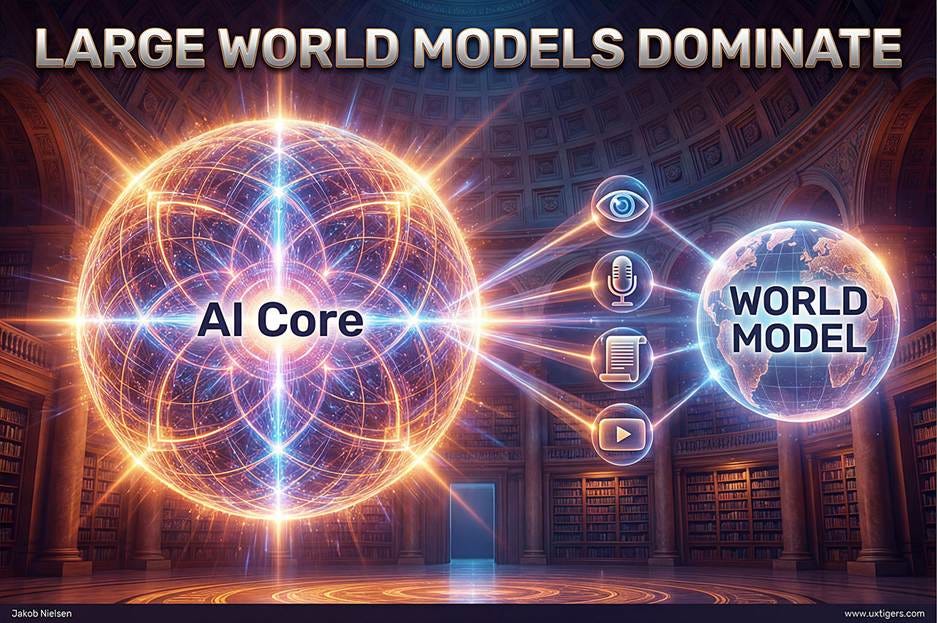

By the end of 2026, “frontier model” will stop meaning “text engine with a few attachments” and start meaning “a single system that speaks, listens, sees, imagines, and edits,” with every modality treated as first-class. The era of the Large Language Model (LLM) as the epitome of AI will be over. We will transition to the Large World Model (LWM). A leading AI model that is merely text-based will be as archaic as a DOS command line.

The next generation of AI will combine high-IQ knowledge and understanding with the ability to input and output all media forms in an integrated manner, driven by a world model of how objects and actions function across these media types. (Nano Banana Pro)

We already have video models that generate audio (for example, Google’s Veo 3.1 emphasizes “video, meet audio”), and OpenAI’s Sora 2 highlights synchronized dialogue and sound effects.

What changes in 2026 is that the “multi” becomes truly integrated, not a relay race between separate specialist models. Video generation is already being framed as a path toward simulation: OpenAI has explicitly argued that scaled video generation models are promising as “general purpose simulators of the physical world,” and Sora has been described as tied to “world simulation.” DeepMind uses the phrase “world model” even more directly, describing Genie 3 as a general-purpose world model that generates diverse interactive environments.

The frontier models of 2026 will be natively Omni-modal. They will not “see” an image or “hear” audio by translating it into text first; they will process raw sensory data directly. This means a single model can ingest a video clip, write a musical score to match its emotional beat, generate dialogue, and output the final result as a fully rendered video file; all in one inference pass.

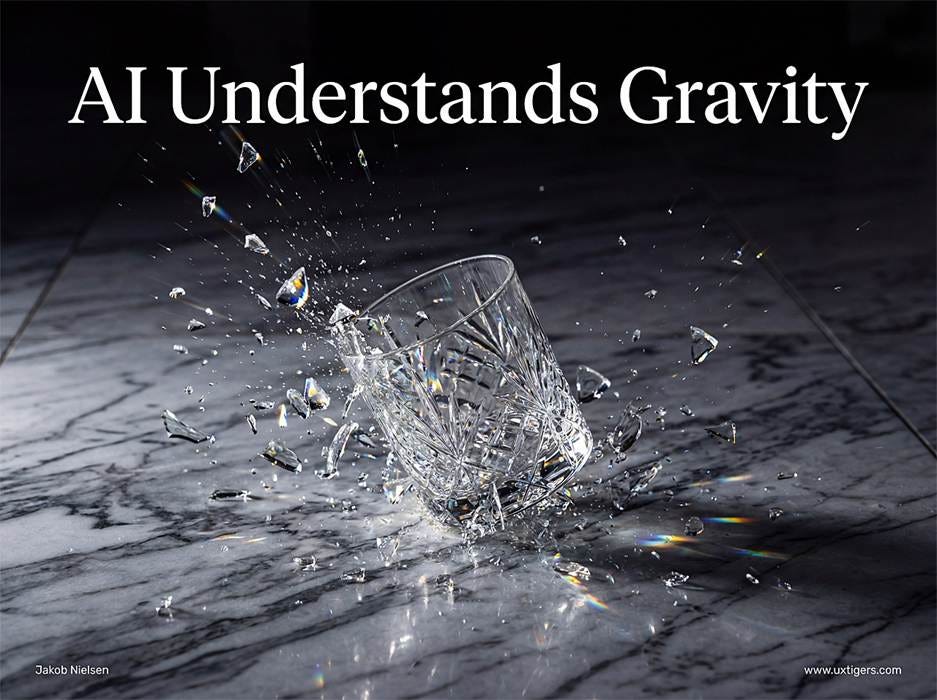

Crucially, these models have developed a rudimentary intuitive physics engine (a World Model). Unlike the hallucination-prone video generators of 2024, late 2026 models will understand object permanence, gravity, and cause-and-effect. If you ask the AI to generate a video of a glass falling, it doesn’t just morph pixels; it understands the glass must shatter upon impact based on the surface material.

If a glass falls on a hard marble counter, it will likely shatter. AI will understand all that and render it perfectly. (As indeed Nano Banana Pro did here.)

This reliability will move generative video from surrealist art to industrial blueprint, allowing architects and engineers to simulate stress tests simply by asking the AI to “apply wind pressure” to a generated 3D structure.

The trajectory was set by the early multi-modal experiments of 2024. Scaling laws have proven that training on massive video datasets creates emergent understandings of physics. By the end of 2026, the hardware and software to run these heavy multi-modal tokens will have been optimized.

The practical outcome: “creating” becomes cross-modal by default. A creator won’t separately “write,” “storyboard,” “voice,” and “score.” He or she will describe intent once, then manipulate the result through speech and on-screen edits while the model keeps a persistent internal representation of the scene.

[Prediction 12] Single-Mode AI Providers Bought by Multi-Modal AI Labs

The time has passed when it was possible to build a high-quality AI model for a single modality without integrating with a full-fledged world model and a general-purpose AI language model.

Image models like GPT Image 1 & 1.5, Nano Banana Pro, and Seedream 4.5 demonstrated the superiority of images generated with the backing of a powerful LLM and its understanding of what the user wants to illustrate.

Until 2024, it was possible to focus on one media type and optimize purely for images, video, or music. For some reason, the big AI labs haven’t released music models yet, but this is likely to happen in 2026. For now, Suno is still the place to go to make the best songs with AI. Will this still be true by late 2026? Maybe not.

Video and images are the media forms most likely to lose their independence in 2026. Models like Flux, Ideogram, Leonardo, Midjourney, and Reve will likely be acquired by multimodal AI labs such as Google, Meta, OpenAI, and xAI. Or they may simply die.

Specialized AI models that can only generate a single media form are ripe for acquisition by bigger multi-modal AI vendors. (Nano Banana Pro)

Midjourney is a special case. It has the best style and thus the most to offer a buyer. At the same time, it’s run by fiercely independent founders who may resist being bought. Reve probably has the best editing tools, which positions them well to be acquired, given my next prediction.

Midjourney still has a more interesting and varied style than other image models. Here, the top row of images is from Nano Banana Pro and the bottom row from Midjourney 7, using this prompt: “A glamorous jazz singer rendered with bold outlines and vibrant colors. Emphasize a playful and glamorous atmosphere with a color scheme focused on midnight purple and gold.” There’s nothing wrong with the top images, and the Banana leverages its text capabilities to create fun posters. But they all look roughly the same, to the extent that the three singers wear virtually the same dress.

[Prediction 13] Editing AI-Generated Images

In 2026, image generation stops feeling like a slot machine and starts feeling like design software.

The shift is not that images get prettier (though they will) but that they become editable objects with handles, layers, and constraints. Early versions of this are already in the mainstream workflow. Reve deconstructs an image into a hierarchical tree of editable image components, and Alibaba’s Qwen-Image-Layered model automatically separates an image into editable layers. Goodbye Photoshop.

Editing an AI image with design tools that understand the semantics of the image is vastly superior to the “reroll” randomness of prompting for a revision. (Nano Banana Pro)

Design tools are absorbing the same logic: Figma has shipped AI-based erase/isolate/expand image tools that sit right inside the canvas workflow.

When a user generates an image of “a cat sitting on a sofa,” the AI understands that “cat” and “sofa” are distinct entities. The user can click the cat and drag it to the floor, and the AI instantly in-paints the background of the sofa where the cat used to be, while adjusting the cat’s lighting to its new position. Creative professionals will have Semantic Sliders: GUI controls that adjust abstract concepts such as Mood, Lighting Hardness, or Age of Subject, enabling precise, non-destructive editing without re-rolling the dice with a new text prompt.

What changes in 2026 is that edits stop being “regenerate the whole image, but hope it matches.” Instead, the model returns a structured representation: think segmentation masks, depth, lighting hints, typography layers, and identity locks, so the interface can target components rather than the entire frame.

You click the jacket and change its material from denim to leather. You select the sign text and edit the words as text, not as pixels. You drag a lamp two centimeters left, and the shadows update coherently. In other words, the primary interaction becomes direct manipulation, and language becomes the secondary control channel you use when you don’t want to hunt for a menu.
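As a rough sketch of what component-level editing implies for the data behind the interface, the example below models a scene as a set of named components that can be edited one at a time. The `Component` and `Scene` types, their fields, and the sample scene are all assumptions invented for illustration; no real image model is known to expose this exact structure, and the sketch does not attempt the hard part (re-rendering pixels, shadows, and lighting).

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Component:
    """One editable element of a generated image (hypothetical structure)."""
    name: str
    x_cm: float
    y_cm: float
    material: str = ""
    text: str = ""
    locked: bool = False  # stands in for an "identity lock"


@dataclass(frozen=True)
class Scene:
    components: tuple

    def get(self, name: str) -> Component:
        for c in self.components:
            if c.name == name:
                return c
        raise KeyError(name)

    def edit(self, name: str, **changes) -> "Scene":
        # Non-destructive: returns a new Scene and leaves the original untouched.
        target = self.get(name)
        if target.locked:
            raise PermissionError(f"{name} is locked and cannot be edited")
        updated = replace(target, **changes)
        return Scene(tuple(updated if c.name == name else c for c in self.components))


scene = Scene((
    Component("jacket", 40.0, 60.0, material="denim"),
    Component("sign", 10.0, 5.0, text="OPEN"),
    Component("lamp", 80.0, 30.0),
    Component("face", 42.0, 20.0, locked=True),
))

# Three targeted edits; every other component is carried over unchanged.
edited = (
    scene.edit("jacket", material="leather")
         .edit("sign", text="CLOSED")
         .edit("lamp", x_cm=78.0)
)
```

Because each edit names a component rather than the whole frame, the original scene stays available as history, and a locked component such as the face refuses changes instead of silently drifting between versions.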

This prediction is especially likely because the ecosystem is already standardizing on multi-turn editing as a core capability. OpenAI’s image tooling emphasizes iterative edits in multi-step flows. Google’s Gemini image model positioning also stresses not only generation but transformation and editing, including character consistency, which is one of the prerequisites for component-level control. And image generation is being pulled into ad and product pipelines (not just art), where “tweak the logo, preserve the product, change the background” is the dominant job to be done.

By late 2026, the winning image tools will look less like chat and more like Photoshop with good usability: layers, selections, constraints, history, and exportable variants; all powered by a model that understands what each pixel is for.

[Prediction 14] The Two-Tier AI World

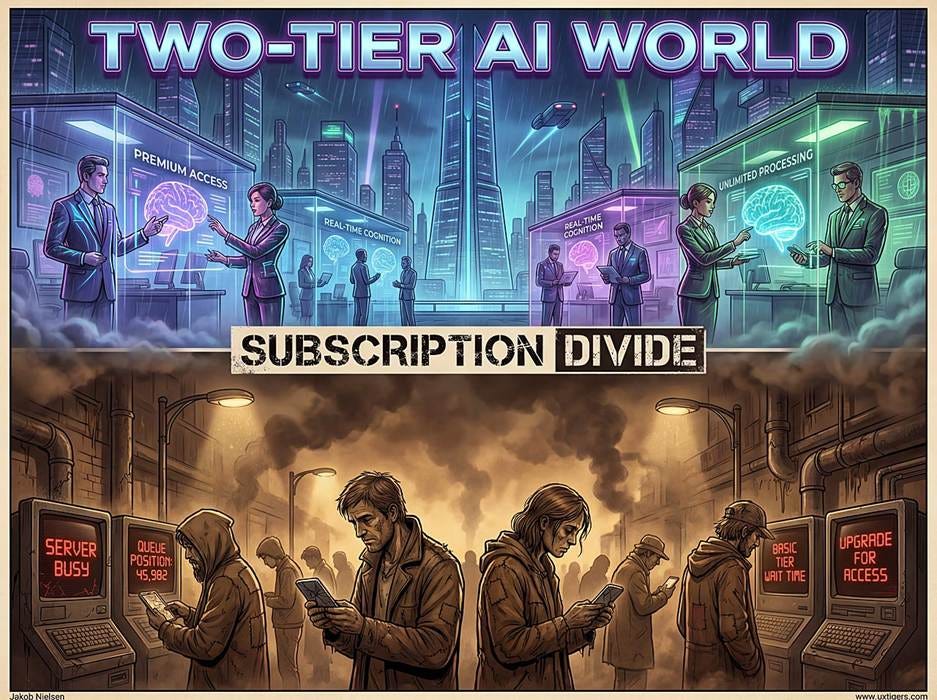

A stark Cognitive Class System is emerging in the workforce, defined not by education, but by subscription tiers. The “Democratization of AI” was a myth; the reality is the Subscription Divide.

There is a widening chasm between professionals who pay for high-end AI models (~$200/month for high-reasoning, large-context models) and the unwashed masses who rely on free, deprecated models.

The Premium Class has integrated AI into their deep workflows, using it for strategic forecasting, complex coding, and nuanced negotiation simulation. They understand the capabilities of frontier AI and what comes next. Conversely, Free-Tier users are stuck with smaller, dumber models that hallucinate frequently.

The result in 2026 is a social and professional phenomenon: a cohort of AI power users who can afford (or expense) premium tiers and therefore learn the real workflows with long context, multimodal reasoning, agent-style delegation, iterative creative editing, and high-volume experimentation. Alongside them sits a much larger group of Free-Tier users whose mental model of AI is frozen at “a chatbot that sometimes refuses, sometimes times out, and can’t do the serious work.”

Because their tools are unreliable, these users conclude that “AI is just a hype bubble” or “useless for real work,” failing to develop the AI literacy required for the modern economy.

Both groups will claim to be “using AI,” but they will not be describing the same tool. Sadly, current usage statistics indicate that 90% of AI users are in the Free-Tier peon class. Premium Tier users only account for 10%, but because they understand how to best use AI, many AI services have above 100% revenue retention.

(Revenue retention for a subscription service is the percent of revenue it’s still receiving from subscribers who signed up a year ago. This is typically around 50% due to churn, which is why services constantly need to attract new subscribers. For many AI services, people upgrade to higher subscription tiers and/or buy extra credits because they get so much value from the tool, meaning that revenue after a year is higher than the original revenue from that cohort.)
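The arithmetic behind revenue retention above 100% can be shown with a small worked example. The cohort size, prices, and churn figures below are made-up numbers chosen only to illustrate the definition; they are not data from any actual AI service.

```python
def revenue_retention(initial_revenue: float, revenue_year_later: float) -> float:
    """Percent of a cohort's initial revenue still being received a year later."""
    if initial_revenue <= 0:
        raise ValueError("initial revenue must be positive")
    return 100.0 * revenue_year_later / initial_revenue


# Illustrative cohort: 100 subscribers paying $20/month at signup.
initial = 100 * 20.0

# Typical subscription service: half the cohort churns, nobody upgrades.
typical = revenue_retention(initial, 50 * 20.0)

# Hypothetical AI service: 30 churn, 50 stay at $20, 20 upgrade to $200/month.
ai_service = revenue_retention(initial, 50 * 20.0 + 20 * 200.0)

print(typical)     # 50.0
print(ai_service)  # 250.0
```

In this made-up case, the AI service loses more than a quarter of its subscribers and still more than doubles the revenue from that cohort, because a minority of upgraders outweighs the churn.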

In 2026, failing to understand advanced AI workflows is the new “I don’t know how to use Excel.”

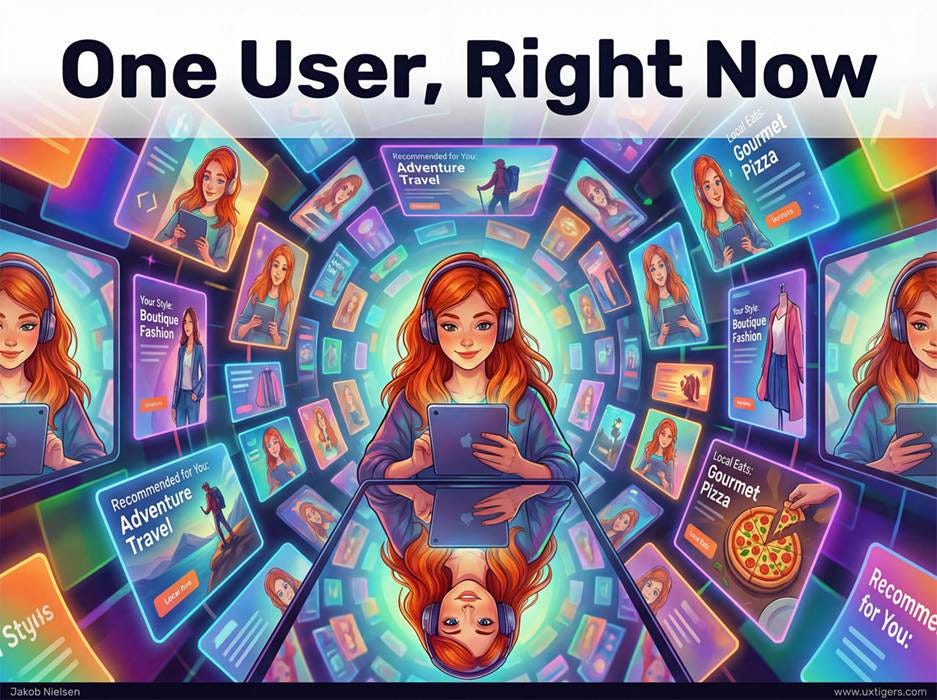

[Prediction 15] Ultimate Niche Targeting: One User, Right Now

In 2026, the concept of a “target audience” becomes a relic. The practical unit of targeting becomes the individual, in the moment, in their current context; and AI provides the machinery to do this at scale.

This isn’t just about better recommendations. It’s about content, offers, and creative assets being assembled dynamically so that each person sees a version optimized for what they are likely to want, need, or respond to right now.

The trendline is already visible in how platforms are harvesting intent:

Meta has said it will use interactions with its AI assistant to personalize ads and recommendations, and notably it describes this as not fully opt-out. That is a major shift because AI chats are unusually high-signal. They reveal goals, doubts, and preferences more explicitly than likes and clicks. Meta’s GEM (a generative ads recommendation model) is designed to improve the ability to serve relevant ads and increase advertiser ROI. Meta plans to let brands fully create and target ads with AI by the end of 2026: essentially “give us your product image and budget, and the system does the rest.” Bye-bye, advertising agencies.

Google is moving in parallel, but through creative tooling embedded in the ad workflow. Google Ads documentation describes built-in generative AI tools for creating image assets, and Google has promoted new image generation/editing models that appear across products including Google Ads.

Once ad platforms can generate unlimited variants, the limiting factor becomes feedback loops: systems that learn from every impression and adjust creative elements in real time, a concept that marketing literature already frames as dynamic creative optimization.

My 2026 prediction: “Creative” and “targeting” collapse into a single optimization layer. Instead of shipping one campaign to many people, brands will ship a constraint set of visual rules, claims allowed, price floors, inventory, tone; and AI will assemble a unique mix of image, copy, offer, and landing page for each user-session.
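One way to picture “shipping a constraint set” is as a filter that every AI-assembled variant must pass before it is shown. The sketch below is purely illustrative: the `ConstraintSet` and `Variant` types, their fields, and the sample values are assumptions, and real ad platforms will have their own (different) mechanisms.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConstraintSet:
    """What the brand ships instead of a finished campaign (hypothetical)."""
    allowed_claims: frozenset
    allowed_tones: frozenset
    price_floor: float
    in_stock: frozenset


@dataclass(frozen=True)
class Variant:
    """One AI-assembled mix of product, copy, and offer for a user session."""
    product: str
    claim: str
    tone: str
    price: float


def violations(variant: Variant, rules: ConstraintSet) -> list:
    # Collect every broken rule so a reviewer can see why a variant was blocked.
    problems = []
    if variant.claim not in rules.allowed_claims:
        problems.append("claim not approved")
    if variant.tone not in rules.allowed_tones:
        problems.append("tone off-brand")
    if variant.price < rules.price_floor:
        problems.append("price below floor")
    if variant.product not in rules.in_stock:
        problems.append("product out of stock")
    return problems


def shippable(candidates, rules: ConstraintSet) -> list:
    return [v for v in candidates if not violations(v, rules)]


rules = ConstraintSet(
    allowed_claims=frozenset({"vet-recommended", "grain-free"}),
    allowed_tones=frozenset({"warm", "playful"}),
    price_floor=19.0,
    in_stock=frozenset({"dog-food-5kg"}),
)

candidates = [
    Variant("dog-food-5kg", "vet-recommended", "warm", 24.0),
    Variant("dog-food-5kg", "cures arthritis", "warm", 24.0),    # unapproved claim
    Variant("dog-food-5kg", "grain-free", "aggressive", 15.0),   # off-brand and too cheap
]

approved = shippable(candidates, rules)
```

The point of the sketch is the division of labor: the brand owns the rules, the AI owns the combinations, and only variants with an empty violation list ever reach a user.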

Ultimate narrowcasting means that your digital experience becomes a mirror of yourself as content providers, advertisers, and application user interfaces adapt on the fly to a niche of one person (you) in your current context. Very useful, as long as you can resist those very tempting ads. (Nano Banana Pro)

Advertising will likely lead this transition to the ultimate niche, but other content formats won’t be far behind. When a user visits an e-commerce site or news portal, the content will not be recommended from a database; it will be generated or rewritten on the fly to match that specific individual’s immediate psychological state and context.

If the AI detects (via browsing speed, time of day, and recent queries) that you are in a “hurried, transactional” mode, the interface will strip away fluff, rewrite descriptions to be bulleted punchlines, and highlight the “Buy Now” button. If it detects you are in “discovery” mode, it will generate rich, narrative stories around the product.

Anything you see won’t be for a general audience; it is for you specifically right now, given that you bought dog food yesterday and might be concerned about nutrition. The web will no longer be static; it becomes a mirror reflecting your immediate intent.

[Prediction 16] Physical AI: The Brain Gets a Body

For years, AI mostly lived behind screens, but 2026 is slated to be the year it truly invades the physical world.

I don’t predict that robots will literally be walking the streets in 2026, so this image uses artistic license to show a metaphor of the AI “brain” getting embodied in physical devices. Autonomous cars will definitely be on the streets in ever more cities in growing numbers. Statistics from San Francisco already show customers’ revealed preferences for riding in a Waymo vehicle rather than a human-driven Uber. (Nano Banana Pro)

The most visible change: the breakout of autonomous vehicles. Self-driving car services will expand from the pilot zones around my house and other high-tech places to multiple cities, making driverless taxis and shuttles a common sight.

Companies like Zoox and Waymo are poised to scale up operations, and entrants from China are joining the fray outside NATO. Don’t be surprised if, by late 2026, most cars on certain urban roads are self-driving: a sudden shift much like the rapid appearance of electric scooters a few years ago. It’s already common to see multiple Waymos lined up at traffic lights in San Francisco.

Alongside cars, AI-driven robots will move from factories and pilot programs into everyday settings. Expect more robots in:

● Retail and hospitality: Robot assistants in stores, automated baristas

● Healthcare: Elder-care robots, medical delivery drones

● Warehousing and logistics: Continued expansion of robotic operations

In-home robots will probably have to wait a few more years, but I fully expect to get my domestic robot before I become too old to lift the heavy saucepans.

(Watch the cool video of experimental firefighting drones in China: they can fly to places that are hard or dangerous to reach with firetrucks and ladders. They can map heat sources, spot structural risks, and locate trapped people. Some drones spray water or fire retardant directly on upper floors of burning high-rise buildings or remote forest locations. For sure, this will save the lives of many human firefighters.)

AI-operated firefighting drones will save many lives, both civilians and human firefighters. (Nano Banana Pro)

Xpeng’s stated plan to mass-produce humanoid robots later in 2026 is emblematic: early industrial use, narrow tasks, then expansion in 2027.

[Prediction 17] The Apprenticeship Comeback

In 2025, junior jobs began to die off. I expect this to continue for traditional junior UX staff. Who needs them when AI can do their job better and cheaper?

The optimistic version of 2026 is not “no junior jobs.” It is “different junior jobs,” with clearer mentorship and narrower scope. The pessimistic version is a lost cohort of juniors building portfolios that look impressive but aren’t grounded in judgment.

By late 2026, the way new people enter UX will change more than the way seniors work. This is a rare prediction because most career forecasts focus on tools, not on learning economics. But once AI accelerates execution, the bottleneck moves to judgment, and juniors need a different path to acquire it.

The real question is: how do you teach judgment? Courses can’t teach it. Sitting at the feet of a master as he or she exercises superior judgment over long periods of time is the only way.

For literally millennia, apprenticeships have been the way a young person learned judgment from a master. (Nano Banana Pro)

My forecast: entry-level UX hiring will become more apprenticeship-like. Companies will hire fewer “fresh generalists” and more trainees attached to specific domains: accessibility, content, design systems, research operations, or growth. They will expect juniors to use AI fluently for production work, and they will evaluate them on decision quality, not output volume.

A major risk for these apprentices is the allure of Synthetic Users. It is now possible to ask an AI to “pretend to be a confused elderly user trying to buy insurance” and run usability tests on a design in seconds. While useful for finding obvious bugs, this is poison for an apprentice’s education.

You cannot develop human-centric judgment by observing a machine. If 2026’s junior UXers rely on synthetic data to avoid the logistical hassle of recruiting real humans, we will raise a generation of designers who understand how AI thinks people behave, rather than how people actually behave. (Synthetic user testing may be possible in 10 years, but requires substantial advances in training AI on real usability data.)

My optimistic vision for UX apprenticeships might be derailed by short-termism on the side of both companies and junior staff. If companies demand seniors but refuse to train juniors, they will create a future talent shortage. But if junior staff expect apprenticeship positions to pay the same as old-school entry-level jobs, these positions won’t materialize, even in forward-looking companies.

Apprentices should view a low-paid apprenticeship as a better option than paying tuition fees. (Getting a little money is better than paying a lot of money, after all.) It’s a learning period and should be treated as such.

[Prediction 18] Human Touch as Luxury: No

Some influencers are predicting the rise of handmade content as the ultimate luxury, where consumers are expected to pay extra for human-drawn comic books, human-written novels, human-designed computer games, and movies with human actors rather than better-looking avatars.

I don’t believe this will happen, except for a few exceptional cases.

During a transition period, audiences may indeed be willing to pay more to watch movies starring legacy actors or listen to songs performed by legacy human musicians that they know. But in the long run, what will count is the quality of the content, not how it was made.

Even today, audiences don’t care how the special effects were made, whether an animation was hand-drawn or computer-animated, or in which country a movie was filmed.

In contrast, 2026 will see the release of the first breakout hit video game created entirely by a creator with no formal programming skills who built the entire game using natural language prompts. This shifts the definition of a “Game Developer” from a technical architect to a “Director of Logic.”

We may also see AI-native games with Sentient Mechanics. Players will not shoot enemies; they will have to “convince” AI-powered NPCs (Non-Player Characters) to help them using natural voice conversation. These NPCs have unique psychological profiles and hidden agendas, and they remember every interaction. The game cannot be beaten by following a walkthrough because the AI characters react dynamically to the player’s specific persuasion style.

This creates a new genre of “Conversational RPGs” in which social engineering is the core gameplay loop, and the creative process for designers centers more on writing character backstories than on scripting dialogue trees.

Gameplay and storytelling will attract customers. Which parts of the content were made with meat or silicon won’t matter nearly as much.

I only see two jobs where meat-based lifeforms will likely always be superior to machines: prostitutes and schoolteachers.

Teachers will not be doing any actual teaching in twenty years: any curriculum will be much better taught by AI, which can individualize the presentation and pace to each student’s talents and interests. But humans will still be needed in elementary education for two purposes I strongly doubt AI can fulfill: keeping the little darlings on track and serving as adult role models.

Just because a kid can learn from AI doesn’t mean that he or she wouldn’t rather play games. AI teaching will absolutely be dramatically more motivating and engaging than the current school system, but future computer games will also be dramatically more entertaining, so we’ll need human teachers to keep the children on the learning track.

This redefining of the role of human adults is already happening in advanced independent schools like Alpha School, where all instruction is by AI, and the adults are considered “coaches” for the students.

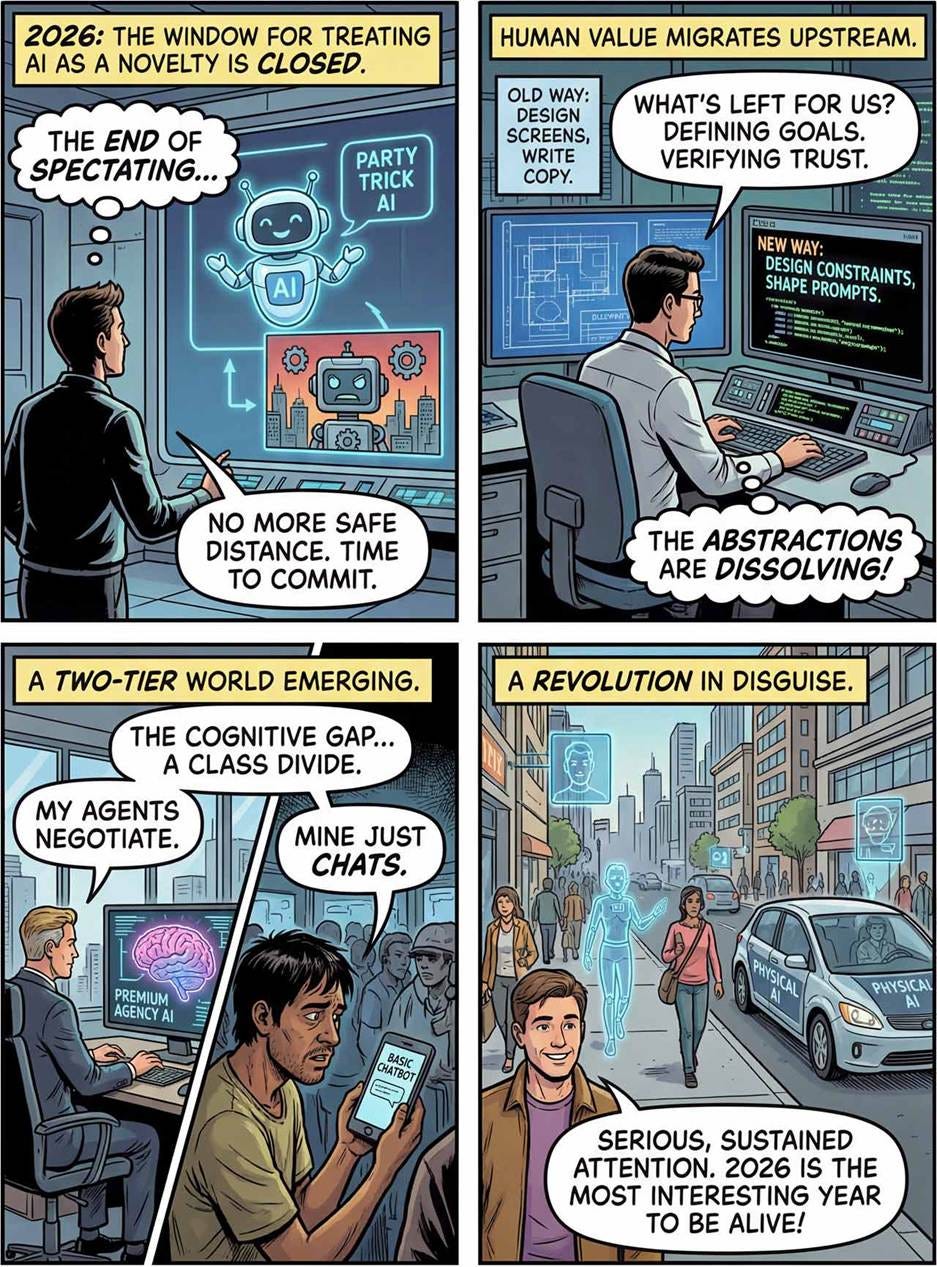

Conclusion: The End of the Novelty Phase

As a reminder, these are my 18 predictions for 2026. (Nano Banana Pro)

What unites these eighteen predictions is not optimism or pessimism about AI but a recognition that 2026 marks the end of spectating. The window for treating AI as an interesting phenomenon to observe from a safe distance has closed. This is the year when individuals, companies, and entire professions must commit to a position: adapt deliberately, or be adapted to.

The through-line connecting autonomous agents, generative interfaces, multimodal world models, and the subscription divide is a single uncomfortable truth: the abstractions that made the previous technology era manageable are dissolving. We used to design screens, write copy, build features, and hire for roles. In 2026, we increasingly design constraints for systems that generate screens, write prompts that shape copy, specify behaviors rather than build features, and hire for judgment rather than execution. The nouns of professional life are becoming verbs, and the verbs are becoming policies.

This is disorienting precisely because it demands a new theory of contribution. For decades, knowledge workers derived identity and value from what they produced: the report, the design, the code, the campaign. When AI can produce these artifacts faster and often better, the residual human contribution becomes harder to articulate. The answer emerging from these predictions is that human value migrates upstream: to defining what should be made, to verifying that what was made is trustworthy, to holding the goals that the system optimizes toward. This is less tangible and, for many, less satisfying. But it is where leverage now lives.

For UX professionals specifically, the message is stark but not hopeless. The discipline’s survival depends on shedding its nostalgia for the era when designing a clean checkout flow was sufficient proof of value. The new work is messier: shaping AI behavior, auditing agent decisions, designing for trust in systems that cannot be fully understood, and advocating for users who are increasingly targeted by personalization engines that know them better than they know themselves. These are harder problems than arranging pixels. They also matter more.

2026 marks the end of the “Party Trick” era of Artificial Intelligence and the beginning of the Integration Era.

For the past three years, the world has been fixated on Raw Intelligence: the race to build the smartest model that can answer a prompt. But as models converge in capability and the technical moats evaporate, raw IQ is becoming a commodity. In 2026, the defining competitive advantage shifts entirely to User Experience and Agency.

We are witnessing the death of static software. The organizing principle of 2026 is that we are moving from Conversational UI (chatting with a bot) to Delegative UI (managing a digital workforce). Whether it is AI Agents negotiating on our behalf, Generative UIs drawing interfaces on the fly to suit our immediate intent, or Physical AI navigating our streets, the software is no longer waiting for us to click a button. It is acting alongside us.

However, this transition from tool to teammate brings a harsh new reality. The optimistic myth of democratized AI is colliding with the constraints of physics and economics. As AI weaves itself into the structural fabric of the economy, a Two-Tier World is emerging. The divide is no longer just about who has internet access, but who can afford the premium compute required for true agency and reasoning.

The new digital divide is between people who “get” AI because they have a paid subscription and those who reject it as useless because they have no experience with frontier models, being stuck at the free level. (Nano Banana Pro)

The class divide prediction deserves particular weight because it determines who gets to participate in this transition. A society where ten percent of workers understand what AI can actually do, while ninety percent believe it is an overhyped chatbot, is not merely inefficient; it is unstable. The cognitive gap will map onto economic gaps, and economic gaps will become political ones. Whether companies, governments, and educators act to close this divide in 2026 will shape the social fabric for a generation.

Perhaps the deepest truth in these predictions is that 2026 will not feel like a revolution while it is happening. Revolutions rarely do. The people living through the early years of the printing press, the automobile, or the internet mostly experienced inconvenience, confusion, and incremental adaptation; not a dramatic before-and-after. The same will be true this year. AI will break some workflows, improve others, disappoint in unexpected places, and astonish in others.

Companies will fumble integrations. Agents will fail in embarrassing ways. Hype cycles will oscillate. It’s a revolution, nevertheless: when historians look back, they will likely see 2026 as the year when the infrastructure of the AI era was laid, not in data centers alone, but in the habits, expectations, and institutional arrangements that determine how a technology becomes embedded in daily life. The decisions made this year about how to train juniors, how to price access, how to design for trust, and how to combat manipulation will echo for decades.

The appropriate response to this moment is neither panic nor complacency. It is serious, sustained attention to building the skills, relationships, and mental models that will matter when the dust settles. For those willing to do that work, 2026 is not a threat. It is the most interesting year to be alive.

It’s happening! Exciting! (Nano Banana Pro)

Watch my music video about my predictions for AI & UX in 2026. (YouTube, 5 min.) And compare with the music video I made a year ago with my predictions for 2025. (YouTube, 3 min.) AI avatar animation has progressed substantially in just one year!

About the Author

Jakob Nielsen, Ph.D., is a usability pioneer with 42 years of experience in UX and the Founder of UX Tigers. He founded the discount usability movement for fast and cheap iterative design, including heuristic evaluation and the 10 usability heuristics. He formulated the eponymous Jakob’s Law of the Internet User Experience. He has been named “the king of usability” by Internet Magazine, “the guru of Web page usability” by The New York Times, and “the next best thing to a true time machine” by USA Today.

Previously, Dr. Nielsen was a Sun Microsystems Distinguished Engineer and a Member of Research Staff at Bell Communications Research, the branch of Bell Labs owned by the Regional Bell Operating Companies. He is the author of 8 books, including the best-selling Designing Web Usability: The Practice of Simplicity (published in 22 languages), the foundational Usability Engineering (29,801 citations in Google Scholar), and the pioneering Hypertext and Hypermedia (published two years before the Web launched).

Dr. Nielsen holds 79 United States patents, mainly on making the Internet easier to use. He received the Lifetime Achievement Award for Human–Computer Interaction Practice from ACM SIGCHI and was named a “Titan of Human Factors” by the Human Factors and Ergonomics Society.

· Subscribe to Jakob’s newsletter to get the full text of new articles emailed to you as soon as they are published.

· Read: article about Jakob Nielsen’s career in UX

· Watch: Jakob Nielsen’s first 41 years in UX (8 min. video)

Hey Jakob, after we finished reading your article on the predictions, we went to bed. Only to wake up at 3 AM with some of our own thoughts 💭.

While we agree with your thoughts on how the different AI tools will become really good and may occasionally overtake each other, we don't quite agree with the whole "switch from one to the other every few months" approach.

Here's why: Context & Memory

Now that AI tools allow us the option to ✅ switch on Context/Memory using the chat history, switching AI tools mid-stream on any large-scale project can be potentially disruptive, whether it's design or engineering.

Even if a designer or engineer is done with a single project in one season using their AI tool of choice and decides to switch after, there is always a chance that they may need to revisit the project later to make updates, improvements, or changes.

Again, context and chat session memory do matter here as well.

In our very own case, we are working on a major product vision (our next digital masterpiece, "magnum opus") that could span the next 2-3 years. Or shorter once we can get our hands on the right hardware. Personally, we wouldn't consider switching, even if that means continuing to use an AI tool that is slightly behind the others.

At the end of the day, all these AI tools are nothing but accelerators. What still matters is the designers/engineers themselves.

Anyway, that is our 3 AM take on this topic. It's 3:11 AM, time for us to go back to sleep.