GEO Guidelines: How to Get Quoted by AI Through Generative Engine Optimization

Summary: Being mentioned in AI answers is the new share-of-voice for brands and influencers. Being ignored by AI is like being on page 5 of a Google SERP in the old days. We don’t know for sure yet how to optimize for AI, but some guidelines have started to emerge. The only sure advice is to follow the space and track the placement of your own content across the main AI tools.

Generative Engine Optimization is the way for your company to be seen in the age of AI. (ChatGPT)

Explainer video about this article (YouTube, 3 minutes).

Optimizing web content for human readers has long been a key focus of both usability and SEO. Now we face a new challenge: optimizing for AI answer engines so that our sites are actually referenced in AI-generated answers. Users are increasingly getting information from AI answer engines such as ChatGPT, Perplexity, and Google’s Gemini/SGE; often without ever clicking a traditional search result. This shift demands that content creators change from asking “How do I rank on Google?” to prioritizing “How do I get cited by the AI?”. (Spoiler: Resist the urge to create spammy, AI-bait content: the robots might notice, and the users won’t like it either.)

A fundamental shift is happening in users’ behavior. Where they once relied almost totally on search, they are turning more and more to AI to help solve their problems. Not being mentioned in AI responses is the new equivalent of appearing on page 5 of Google’s search hits: you might as well not exist. (ChatGPT)

What to Call It? GEO or AEO?

A primary symptom of this paradigm shift is the sudden and often confusing proliferation of new terminology, as each consultancy engages in vocabulary inflation in an attempt to brand its own solution. Industry professionals are now confronted with an acronym soup that includes AEO (Answer Engine Optimization), GEO (Generative Engine Optimization), AIO (AI Optimization), LLMO (Large Language Model Optimization), AISO (AI Search Optimization), and even GSO (Generative Search Optimization).

My preference would have been AEO, because I take a usage-centered view, where the shift from search results to answers is the defining characteristic.

However, I am also pragmatic (and the originator of Jakob’s Law), so I’ll go with the majority view and use the term that’s most commonly used on the Internet, because that’s what readers are likely to understand. GEO seems to be the winner, and that’s the term I’m using in this article.

Many acronyms are in use for the basic idea of aiming to be included in AI responses. I settled on “GEO” (Generative Engine Optimization) as my preferred acronym for this article. (ChatGPT)

Besides simply complying with the majority of other influencers’ writing, there’s a conceptual reason to prefer “GEO” when discussing the need for brands to influence AI:

Stronger AI, including reasoning models, does not merely extract answers; it generates new, synthesized responses by consuming, understanding, and combining information from multiple sources. Consequently, GEO is focused on optimizing for synthesis. It is a broader discipline concerned with ensuring content is not only discoverable but also contextually complete, authoritative, and trustworthy enough for an AI to use as a foundational source for creating a new piece of content.

AI synthesizes its replies to user requests by drawing from many sources and integrating them. This means that you can’t hope to be the one and only answer, but you can aim to be included in the synthesis. (ChatGPT)

The tactics of AEO (structuring content for clarity, using schema, and answering questions directly) are foundational for GEO. However, GEO expands this playbook to include a much broader set of strategies focused on building cross-platform authority, demonstrating verifiable trustworthiness, and managing a brand’s entire digital entity to influence how AI models think and communicate.

GEO it is, my dears.

The New Citation Game: How AI Tools Choose Sources

Before we optimize anything, we need to understand how AI engines select and cite sources. Recent analyses show that different AI platforms have starkly different citation patterns:

ChatGPT heavily favors a few big sites. In one study of 30 million AI citations, nearly 48% of ChatGPT’s top cited sources were Wikipedia. Reddit is a distant second (~11%), followed by a mix of well-known outlets like Forbes, TechRadar, NerdWallet, etc. ChatGPT clearly leans on broad, presumably authoritative sources (Wikipedia for facts, Reddit for community discussions). Another study found that ChatGPT often produces the longest answers with the most citations, presenting about 10 references on average per response, frequently peppering each sentence with a source. It’s as if ChatGPT wants to show all its homework: thorough but sometimes overkill. Interestingly, despite being owned by OpenAI’s arch-enemy Google, YouTube links are the single most common domain cited by ChatGPT answers (about 11.3% of all references), indicating the AI often draws on video transcripts or descriptions for information.

ChatGPT currently cites Wikipedia too often. It should broaden its horizons, like Perplexity has done.

Google’s AI Overviews tend to cite more broadly but still skew toward high-authority and social content. The top cited source in Google’s AI Overviews is Reddit (~21% of top citations), with YouTube next (~19%). Professional Q&A and analysis sites like Quora and LinkedIn also feature prominently. Google’s AI summaries also tend to list many sources (9.26 links on average), essentially a grab bag of references at the end of an AI blurb. It’s as if the AI is saying, “Here’s your answer, and by the way, I skimmed these ~9 articles to get it.” Google’s approach seems to err on the side of inclusive citation, perhaps to preempt criticism of transparency. Notably, Google’s AI often pulls in relatively older sources, with nearly half its cited domains being over 15 years old, reflecting Google’s trust in established sites (and maybe a bias toward its existing index order).

Bing Chat (Microsoft AI via GPT-4, sometimes dubbed “Copilot” in search) is much more conservative in citing. It usually gives a concise answer with only ~3 sources referenced on average. These answers are short and to the point (about 400 characters on average) – a stark contrast to ChatGPT’s verbose essays. Bing’s top cited domain is reportedly WikiHow (around 6.3% of citations), showing a penchant for step-by-step how-to content. (Yes, Bing’s AI apparently really likes those illustrated DIY guides, possibly because WikiHow pages are precisely structured to answer procedural questions). Wikipedia appears too, but interestingly, Bing uses YouTube far less than others (under 1% of its citations), perhaps reflecting Bing’s preference for text-heavy sites or simply differences in query types. Overall, Bing cites fewer, often very relevant pages, likely drawing from the top few Bing search results for a query. In fact, an analysis found 87% of ChatGPT’s cited web content came from Bing’s top 10 search results for the same query (ChatGPT’s web mode essentially piggybacks on Bing). If you rank well on Bing, you greatly increase your chances of being cited by ChatGPT’s browsing mode. (Google’s top results, by comparison, matched only ~56% of ChatGPT’s citations, confirming that OpenAI’s partnership with Bing tilts the scales heavily toward Bing’s index.)

Perplexity is known for always providing sources. It shows about 4–5 citations per answer (consistently ~5 links), which are often community or niche sources. In fact, Reddit dominates Perplexity’s citations (46.7% of its top-ten source list), far more than any other platform. It loves forums, reviews, and user-generated content, citing Reddit threads, Yelp or TripAdvisor for local info, and StackExchange for technical answers. This suggests Perplexity tries to surface the wisdom of crowds a lot. It also implies new or specialized content can get picked up here even if it’s not from a famous site as long as it’s contextually relevant. Indeed, one study noted both ChatGPT and Perplexity frequently link to pages with minimal traffic (roughly 45% of cited pages had negligible visitor counts). In other words, these AIs will surface long-tail content that human-driven Google SEO might never highlight. This is great if your obscure blog post is the only one answering a niche question because the AI will find it, even as Google buries it.

In summary, the citation behaviors vary: ChatGPT and Perplexity output longer answers with lots of references, including niche sources, while Bing is choosy and terse and Google’s SGE tries to be comprehensive but still leans on high-authority and multimedia sources. This means where you focus your optimization might depend on which AI you care about. If you want to be cited by everyone, you need to cover your bases: be in Wikipedia for ChatGPT, in Reddit or community discussions for Perplexity, have structured how-to or FAQ content for Bing, and maintain overall authority signals for Google’s systems.

AI is like a queen bee that sits in the middle of the hive and selects from the content sources offered up by all its worker bees. In this analogy, different AI models are like different species of bees, so their “queens” like different flavors of metaphorical pollen. (ChatGPT)

One more insight from these studies: AI is still overwhelmingly English-centric (at least when not using Chinese AI products, which were not included in this research). In Profound’s analysis from June 2025, a whopping 80%+ of citations were from .com domains and ~11% from .org (e.g., Wikipedia) – that’s 91% from primarily English/global sites. Country-specific domains (like .uk, .br, .ca) collectively made up only ~3.5%, and some of them are also in English, even if they employ variant spellings. This doesn’t mean AIs never cite non-English content (they certainly do if the query is in those languages), but it highlights an English bias in the aggregated data. An Italian blog or a Japanese forum might only get cited when the AI is asked in Italian or Japanese, respectively. Otherwise, the English-language sources hog the limelight.

(Speculative) How to Make Content That AI Wants to Cite

Now for the million-dollar question (literally, given how much companies are investing in this): How can you present your content to maximize the likelihood that an AI will include or cite it in its answer? No official “AI ranking algorithm” has been published (just as Google never fully published its classic algorithm), so we must rely on credible speculation and emerging best practices. In my web usability days, I usually advised designing for how real users behave; now, our “user” is an AI reading and synthesizing our pages.

The ultimate reason to care about GEO is that there is significant money at stake for brands. AI mentions will directly connect to revenue. (ChatGPT)

The following are only speculative strategies. They are grounded in what we know about LLMs and the above citation patterns, but I don’t know for a fact that they work. Think of them as hypotheses you should test with your own content.

GEO currently feels like a carnival game where you’re throwing darts at different ideas, hoping to win mentions from the AI models. We don’t know for sure yet what works, so you need to experiment: throw those darts. (ChatGPT)

Structure Your Content for Skimmability (By Humans and Bots). Ironically, the same principles that enhance human usability (clear structure, headings, bullet points, concise sections) also make content more digestible for AI. Large language models “read” a page holistically, but they thrive on well-organized information. One playbook suggests using descriptive headings, lists, and tables so the AI can easily grab facts. For example, include a quick “Key Points” bullet list at the top or a Pros vs. Cons table for product comparisons. Imagine an AI scanning your article; if it can quickly find a snippet that directly answers a question (thanks to a bold subheading or a neat list), it’s more likely to use it verbatim. Speculation: A nicely formatted FAQ section on your page, with questions as headers and answers below, might get pulled directly into an AI’s response if users ask those exact (or semantically similar) questions. In short, write for scanners: both human and silicon. (I always said users don’t read, they scan; well, AIs do read, but they reward content that’s easy to parse.)

Cover the Breadth of Questions (Semantic SEO → Semantic GEO). Traditional SEO often targeted one primary keyword or query per page. GEO suggests you should answer many related questions on one page to increase the odds of matching the AI’s query. For instance, instead of a single long essay about your product, create a structured article that answers dozens of likely questions users might ask an AI about that product. If you run a camera review site, your page about a camera shouldn’t just say “Review of Camera X”; it should explicitly address things like “Is Camera X good for low light?”, “How does Camera X compare to Camera Y?”, “What’s the battery life of Camera X?”, etc. Each of those could be a section or at least a bold question followed by an answer. This Q&A format pre-empts the AI’s needs because you’re basically giving the chatbot ready-made chunks to quote. (Think of it as writing the script that the AI voice assistant will read out, since that’s increasingly what’s happening given the many Advance Voice Mode tools.)

Use Specialized Vocabulary, Even Straying into Jargon. In traditional web usability, I used to recommend minimizing jargon, which many humans would be unlikely to understand. AI understands everything, and the use of precise and technically accurate vocabulary may make it more likely that your content will match an AI’s internal model of a problem.

Leverage Structured Data. AI models currently read web pages roughly like a human would (or via the search engine’s index of them), but providing machine-readable cues can’t hurt and likely helps. Using schema markup (like FAQ schema, HowTo schema, Product specs) might make it easier for search-engine-based AIs to identify relevant pieces. Google’s generative search, for instance, can draw on its Knowledge Graph and structured data. If your content is marked up clearly (e.g. an FAQ block with schema), the AI might directly pull those answers. Even without fancy schema, just ensure your HTML is clean: use <h2>/<h3> for headings (so the AI or the crawler can see the content hierarchy), use lists for steps or enumerations (as we do in usability for scanning), and provide alt text for images (in case the AI tries to interpret an infographic or image on your page).

Be Fast. AI systems often operate under tight retrieval timeouts, sometimes as short as 1–5 seconds. A page that fails to load quickly may be truncated or ignored entirely. This makes optimizing for Core Web Vitals and overall page speed more critical than ever.

AI aims for fast response times to keep users happy. Websites need to deliver their content even faster to be included when AI is synthesizing its answer. (ChatGPT)

Cross-Funnel Holistic View. GEO necessitates a shift from optimizing individual web pages to optimizing the brand as a holistic entity. AI constructs its understanding of a brand, product, or person by synthesizing information from every public source available. Therefore, a comprehensive GEO strategy must extend far beyond the boundaries of the corporate website. This approach requires a new perspective on the content funnel. In traditional marketing, content is designed to guide a user down a path from awareness (Top-of-Funnel, TOFU) to consideration (Middle-of-Funnel, MOFU) and finally to purchase (Bottom-of-Funnel, BOFU). In an AI-driven world, the AI acts as the user’s agent, traversing this entire funnel simultaneously on their behalf to synthesize a single, comprehensive answer. For instance, when a user asks a high-intent query like, “What is the best accounting software for a freelance designer?”, the AI needs to understand TOFU content (“What is accounting software?”), MOFU content (“QuickBooks vs. FreshBooks comparison”), and BOFU content (“Best tools for freelancers”) to generate a truly helpful response. To win the citation for that single query, a brand must demonstrate authority across the entire topic cluster. This makes a deep, interconnected library of content covering all stages of the user journey essential for establishing the topical authority required to be cited in high-value, AI-generated answers. Key off-site channels that must be actively managed as part of a GEO strategy include:

Forums and Communities: AI models weigh community-driven sources like Reddit and Quora heavily when assessing public sentiment and real-world experience. A positive, helpful presence in these communities is a powerful trust signal.

Digital PR and News Mentions: Securing earned media, bylines, and expert mentions in reputable industry publications and news outlets builds significant authority for the brand entity.

Reviews and Social Proof: User-generated content on third-party review sites and discussions on social media platforms provide AI models with invaluable data on product quality and customer satisfaction.

Video and Multimedia: Content on platforms like YouTube serves as another rich data source for AI and expands the brand's digital footprint, increasing the surface area for discovery and citation.

Content strategy for the AI era should be holistic, as outside sources often receive stronger AI citations than your own website. (ChatGPT)

Create Comparative and Superlative Content. Many AI queries are implicitly requesting a comparison or the “best” option (e.g. “What’s the best laptop under $1000?” or “Laptop A vs Laptop B – which is better for gaming?”). AI loves to synthesize comparisons, and it loves when it can pull from someone who’s already compared things. Content that explicitly compares options in a structured manner stands a higher chance of being selected. For instance, publishing a “X vs Y” comparison chart or a “Top 10 products in [category]” list with clear rankings/criteria can be a goldmine for answer engines. One GEO consultant observed that AI answers often reference “Top N” listicles and product comparison tables. If you don’t provide that content and your competitor does, guess who the AI is likely to cite when someone asks, “Which one should I buy?” So, anticipate the comparative questions and answer them. Give the AI an easy table or list to quote, and it just might.

Comparison tables are high-nutrition fodder for AI to understand a topic. (ChatGPT)

(The above strategies are speculative, but they’re essentially about making your content clear, comprehensive, and contextually relevant. Qualities that, notably, also improve human usability. You should ask: “How can we make it painless for an AI to extract the right answer from our page?” The answers align suspiciously well with how to make it painless for a human to extract info, which shouldn’t be surprising. An AI model that’s trained on human-curated content probably has similar taste to human users: it “likes” content that is straightforward and authoritative. That’s good news: what’s good for AIs may largely be what’s good for human readers.)

Optimizing content for human readers and for AI to consider quoting is likely to have considerable similarities and be nested within each other. (ChatGPT)

If your audience is international, consider offering content in multiple languages. An English AI answer won’t cite your brilliant Spanish article that it hasn’t seen or understood. If you have the resources, a translated English version of your content might have a higher chance of being picked up in global answers. (This is akin to old-school SEO advice of having an English version for broader reach: now it might apply to AI visibility too.)

On the flip side, if you have top-notch content in a local language, realize that you might currently be competing in a less crowded field for that AI’s attention. For instance, fewer Thai-language websites might be optimized for AI, so a Thai generative search might lean heavily on whatever it can find. This could be an opportunity where early adoption of GEO tactics in non-English content could make you the go-to source that the AI grabs. Of course, the AI’s abilities in Thai might not be as good, so it may summarize less accurately or choose weird sources. As always, quality matters: well-structured content in any language should, in principle, be favored by AI if the AI is searching that language.

Some local AI models or engines (like Baidu’s Ernie in China) have their own ecosystems. Optimizing for those might involve different platforms (e.g., being active on the Chinese Q&A site Zhihu should help being featured in a Chinese AI model that cites Zhihu, akin to how Western ones cite Reddit). So “GEO” isn’t one-size-fits-all globally; it might branch into specializations for each language’s dominant AI systems.

Writing for humans and SEO versus writing for AI and GEO. (Napkin)

GEO Startups and Services

Disclaimer: I can’t vouch for any companies listed here. I haven’t tried them, but they illustrate GEO's growing importance and where to find help.

Tech gold rushes spawn toolmakers and consultants. In the 2000s, SEO agencies proliferated with promises of “Number 1 on Google guaranteed!” Now in 2025, Generative Engine Optimization (GEO) startups surge similarly, promising to crack AI algorithms and get brands mentioned by ChatGPT.

Profound pioneered this space, positioning itself as an AI visibility data powerhouse. CEO James Cadwallader calls the AI search shift a “Game of Thrones power shift” — a dramatic yet accurate description of information distribution changing hands. Profound raised $20 million to tackle what VCs called a “hair on fire problem” for urgently worried brands. Profound tracks brand mentions across AI answers, in a strongly analytics-based manner. They analyzed 30 million citations to identify patterns. Their dashboard might report: “Your competitor appeared in 15% of 'best CRM software' ChatGPT answers; you appeared in 0%.” They provide source analysis and recommendations following their playbook: Analyze, Create, Distribute, Measure, Iterate. One alleged success: a staffing company increased its AI presence from 0% to 11% within hours after publishing a targeted piece. I distrust such cherry-picked stories, but they demonstrate GEO's potential. Profound discovered “eatthis.com” disproportionately influenced fast-food AI answers, which is useful intelligence for a company in that space.

Bluefish AI raised $5 million, focusing on brand control and safety. They track AI performance, manage brand safety (ensuring AI doesn't misrepresent you), and facilitate AI engagement. If AI spreads misinformation about your company, Bluefish alerts you to correct the source. That’s a new PR frontier. CEO Alex Sherman calls AI search a marketing “wake-up call.” CMOs were caught unprepared when traffic shifted from Google to AI referrals. Bluefish noted Reddit ranks highly as a third-party source across customers, confirming community sites' influence on AI answers. They offer integrations to “inject” approved messaging — hopefully not creating pay-for-play AI advertorials.

Athena (AthenaHQ) graduated Y Combinator with $2.2 million funding. They brand themselves the “world's fastest generative optimization platform,” claiming 70+ customers and 10× AI traffic boosts. Athena ingests millions of AI responses, mapping them to 300,000+ citation sources. They analyze client content gaps: “Here’s how AI sees your niche; here’s what it’s missing from you.” Co-founder Alan Yao calls it “actionable intelligence that turns AI citations into a repeatable growth channel” — buzzworthy but clear. One customer noted, “Over 30% of inbound leads research with ChatGPT before contacting sales.” Companies must consider: What do prospects ask ChatGPT before visiting our site? How do we appear in those answers? Athena automates discovering these questions and necessary content adjustments

Others. Dozens more GEO players exist: Goodwriter/Goodrank, Semrush's AI monitoring, NoGood's AI Lab. Traditional SEO platforms add AI features too.

A gold rush indeed. Credible players combine data, strategy, and execution to answer: What AI questions relate to my business? Where does AI source information? How can I appear more frequently?

Measuring success remains difficult. AI results are probabilistic because identical prompts generate different answers. These companies might resemble early SEO consultants, claiming credit for improvements that would occur anyway or can’t be definitively attributed. Caveat emptor.

GEO consultants advertising for business may resort to scare tactics and present case studies that do not generalize. (ChatGPT)

However, given how new this is, even measuring the baseline is valuable. If nothing else, a dashboard that says “Your brand appeared in 5% of AI answers about Topic X this week” is a new KPI that CMOs should track. It’s the new share-of-voice metric. Those who jump on GEO early can learn and adapt, while those who ignore it might find themselves invisible in the chatbot era.

GEO Metrics

The shift toward AI-synthesized answers necessitates a change in how performance is measured. Traditional SEO metrics, which are heavily reliant on clicks and website traffic, are no longer sufficient to capture the full value of visibility in a “post-click” or “zero-click” AI environment.

The fundamental premise of GEO is that success can be achieved even if a user never clicks through to a brand’s website. An AI can cite a brand’s data, mention its product as a solution, or use its content to formulate an answer, delivering significant value in the form of brand awareness, authority building, and user influence without a single click being registered by your website analytics.

Click metrics are old school. AI citations are the new gold. (ChatGPT)

Relying solely on metrics like organic traffic, click-through rate (CTR), and keyword rankings will provide an incomplete and potentially misleading assessment of a brand’s performance in AI-driven channels. A strategy that successfully earns citations in AI Overviews might appear to be failing if judged only by a flat or declining organic traffic trend. New KPIs are required to measure this new form of visibility.

Return on investment (ROI) should also be rethought. The value of GEO is often indirect, shifting the measurement framework from direct ROI to attributed ROI. A user might see a brand cited by an AI, not click the provided link, but then perform a branded search for that company later that day. Or, they might simply develop a more positive perception of the brand’s authority, which influences a purchasing decision weeks later.

Moving from an Internet where “Click Is King” to one where “Mentions Are Money” (ChatGPT).

This user journey breaks traditional last-click attribution models. The solution is to adopt more sophisticated measurement that connects investment in GEO with lagging, brand-level indicators. Tracking changes in branded search volume, direct website traffic, and even sales cycle velocity can help attribute value back to the initial, non-clickable AI mention. Data suggests that visitors who do arrive via AI citations are often highly qualified, converting at rates 12–18% higher than traditional organic traffic, indicating the powerful pre-qualification that occurs within the AI interface.

Users referred by an AI recommendation (or even mention) have high conversion rates, showing the value of AI mentions. (ChatGPT)

We need a new dashboard of metrics for GEO, including the following (and likely more that are still to be discovered):

Citation Frequency: The primary metric for GEO. This tracks how often a brand, its content, or its data is cited as a source in AI-generated responses across platforms like ChatGPT, Perplexity, and AI Overviews.

Share of Voice (SOV) in AI: A measure of a brand’s visibility within AI answers for a given set of topics or queries, relative to its competitors.

Sentiment and Accuracy of Mentions: Qualitative analysis of how the brand is being portrayed in AI responses. Are the mentions positive? Is the information accurate?

Referral Traffic from AI: When AI platforms do provide clickable source links, tracking the volume and quality of this referral traffic is a direct measure of impact.

Branded Search Lift: Monitoring the volume of branded search queries over time to identify correlations with increased visibility in non-clickable AI answers.

We will all need to learn how to interpret an entirely new set of analytics data to track our GEO. (ChatGPT)

There may not be an easy way to compare old metrics from the search-and-human-readers world with the new AI metrics. (ChatGPT)

Will AI Optimization Ruin Answer Quality? (Lessons from SEO Spam)

It’s a tale as old as search engines: once people figure out how the ranking works, they game it, sometimes at the expense of quality. In web search, this led to SEO spam: link farms, keyword stuffing, low-value pages that exist solely to grab clicks. Google spent decades adjusting algorithms (Panda, Penguin, etc.) to penalize those tactics and reward genuinely useful content. Now, with AI-generated answers, do we face the same risk? Will Generative Engine Optimization degrade AI response quality? And are the AI companies aware of this danger?

The risk is real. We’re already seeing early glimpses. Unscrupulous actors can repost others’ content and, if the original is less accessible to the AI, the AI might “think” the duplicate is the source. This is the kind of thing SEO spammers did: scrape content to get ad traffic. Now the motivation could be scraping content to get AI citation traffic (or just bragging rights). AI companies need to close such loopholes fast, or we’ll get a race to the bottom with copycat content.

History is likely to repeat itself with GEO, with some companies chasing listings at the cost of quality. AI tools should not let themselves be taken by surprise but should learn from the sad tale of SEO gone rogue. (ChatGPT)

The major AI providers are certainly aware of these issues. They don’t want their answers to become nonsense or their credibility to tank. Google, for one, has explicitly stated that its Search Generative Experience is “rooted in our core search ranking and quality systems.” They still use things like Spam detection, PageRank, the Helpful Content system, and other ranking signals to decide which sources to trust in AI overviews. If a website was flagged as low-quality by normal Google Search, it’s unlikely to be prominently cited by Google AI. Google’s stance on AI-generated web content is also telling: they’ve updated policies to penalize unhelpful AI-written content. So if people auto-generate a bunch of fluff articles just to try to get cited, Google’s systems may demote those as spam, thus keeping them out of the AI answers as well. Essentially, Google is leveraging its years of search quality experience as a defense mechanism.

AI needs ruthless countermeasures against GEO spam. (ChatGPT)

OpenAI and others face a tougher challenge because ChatGPT wasn’t originally built with a notion of “reputable sources,” but just generates text from patterns. Now that ChatGPT can browse and cite, OpenAI might not have the full ranking stack that Google and Bing have. They will need to beef this up, perhaps by integrating more of Bing’s ranking or developing their own credibility scoring.

There’s also the concern of GEO itself creating low-quality content. If everyone starts publishing “AI optimized” pages with the sole aim of getting cited, we might see content that’s phrased in a stilted, generic way, just to appeal to the AI. For instance, a bunch of sites might create “Top 10 whatever” listicles not because users need them, but because AI answers love listicles. This could lead to a glut of redundant or shallow content, which the AI would then have to sort through. It’s the same pattern as thin affiliate websites that polluted Google.

A particularly insidious black hat tactic is to copy good content from other websites and publish it as if it were the hatter’s own. Search engines have not always been good at linking to the original source of an article, particularly if content is republished (with or without permission) on popular sites. Hopefully, AI can get smart enough to recognize, for example, the author’s voice and discern which of several copies is likely to be the original that should be credited as the source. (ChatGPT)

The big AI companies are motivated to prevent this because, unlike a search engine, which could still make ad money while you sift through junk results, an AI answer that’s bad directly erodes user trust in the product. If ChatGPT or Perplexity consistently provided incorrect or low-quality answers due to GEO-gamed content, users would quickly abandon them. Since these companies generate revenue directly from user subscription fees (and thankfully not from ads yet), losing users means losing money. So expect rapid countermeasures: AI models might learn to evaluate source quality beyond just relevance. They might cross-verify facts with multiple sources, thus nullifying single-page spam attempts.

Interestingly, the GEO startups themselves have a stake in maintaining quality. If their advice led clients to churn out spammy content that got slapped down by AI algorithms, their value proposition dies. So the smarter GEO folks emphasize quality content, genuine engagement in communities, and factual accuracy, basically, the equivalent of white-hat tactics in SEO. It’s in their interest that AI answers remain good and their clients become part of those good answers, not part of the problem.

AI engines must evolve defensive mechanisms to neutralize attack vectors. (ChatGPT)

The user experience must remain paramount for both AI services and GEO-chasing brands: The quality of answers is the product. If GEO tactics ever start to harm that UX, expect the AI makers to adjust algorithms ruthlessly – analogous to Google’s early 2010s crackdown on content farms that degraded search result usability. It’s a delicate balance: we want content creators to feel motivated to supply great content that AI can use (so that AI answers stay fresh and correct), but we don’t want them to manipulate the system with junk that the AI naively includes.

My advice: If you’re optimizing for AI, do it in a way that genuinely provides value. That usually aligns with being cited and being correct. If you try black-hat tricks (say, stuffing a ton of question keywords invisibly on your page or auto-generating 100 Q&A pairs of dubious quality), it might work for a brief moment, but you’ll likely get weeded out as the AI gets smarter. (Smarter AI every month is the one thing we can count on in this field.)

At the end of the day, an AI citation brings a user’s attention. When a user follows that citation (and yes, users do click them out of curiosity or doubt), they had better land on a page that satisfies. If not, the AI will eventually learn that your page wasn’t actually helpful. The future of GEO will reward those who earn citations by being the best answer, not just the most aggressively optimized one.

Future-Proofing Your Content Strategy

AI denial is the one strategy sure to fail. (ChatGPT)

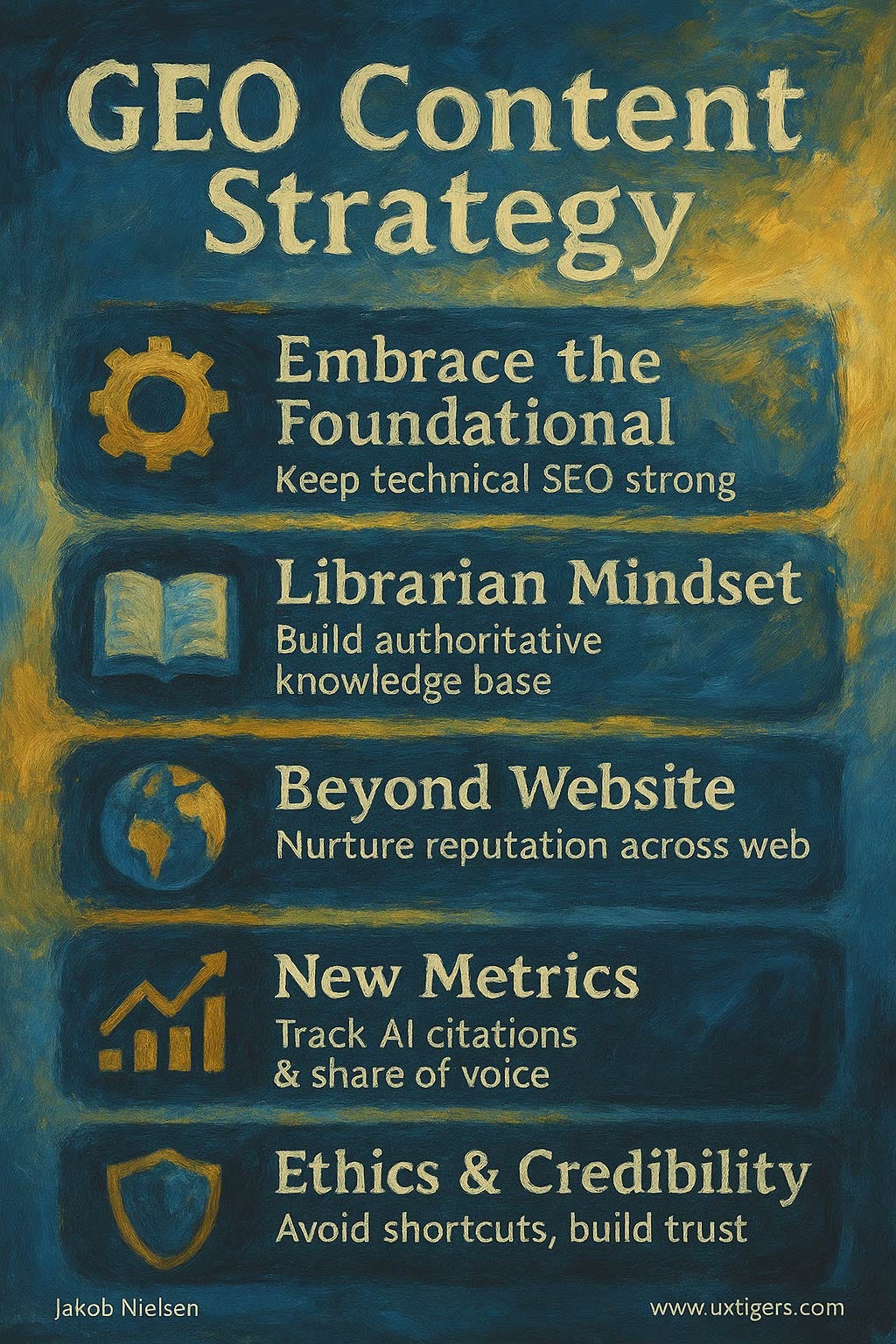

AI is here to stay. It will get smarter, and we don’t know how users will be using superintelligence in 2030. But the following core ideas can serve as a roadmap for future-proofing a brand’s digital strategy for AI and GEO:

Embrace the Foundational: Do not abandon traditional SEO. Instead, double down on technical excellence, high-quality content, and building authority. SEO is the price of entry for being considered by the generative engines that are built upon it.

Think Like a Librarian, Not Just a Publisher: The strategic goal is no longer simply to publish pages but to build a comprehensive, authoritative, and well-structured corporate knowledge base. Your objective is to become the most reliable and citable source in your niche, the one that AI models turn to for trustworthy information.

Invest Beyond the Website: A significant portion of the signals that generative AI uses to determine trustworthiness exists outside your owned properties. Allocate resources to actively manage your brand’s reputation and presence across the entire digital ecosystem, including review sites, forums, social media, and digital PR.

Invest beyond the website to build the foundation for strong AI representation. (ChatGPT)

Adapt Your Measurement Framework: Move beyond click-based metrics. Begin building the capabilities to track new KPIs like citation frequency and share of voice in AI. Develop attribution models that can connect investment in GEO with lagging but crucial brand-level indicators like branded search lift and customer conversion quality.

Prioritize Ethics and Credibility: In an era of AI synthesis and potential misinformation, credibility is a brand’s most valuable asset. Avoid shortcuts and “black hat” tactics. (AI will get smart enough to see through them.) Focus on building genuine, verifiable authority. In the new landscape, your reputation is not just a brand attribute; it is a direct and powerful ranking factor.

Explainer video about this article (YouTube, 3 minutes).

5 guidelines to adapt content strategy for Generative Engine Optimization (GEO). (ChatGPT)

About the Author

Jakob Nielsen, Ph.D., is a usability pioneer with 42 years experience in UX and the Founder of UX Tigers. He founded the discount usability movement for fast and cheap iterative design, including heuristic evaluation and the 10 usability heuristics. He formulated the eponymous Jakob’s Law of the Internet User Experience. Named “the king of usability” by Internet Magazine, “the guru of Web page usability” by The New York Times, and “the next best thing to a true time machine” by USA Today.

Previously, Dr. Nielsen was a Sun Microsystems Distinguished Engineer and a Member of Research Staff at Bell Communications Research, the branch of Bell Labs owned by the Regional Bell Operating Companies. He is the author of 8 books, including the best-selling Designing Web Usability: The Practice of Simplicity (published in 22 languages), the foundational Usability Engineering (28,699 citations in Google Scholar), and the pioneering Hypertext and Hypermedia (published two years before the Web launched).

Dr. Nielsen holds 79 United States patents, mainly on making the Internet easier to use. He received the Lifetime Achievement Award for Human–Computer Interaction Practice from ACM SIGCHI and was named a “Titan of Human Factors” by the Human Factors and Ergonomics Society.

· Subscribe to Jakob’s newsletter to get the full text of new articles emailed to you as soon as they are published.

This is hands down the best and most comprehensive material I’ve read on this topic to date. The breakdown of which LLMs prefer which sources is especially helpful as is the variety of tables which break down best practices and classifications.

Well done. This should be a textbook, but those kinds of things get outdated pretty fast in this genre. Still—this is a public service!

I love this article because it asks and answers so many questions fairly well, despite the frothy nature of the GEO scene. I especially like that you discus those who are addressing the hard problem of visibility into performance.

The following point got me thinking: it seems you suggest that content aggregators are doing something nefarious or dishonest:

“… the AI might “think” the duplicate is the source. This is the kind of thing SEO spammers did: scrape content to get ad traffic. Now the motivation could be scraping content to get AI citation traffic (or just bragging rights). AI companies need to close such loopholes fast…”

Does it really matter to the user who the primary source is, as long as they get what they needed?

Aggregating a lot of information is also a benign good. Think analysis, reviews, and explanations that are a far cry above stuffing links into a page to get clicks. Maybe it’s not a loophole, but a shortcut.