Multi-Craft Specialized Content in the AI Era

Summary: AI won’t replace human creativity; it will amplify it. Forget mass-market entertainment: the true revolution is in hyper-targeted content experiences that seamlessly weave together text, music, images, and film. Authorship will be unleashed by eliminating the need to master individual media crafts.

More media forms are being conquered by generative AI every month. It started with simple prose text. Then came poetry, such as my version of the 10 usability heuristics as haiku. We had primitive graphics in early 2023 and good graphics starting with Midjourney version 6 in December 2023. Music and songs followed, from Suno. (Listen to my usability song and heuristics song on Instagram, made with Suno. You may need to click the unmute button.)

Now that character persistence and text-in-images are improving, we can stitch together images from Midjourney or DALL-E to create comic strips. See the example later in this article.

Finally, the announcement of OpenAI’s Sora on February 15, 2024, opens the entire world of filmed media, from movies to animation, to AI generation.

The golden age of Hollywood will repeat, but this time the stars will be artificial, meaning that they can be prettier than any human and never flub their lines. The entire film crew will be AI, from writer to director to camera staff. (Midjourney)

To appreciate the pace of change, compare the stampede of woolly mammoths generated by Sora (released February 16, 2024) with the walking tiger I made (released January 1, 2024):

Woolly Mammoth made with Sora (YouTube)

Walking Tiger made with Leonardo (Instagram)

The tiger was impressive for the state of AI-generated video when I made it two months ago, and it’s still impressive that I could make an animation with one-click prompting: I had already made a still image of a tiger in Leonardo and simply clicked a button to animate it. Leonardo figured out that a decent animation for a tiger would be to have the animal walk down the jungle path. Still, compared with OpenAI’s woolly mammoths, my tiger is primitive and not far removed from the simple image-panning animations that dominated AI video a few months ago. (There are also unwanted artifacts in the animal’s paws as it walks.)

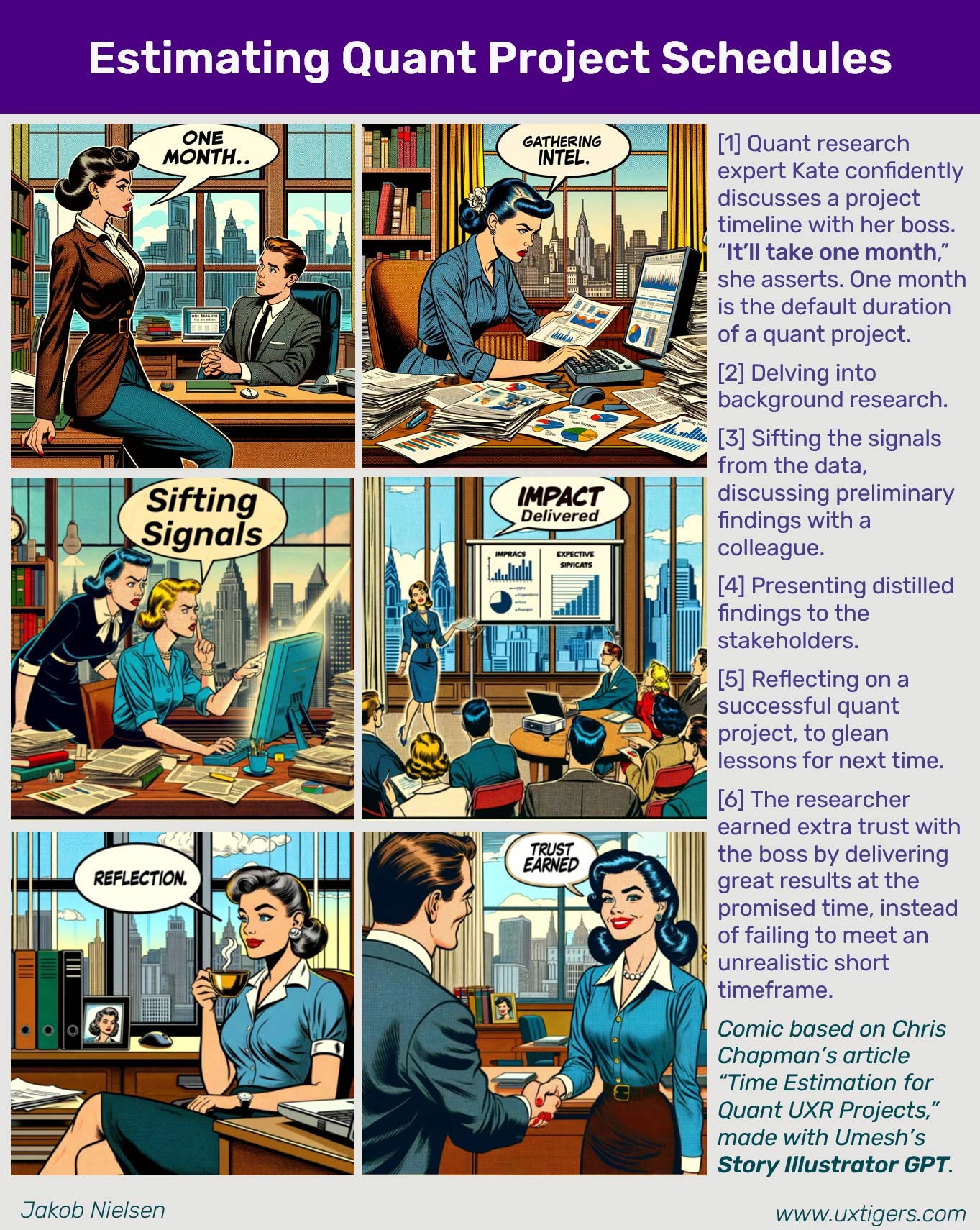

Example: Comic About Project Schedules for Quantitative User Research

Can there be a more UX-nerdy topic than project time estimates for quantitative user research? I count at least 3 levels of nerdiness: it’s quant, we’re talking project planning, and it’s a matter of time estimates. Any normal person would stop reading at any of these qualifiers, and even UX freaks would usually only make it through two of them.

Can we make this topic more approachable? Yes, with an old-school comic strip in the style of Silver Age comic books. So that’s what I did:

I made this comic strip using Umesh’s Story Illustrator GPT, setting the style to “1950s comics.”

AI Content Quality: From Bad to Good to Great

If you listened to my songs made with AI, you probably concluded that they’re a far cry from The Beatles. Similarly, while the characters in my comic strip are nicely drawn, the character persistence is not complete, and we’re missing the panel where our hero collects her actual data. (The AI plotline is heavy on prep, analysis, and the aftermath, possibly because these steps offer more drama than watching 40 users navigate websites.)

For sure, social media is full of people deploring the low content quality currently produced by AI. When I created 6 alternate illustrations for an article and asked for reader feedback, several respondents declared them all to be bad.

However, AI progress is fast and seems to be highly dependent on compute availability. New AI-optimized chips are being developed, and more fabs are being built. The current shortage of training and inference compute will gradually ease over the next year or two, after which we’ll see stunning improvements in content quality.

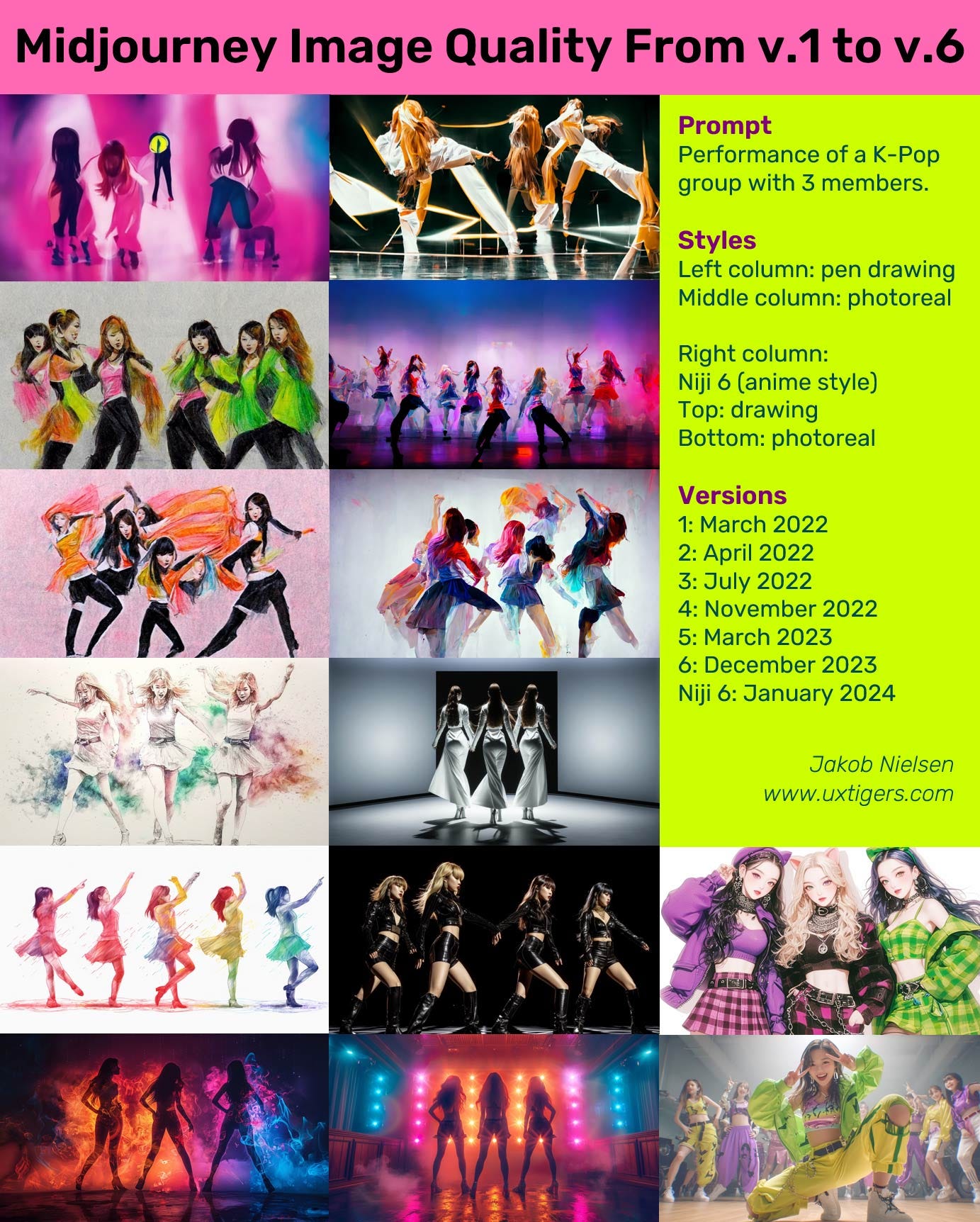

For an example of how far we’ve already come in just two years of image generation, see this progression of images made with the same prompt in Midjourney from March 2022 to January 2024:

Midjourney has improved its image quality and prompt adherence dramatically in only two years. Versions 1-3 were practically useless. Version 4 (which launched November 2022) and 5 (March 2023) were decent, and version 6 (December 2023) now creates stunning images, as shown in this simple comparison of the same prompt used with the different versions. The early versions couldn’t even count to 3, and version 6 still makes many mistakes, so I expect better from version 7. [Feel free to copy or reuse this infographic, provided you give this URL as the source.]

Niche Authoring Will Rule

The basic craft of producing any media form will soon enough be done better by AI than by humans. Right now, AI work has flaws that become apparent upon close inspection. That is already an improvement over last year, when AI work was blatantly bad.

We won’t have a fully AI-generated Hollywood movie or BBC show for maybe 10 more years. But a Netflix level of quality might be achievable within 5 years.

AI-generated movie stars and camera crew ensure basic craftsmanship: the images will always be in focus and look good. But what will our artificial actress be saying? (Midjourney)

The basic craft will be in the can, whatever your preferred media form. But this raises the question of what all this content will communicate. Again, social media overflows with doomers. It’ll be nothing but C movies. AI can’t even make B movies, they say.

Wait 10 years, I say.

However, asking for B movies, or even A-list Hollywood blockbusters, is to misunderstand the AI revolution in content creation.

As I have already shown you with examples of my own creations in several media forms, from poetry to songwriting to comic strips, the value comes from highly specialized, targeted content. The long tail, in other words. The cumulative revenue from niche products will surpass the sales generated by traditional mass-market blockbusters, even if each of these few bestsellers generates high profits.

The long tail is the principle that only a few blockbuster productions will have huge audiences, whereas there’s much more niche content that satisfies the needs of smaller, specialized audiences. (Midjourney)

For the next 10 years, AI content won’t have the ultimate polish achievable by having hundreds of specialized human experts collaborate on crafting one piece of media. However, this old-school production method was limited to mass-market creations. Barbie movie, yes. Celebrating the 10 usability heuristics, no. Teaching the 10 heuristics, even less.

Niche content will rule. And the niche within the niche will be even better. Don’t think UX content in general. Think Jakob Nielsen’s take on UX. Think Jakob Nielsen’s explanation of his heuristic number 7.

We will experience a flood of individual creators making highly niche content, in video and other media. (DALL-E)

In 2021, the Wall Street Journal ran a series of exposés about the Columbia University Film School, revealing that master’s degrees costing about $300,000 often landed graduates with jobs as dog walkers or basically anything other than a solid Hollywood job. Film school graduates were deemed ‘Financially Hobbled for Life.’

Maybe fortunes have finally changed for these hapless film school graduates. No, they still will never work in Hollywood, which tends to prefer staff who combine storytelling talent with hands-on experience cranking out commercial movies rather than art projects. But they might well find jobs overseeing AI video production for mid-range projects that aim at higher production values than video clips made by amateurs like me.

Automated UI Design

User interfaces are one of the most complex media forms because they need to present an interactive environment where users can manipulate their own data in unexpected ways. This does not mean that AI can’t help with UI design. It simply means that we’re unlikely to get high usability or polished designs from short prompts.

AI editors will likely soon be able to create user interfaces, automating much of the legwork. Standard design patterns will be inserted where appropriate and can then be modified if needed by a human designer.

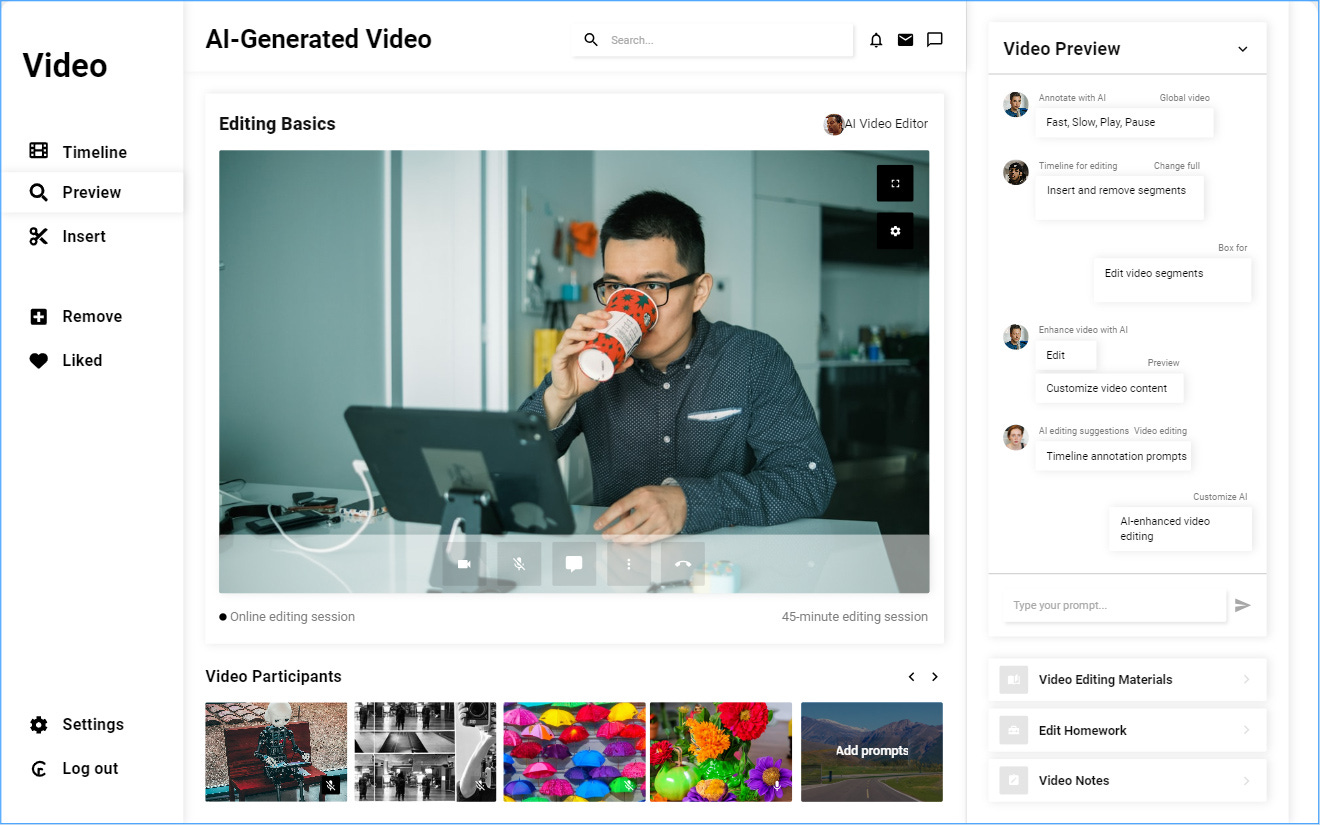

AI-generated first draft of a mockup of a user interface for editing AI-generated video clips. I doubt that anybody would ship this as a final video editor, but it forms a starting point for a human designer. It’s always easier to modify a first draft than to create from a blank page. (Made with UIzard.)

Delta Prompting Needed

The naysayers have one point: it is harder to modify AI-generated video than AI-generated text or images, which can be edited in a regular word processor or image software.

We need ways for authors to change an AI-generated object by specifying what they want to be changed. It’s unacceptable to have to start over from scratch with a new prompt.

We need to be able to apply new prompts that describe changes we want made to an existing object. These could be global changes to the mood of a scene in a video, or more local changes to a shorter segment. For example: “zoom in on the lead character’s face during these 5 seconds.”
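To make the idea of a locally scoped delta prompt more concrete, here is a minimal Python sketch. It assumes a video is simply a list of frames at a fixed frame rate and that the requested change (such as a zoom) can be expressed as a function applied to a single frame. The names `apply_delta_to_segment` and `edit` are hypothetical illustrations, not the API of Sora or any other AI video tool.

```python
from typing import Callable, List, TypeVar

Frame = TypeVar("Frame")

def apply_delta_to_segment(frames: List[Frame], fps: float,
                           start_s: float, end_s: float,
                           edit: Callable[[Frame], Frame]) -> List[Frame]:
    """Apply `edit` only to frames whose timestamp lies in [start_s, end_s).

    Frames outside the window are returned unchanged, so the rest of the
    clip does not have to be regenerated from a new prompt.
    """
    result = []
    for index, frame in enumerate(frames):
        timestamp = index / fps          # seconds since the start of the clip
        if start_s <= timestamp < end_s:
            result.append(edit(frame))   # hypothetical local change, e.g. a zoom
        else:
            result.append(frame)         # untouched outside the segment
    return result

# Usage with stand-in "frames" (integers) at 2 frames per second:
clip = list(range(10))
print(apply_delta_to_segment(clip, fps=2, start_s=1, end_s=3, edit=lambda f: f * 100))
# [0, 1, 200, 300, 400, 500, 6, 7, 8, 9]
```

In this sketch, a global change to the mood of a scene is just the special case where the window covers the whole clip; the point is that the edit is scoped, not that a real tool would process frames one at a time.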

The better image-generation tools, such as Midjourney, currently offer a limited form of delta prompting in the form of inpainting (called “vary region” in Midjourney). The user can select an area within the image and ask the AI to regenerate those pixels while leaving the rest of the image unchanged. The following before-after pair of images shows an example of how this feature can be useful when we have a picture where we’re satisfied with the overall composition but need one element to be changed.

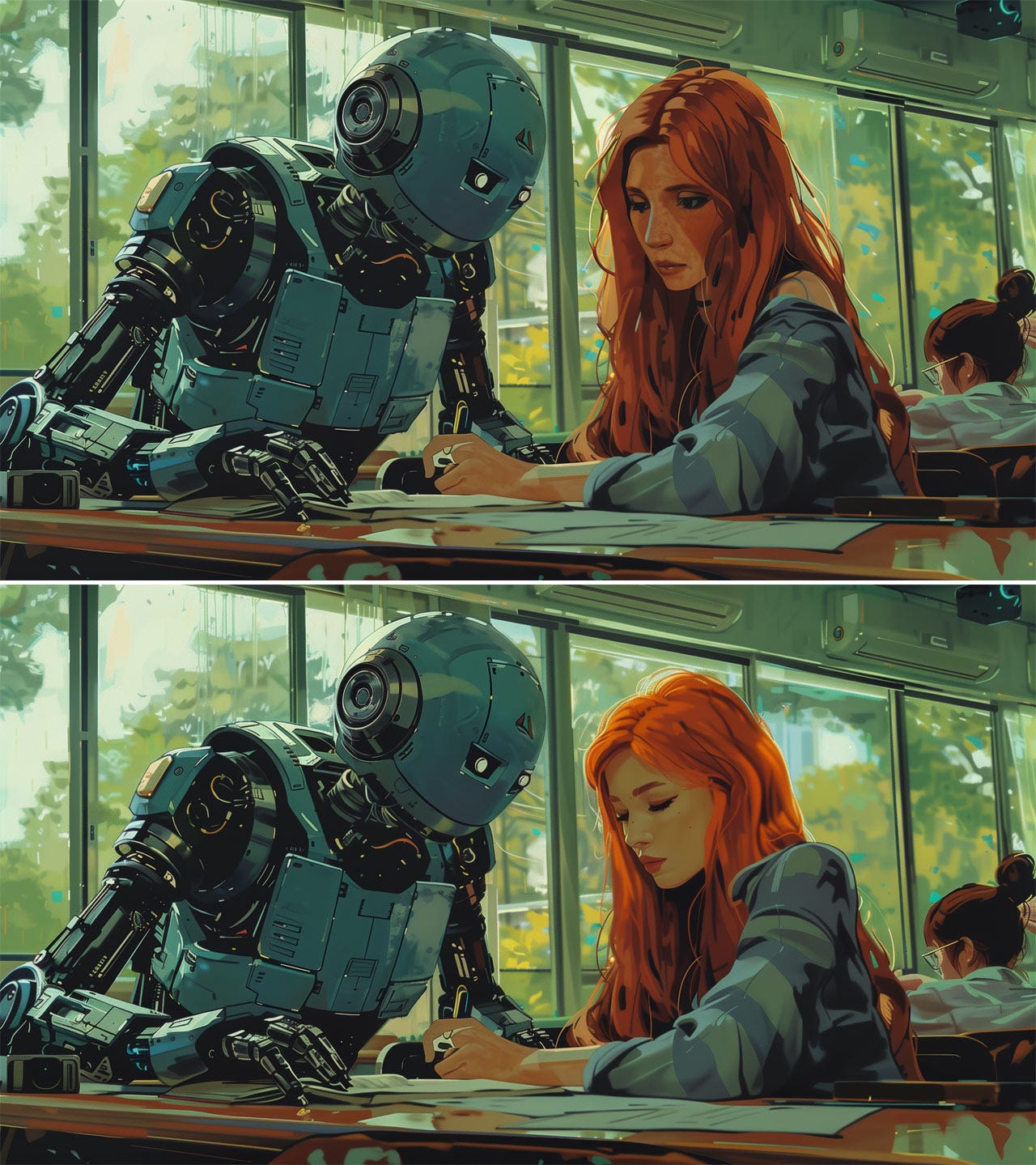

An image I made with Midjourney for a forthcoming piece about having AI inspect your work. In the original image (top), the human designer looks too glum to accept even constructive feedback from the AI. I defined an inpainting area around her face and asked Midjourney to recreate this area with a more accepting facial expression (bottom).
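Conceptually, inpainting behaves like a masked merge: the AI produces new pixels, but only those inside the user-selected region are kept. The minimal Python sketch below assumes an image is a grid of RGB tuples, the mask is a same-sized grid of booleans, and `regenerate` is a hypothetical stand-in for the AI model. It illustrates the principle only and does not claim to describe how Midjourney implements “vary region.”

```python
from typing import Callable, List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B)
Image = List[List[Pixel]]      # rows of pixels
Mask = List[List[bool]]        # True = inside the selected region

def inpaint_region(image: Image, mask: Mask,
                   regenerate: Callable[[Image], Image]) -> Image:
    """Keep original pixels outside the mask; take new pixels inside it."""
    if len(mask) != len(image) or any(len(m) != len(r) for m, r in zip(mask, image)):
        raise ValueError("mask must have the same shape as the image")
    # Hypothetical model call: returns a full candidate image of the same size.
    candidate = regenerate(image)
    return [
        [new if selected else old
         for old, new, selected in zip(orig_row, cand_row, mask_row)]
        for orig_row, cand_row, mask_row in zip(image, candidate, mask)
    ]

def all_white(img: Image) -> Image:
    """Stand-in 'model' that simply paints every pixel white."""
    return [[(255, 255, 255)] * len(row) for row in img]

# Usage: a 2x2 gray image where only the top-left pixel is selected.
gray = [[(128, 128, 128)] * 2 for _ in range(2)]
mask = [[True, False], [False, False]]
print(inpaint_region(gray, mask, all_white))
# [[(255, 255, 255), (128, 128, 128)], [(128, 128, 128), (128, 128, 128)]]
```

Everything outside the mask comes straight from the original, which is why the overall composition survives while only the selected face is redone.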

Liberating Creators from the Limits of Craft

In the past, authorship was limited by the requirement to master the craft of creating in the author’s chosen medium. Want to write a poem? First, master the craft of rhyme and meter. Want to write song lyrics? Ditto, but with stricter requirements for the ability to match words with music. And composing the music itself? Wow, an even harder craft.

Listing the craft requirements to create illustrations, comic strips, or films would run even longer. Basically, no individual can make movies. Even an auteur director in the mold of Alfred Hitchcock requires a supporting staff of hundreds of specialists in individual crafts. This meant that only authoring visions that could generate millions of dollars would be realized.

The notion of authorship is changing in the AI world. We’re moving from auteurs (possibly supported by hundreds of non-authoring individual workers) to co-creation between a human and AI.

Even more exciting, authorship now can inherently span as many media forms as you care to creatively combine. Even the most skilled triple-threat craftsperson in the past could only master a few crafts, and so could only combine a few forms of expression. Now, every individual can have it all.

Of course, we still come back to having something to say. There’s a bell curve of talent, as there has always been. Want to get a billion viewers? You must be in the top 0.001% of the talent pool for storytelling. But with 8 billion people in the world, that’s still 80,000 individuals. Many more than would ever get their proposal greenlighted for a Hollywood or Bollywood movie.

I would be happy with an audience of one million UX professionals. Currently, my most popular postings rarely reach much above half a million, and we all know that most of those impressions don’t represent a true audience.

My aspirations don’t matter to the world. But there are millions of people with some degree of talent in some specialized field. Liberating them from the limits of craft and the associated budgetary demands will unleash a wave of creativity and specialized content such as the world has never seen.

About the Author

Jakob Nielsen, Ph.D., is a usability pioneer with 41 years of experience in UX and the Founder of UX Tigers. He founded the discount usability movement for fast and cheap iterative design, including heuristic evaluation and the 10 usability heuristics. He formulated the eponymous Jakob’s Law of the Internet User Experience. He has been named “the king of usability” by Internet Magazine, “the guru of Web page usability” by The New York Times, and “the next best thing to a true time machine” by USA Today. Previously, Dr. Nielsen was a Sun Microsystems Distinguished Engineer and a Member of Research Staff at Bell Communications Research, the branch of Bell Labs owned by the Regional Bell Operating Companies. He is the author of 8 books, including the best-selling Designing Web Usability: The Practice of Simplicity (published in 22 languages), the foundational Usability Engineering (26,737 citations in Google Scholar), and the pioneering Hypertext and Hypermedia (published two years before the Web launched). Dr. Nielsen holds 79 United States patents, mainly on making the Internet easier to use. He received the Lifetime Achievement Award for Human–Computer Interaction Practice from ACM SIGCHI and was named a “Titan of Human Factors” by the Human Factors and Ergonomics Society.
