Generating Persistent Characters: Still Too Difficult

Summary: Generative AI for images does not draw a character with a consistent appearance across different illustrations, which is what UX persona illustrations, comic strips, and children’s books require. The illustrations might be acceptable for storyboards in UX design and movie planning.

Many use cases for AI-driven image generation require showing the same characters in multiple images. This is true for UX methods like storyboards and when we need several illustrations of the same persona. Persistent characters are even more necessary in many entertainment applications, such as comic strips. We can’t have Flash Gordon sport a widely different look in each comic book panel. A character like Charlie Brown from Peanuts wore the same T-shirt every single day from October 2, 1950, to February 12, 2000.

For 50 years, Charlie Brown wore a T-shirt like this in every single daily strip of Peanuts. This persistent appearance made the character instantly recognizable. (For copyright reasons, I’m not showing you an image of Charlie Brown, just a shirt similar to his, generated by Microsoft Designer.)

Unfortunately, it isn’t easy to make AI-driven image generation produce persistent characters, due to the probabilistic nature of the tools. That same randomness is great for creativity, since we get a different image every time, sometimes with unexpected results that are better than what we envisioned.

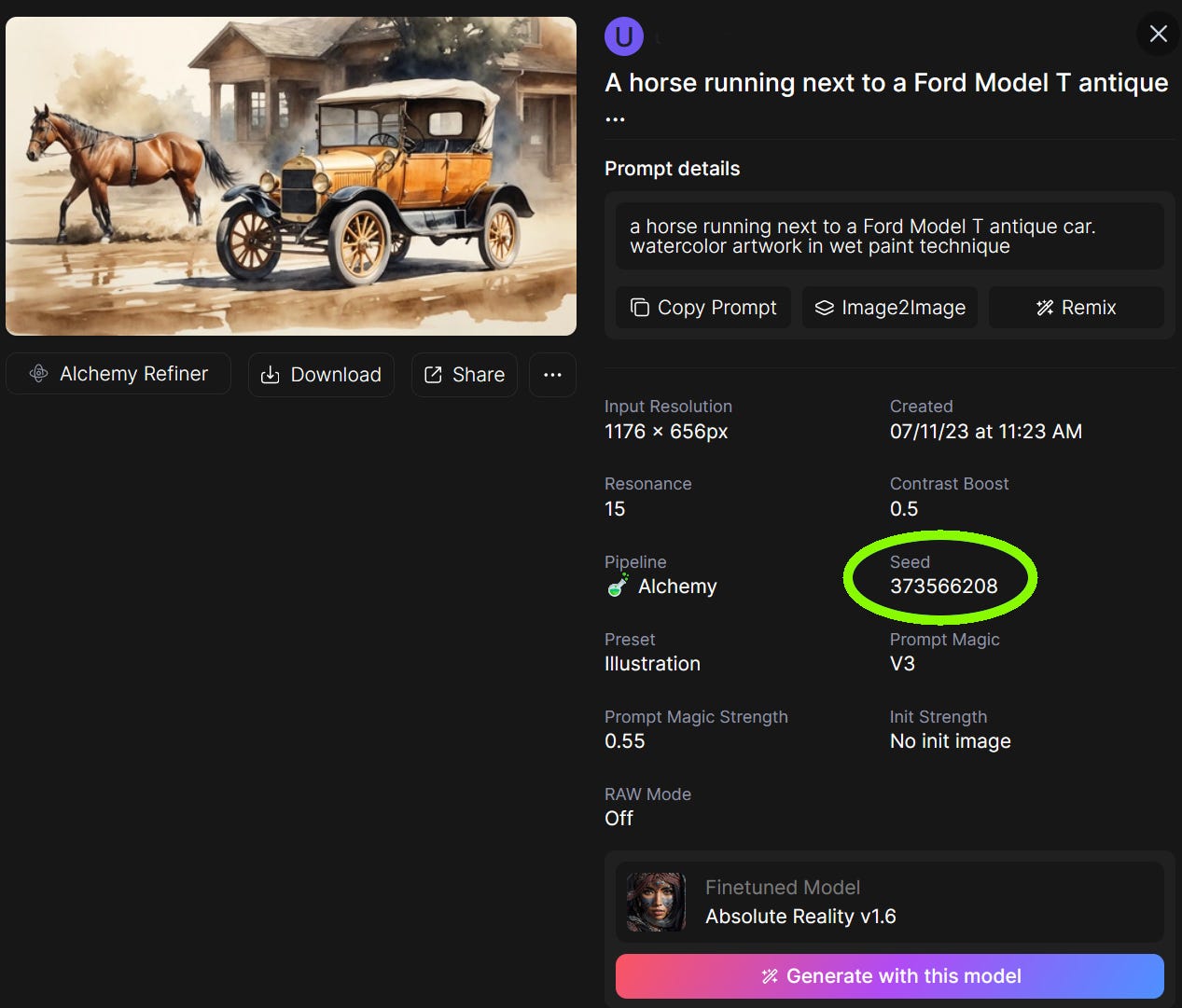

Until recently, the primary way to approach persistent characters in image generation was to control the seed, a random number that most tools use to vary their images. When the seed is held constant instead of random, the tools will generate similar results across prompts. However, manipulating seeds is a nerdy process. Some tools (like Leonardo) make the seed visible, whereas others (like Midjourney and DALL-E) hide it behind extra steps. Either way, seeds have low usability.

The seed is shown in this inspector window for an image generated by Leonardo. The user can copy this number and paste it into a field hidden under “advanced settings” in the image generation window.
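To make the seed idea concrete, here is a minimal Python sketch, assuming a diffusion-style generator that starts each image from random noise drawn from the seed. The functions `initial_noise` and `generate` are hypothetical stand-ins, not the API of Leonardo, Midjourney, or any other tool: they only show that a fixed seed reproduces the same starting noise, while a random seed gives each image a new starting point.

```python
import random
from typing import List, Optional, Tuple

def initial_noise(seed: int, size: int = 8) -> List[float]:
    """Hypothetical stand-in for the random starting noise of an image generator."""
    # A private generator seeded with `seed`: the same seed always yields the same numbers.
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]

def generate(prompt: str, seed: Optional[int] = None) -> Tuple[str, int, List[float]]:
    """Toy 'generator' (hypothetical): returns the prompt, the seed used, and its starting noise.

    A real tool would go on to turn this starting noise into an image guided by the prompt.
    """
    if seed is None:
        # Default behavior in most tools: draw a fresh random seed for every image.
        seed = random.randrange(2**32)
    return prompt, seed, initial_noise(seed)

# Holding the seed constant gives two different prompts the same starting noise.
_, _, gate = generate("princess at the castle gate", seed=1234)
_, _, breakfast = generate("princess at the breakfast table", seed=1234)
print(gate == breakfast)  # True

# Leaving the seed random gives each image its own starting point.
_, seed_a, _ = generate("princess at the castle gate")
_, seed_b, _ = generate("princess at the castle gate")
print(seed_a, seed_b)  # two (almost certainly) different seeds
```

In this sketch, a fixed seed pins down only the starting point; the prompt still steers what the generator makes of it, which is consistent with seeds producing similar, rather than identical, characters across prompts.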

A Fairy Tale Experiment in Musavir

Musavir.ai is a fairly new image-generation tool that advertises persistent characters as one of its key features. (It calls these characters “avatars.”) As an experiment, I tried to generate two different sets of illustrations for Hans Christian Andersen’s classic fairy tale, The Princess and the Pea. I wanted illustrations with a Danish princess (since Hans Christian Andersen was Danish, as am I) and a Korean princess (to experiment with a different look). Here are the two characters I generated in Midjourney:

Two characters generated by Midjourney for my experiments in illustrating a classic fairy tale: a Danish princess (left) and a Korean princess (right).

When using Musavir, you upload a portrait of your character and tell the tool to use it as the basis for a consistent avatar. So far, so easy.

Here are a few illustrations for the fairy tale generated in this manner. For each prompt, the Danish princess is on top, and the Korean princess is on the bottom. [Explanations in square brackets were not included in the prompt.]

(In case you have never read The Princess and the Pea, here’s a plot summary: A prince seeks a true princess to marry but finds the task challenging due to the difficulty in discerning genuine royalty. One stormy night, a young woman seeks shelter at the prince’s castle, claiming to be a princess. To test her claim, the queen places a pea in the bed where the girl is to sleep. The next morning, the girl mentions she had a sleepless night due to discomfort, proving her sensitivity and, by the story’s logic, her royal status. The prince, convinced of her authenticity, marries her.)

Prompt: A weary Danish/Korean princess standing at the gate of a Danish/Korean castle on a stormy night, drenched in rain, with a castle in the background. The scene is dramatic, with lightning in the sky and the princess looking exhausted yet noble.

Prompt: A restless Danish/Korean princess in a grand bed, unable to sleep [because of the pea], surrounded by luxurious bedding. The room is dimly lit, creating a mood of discomfort and unease, with the princess looking troubled and tired.

Prompt: The Danish/Korean princess at the royal breakfast table explaining her sleepless night, with a focus on her expressive storytelling. [She’s relating how the bed was so uncomfortable that she couldn’t sleep, which makes the Queen realize that she’s a real princess and thus approve of her marrying the Prince.]

Prompt: The Danish/Korean princess in her wedding gown.

Analysis: Inconsistent Characters

The characters were supposed to be persistent across prompts, but clearly, they are not. The Danish princess’s hair even changes shade from image to image, though she does stay blonde.

Each image has some internal consistency, respecting the country specified in the prompt: the Danish castle looks Danish, and the Korean castle looks Korean, even though the prompt text was the same except for the one word specifying the country. Similarly, the Danish Queen looks Danish, and the Korean Prince looks Korean, even though these two characters were not specified in the prompts.

Given that Hans Christian Andersen wrote The Princess and the Pea in 1835, I had envisioned a Joseon dynasty setting for my Korean court. Instead, I got a Prince wearing an operetta uniform straight out of Franz Lehár and a wedding gown more reminiscent of Kate Middleton than a Joseon-era Jeogui. But that’s my fault for not specifying a period setting in the prompts.

Closer to what I expected for the Korean princess’s wedding robe. (Leonardo)

Ultimately, Musavir doesn’t deliver sufficiently consistent character looks across prompts. I didn’t even attempt illustrations with multiple persistent characters (currently not supported) or where a character is expected to wear an identical outfit in different situations, as is the case in most comic strips. In these fairy-tale illustrations, it’s reasonable for the princess to dress differently when traveling, in bed, at the breakfast table, and for her wedding.

The current level of character persistence is acceptable for internal applications like UX storyboarding and movie-planning storyboards. These characters are questionable for UX personas because you want all “Mary” illustrations to look the same. And AI character persistence is definitely insufficient for illustrating children’s stories — just imagine a 4-year-old asking why the protagonist looks different on every page.

Character persistence in generative AI is better now than 8 months ago, when I started using these tools. It may be unreasonable of me to expect faster progress than we are currently getting, and maybe another 8 months will suffice to solve the problem. For now, it’s still too difficult to generate images that feature the same character.