UX Roundup: 1-Year Anniversary | Learn AI in 18 Minutes | Generative UI Prototyping | Uizard v.2

Summary: 1-year anniversary of Jakob Nielsen’s email newsletter | Engaging short video about generative AI basics | AI generates UI prototypes in 48 seconds | Uizard releases Autodesigner 2.0

UX Roundup for June 24, 2024. Celebrating the one-year birthday of my newsletter. (Ideogram)

Newsletter 1-Year Anniversary

After I stopped being a UX consultant in April 2023, I was free to write what I wanted without worrying about offending clients, big companies, or the many prudes that infect social media and like nothing better than inflicting their own morals on other people. After years in the salt mines of small-business profitability, I could think again.

I'm celebrating the one-year birthday of my newsletter. This image was made with Midjourney, which produces more interesting artwork than Ideogram, the tool I used for the hero image at the top of this newsletter. But Ideogram takes the cake for typography.

Initially, I published my articles on LinkedIn, where I had a built-in audience of fans who, for unknown reasons, had followed me even though I had spent the prior 10 years without any creative output. However, I quickly discovered that LinkedIn has no interest in supporting creators. Articles were good, my follower count was high, but pageviews were low. LinkedIn does not promote original work or thought leadership. I can still see this today: when I publish a thoughtful piece it gets almost no impressions, whereas mundane work receives broad exposure.

After two months, I pivoted to an email newsletter hosted on Substack, with articles also being permanently hosted on www.uxtigers.com. My first email newsletter went out on June 26, 2023, to 108 pioneering subscribers: Using AI Makes Businesspeople Optimistic About AI. This is still a useful article to read if you want to encourage AI adoption in your company. Note the sad image quality of my early Midjourney experiments used for the illustrations. (I had republished 5 earlier articles from my LinkedIn days during the previous 12 days to test out the platform, but since I hadn’t announced the newsletter, these proto-newsletters only went out to about 10 subscribers. I don’t know how those people found me.)

Here’s Leonardo’s birthday cake. Leonardo has many fun effects (which they call “elements”): here I used a combo of glowwave, glitch art, colorful scribbles, and sparklecore. But I completely abandoned any hope of getting Leonardo to spell. (This image was created with Leonardo’s traditional image models. See next image for the result of its newest model.)

Birthday cake made with Leonardo’s new ‘Phoenix’ model, which has improved prompt adherence and typography capabilities. I requested an impressionist painting, and the background complies, but the cake itself is not very impressionist, even though it’s probably the most appetizing of the bunch.

During this one year of newsletter publishing, I grew the subscriber count from those 108 early fans to 15,059. Is this a lot or a little? For comparison, I wrote a previous newsletter from 1997 to 2012, and one year after launch, it only had 3,182 subscribers. It’s hard to compare the AI boom with the dot-com boom. There are many more people on the Internet now, but competition is also fiercer. I can’t even count the number of competing newsletters about UX or AI (the two main topics I cover). Many of them are extremely good. On balance, I am fairly pleased that my new newsletter does almost 5 times better than my old newsletter.
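For readers who like to see the numbers behind “almost 5 times,” here is a back-of-the-envelope check using only the subscriber counts stated above (108 at launch, 15,059 after one year, and 3,182 for the old newsletter after its first year). The variable names are just illustrative labels.

```python
# Subscriber counts as stated in this newsletter (not independently verified).
new_launch = 108             # first send, June 26, 2023
new_after_one_year = 15_059  # one year later
old_after_one_year = 3_182   # earlier newsletter (1997-2012), one year after launch

# Growth of the new newsletter over its first year.
growth_factor = new_after_one_year / new_launch

# New newsletter vs. old newsletter, both measured one year after launch.
comparison_ratio = new_after_one_year / old_after_one_year

print(f"Growth over the year: {growth_factor:.1f}x")
print(f"New vs. old newsletter at one year: {comparison_ratio:.2f}x")
```

The comparison works out to roughly 4.7, which is what “almost 5 times” rounds up from.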

Here's the geographical distribution of my subscribers. I have subscribers in 140 countries. Clearly, UX fanatics live and work in every corner of the globe.

500 Instagram Images

A less important milestone is that I have posted 500 images to the UX Tigers Instagram channel, which shows that I generated more than 500 images for my articles this last year.

The main purpose of my Insta is to put all my images in one place, so that visually-oriented people can scan them. The channel is also a challenge: find a picture you like and try to predict why I made that image.

Screenshot from the UX Tigers Instagram channel showing that I don’t limit myself to a single visual style but pick what I think is nice for each story.

Intro to AI in 18 Minutes

As a subscriber to this newsletter, you may not need to learn the very basics of generative AI, but you probably know some holdouts who do. This recommendation is for them. Too many people either never used AI (thus relying on scaremongering, naïve media reports) or only played for a few minutes with substandard ChatGPT 3.5 because it was free.

If I were to give only one piece of advice to people who still need to learn about AI, it would be to try at least one small project with whatever is the true frontier AI model of the day. Currently, that’s ChatGPT 4o, but soon it’ll hopefully be GPT-5. Otherwise, Claude 4, Mistral XL, or whatever model will take the crown. I don’t care which company is the current frontrunner, but do use its model, not a weak old model, or you’ll get the wrong impression of AI’s capabilities.

I came across a really nice video by Henrik Kniberg. In it, he spends 18 minutes explaining Generative AI in a Nutshell. It’s an engaging presentation, which is surely needed to keep non-specialists glued to a video for 18 minutes.

And no, you won’t become an AI expert in 18 minutes. Nor can Henrik Kniberg tell you the best uses of AI in your job. Hands-on experimentation is needed for both of these goals.

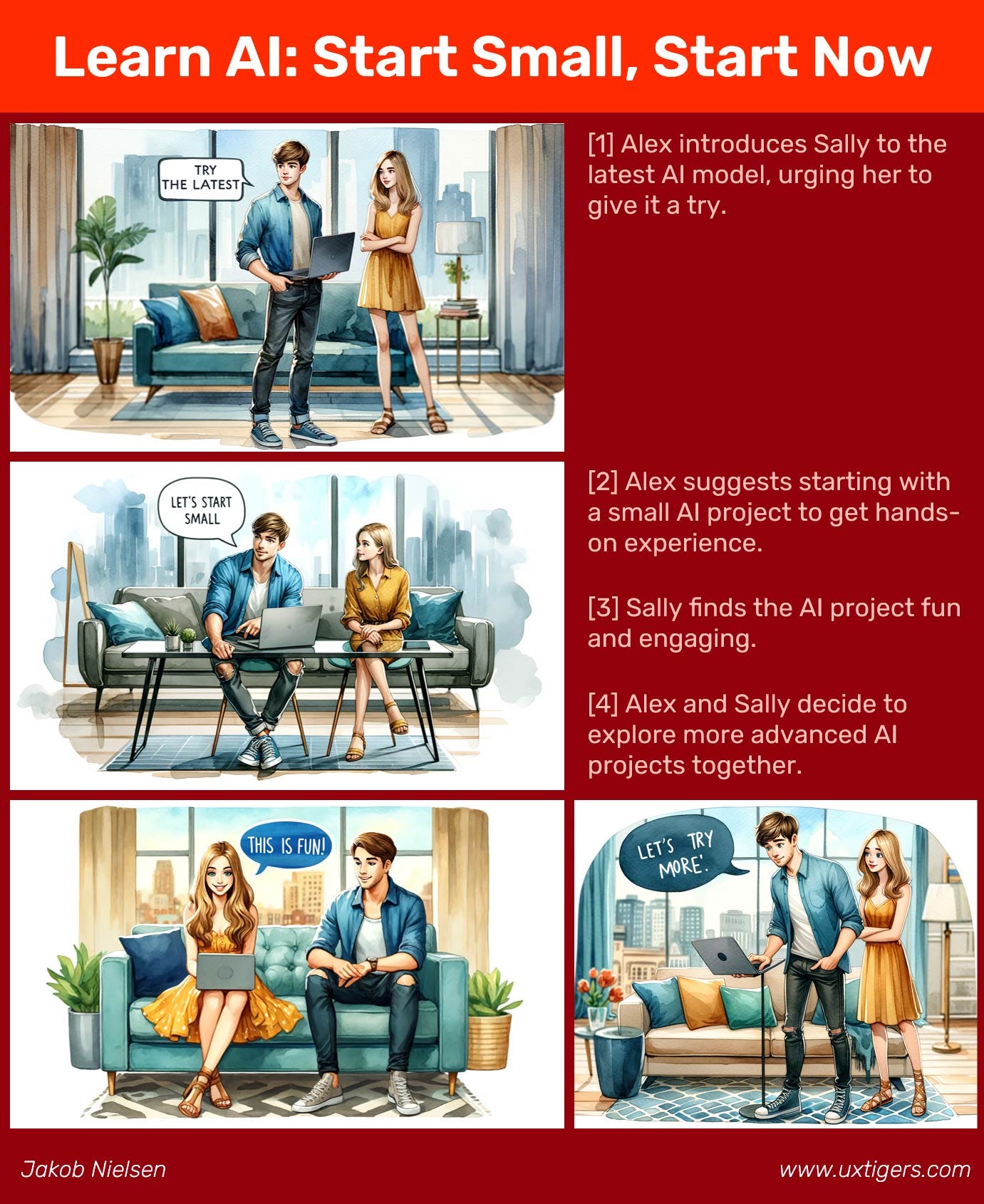

As an example of what you can do with current AI, I fed the above 4 paragraphs into Umesh’s Story Illustrator GPT and asked it to draw a small comic strip about learning AI. About one minute’s work gave me the following. (I spent more time on the layout in old-school graphic tools than on generating the story and images.) You can make a comic strip or a song in a few minutes about any topic you are discussing at work or school. (Currently, Udio and Suno seem to be the leaders in AI-generated songs.)

Sample comic strip about learning AI. Character consistency is less than ideal for Sally’s dress and the sofa (and it looks like the characters aged several years between panels 1 and 2), but I didn’t want to spend more time on this example of a very small AI project. (Story Illustrator GPT)

From Specs to Figma Prototype in 48 Seconds

Interesting video showing ChatGPT 4o generating prototype screens in Figma in 48 seconds, based on a software specification document.

Are these screens the final design that will ship to wide applause? No, they are clearly wireframes.

Do the screens have great usability? User testing will show! I can’t tell from the video since the screens seem to be in Chinese, and I don’t have access to those specs to see what the app is supposed to do.

The point is that we can generate a UI prototype in less than a minute. And if we can get one, we can get 10 different prototypes, due to the probabilistic nature of AI. This finally makes parallel design a feasible option for less-resourced product teams. Ideation is free with AI, as is early prototyping of the ideas.

Given the current state of Generative UX, we should not expect it to produce a final, high-usability design. We’ll have to iterate on the design based on findings from usability studies and the expertise of human UX specialists. In fact, I would probably expect a human designer to edit the AI-generated prototype before subjecting it to user testing. As I’ve said a million times, the best results come from human-AI symbiosis, not from either form of intelligence acting alone.

The beauty of this new approach to AI-originated UI prototyping is that it’s much faster for the human designer to edit the AI prototype to make it better than it would be for him or her to create an entire prototype from scratch in Figma (or any other tool).

UI prototypes galore can be produced in a minute by Generative UX. Parallel design can finally become part of the standard UX workflow. Edit, refine, and test for best results. (Ideogram)

Generative UX Advances: Uizard Autodesigner 2

More proof that Generative UX is getting more useful: Uizard Autodesigner 2 is now out. Uizard produced a one-minute intro video (with annoying background music) and an 18-minute overview video with more depth about the upgrade. (A smaller point about the latter video is that we get to hear the authorized pronunciation of “Uizard.” The CEO just introduces himself as “Tony” without mentioning his title, which seems too low-key Danish for my taste — and I’m Danish myself. But when speaking to the world, including Asia, tell us that you’re the company founder.)

Uizard Autodesigner 2. (Midjourney)

Here’s Leonardo’s idea of a wall of UI prototypes at a design agency, made using the new Phoenix model. These prototypes look too high-fidelity for my taste. In your first few rounds of user testing, it’s best to stay with rougher, low-fidelity prototypes rather than wasting time polishing a design that will change dramatically during the next several iterations.

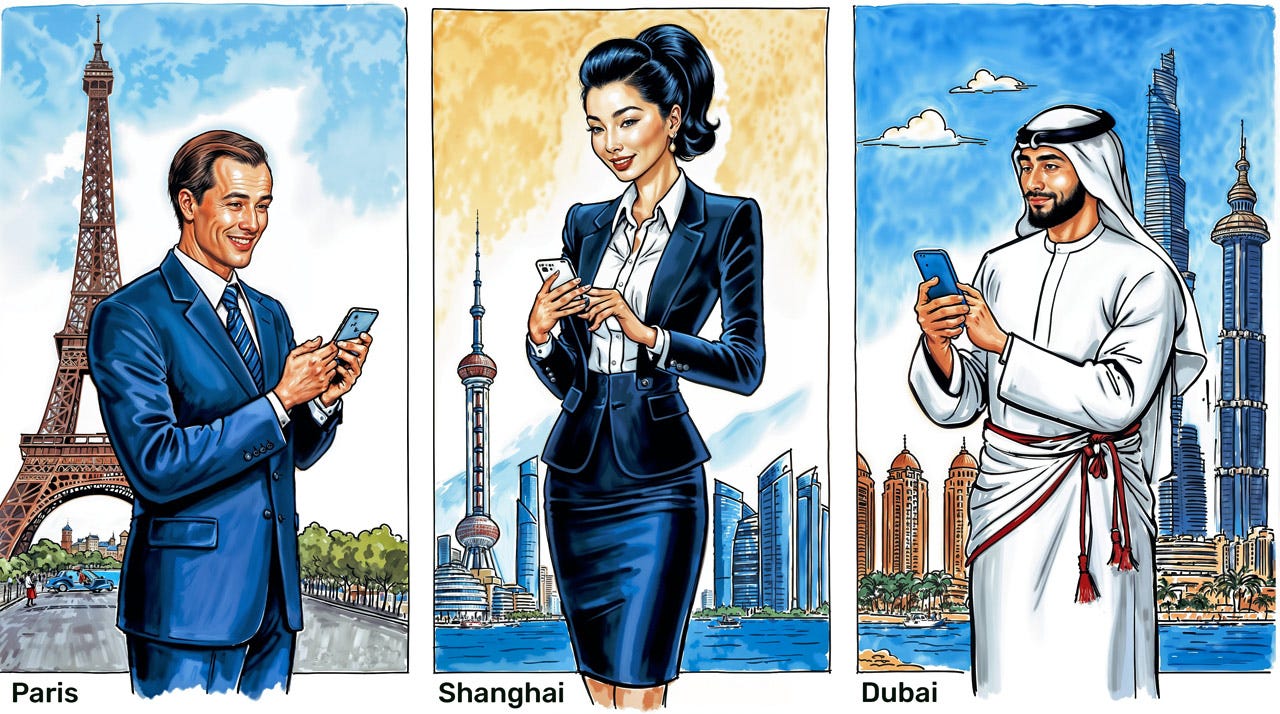

I haven’t had time to dig into the new release, but one feature I really like is the ability to translate the prototype into another language in one prompt. This will be a boon for international usability studies, which have been neglected in the past. Are these perfect localizations? No, but they should suffice to get early international user data about the big-picture UI, which is bound to change anyway before creating polished translations.

Natural language translation as a built-in feature for Generative UX will hopefully lead to substantial growth in early international user testing at the prototyping stage of the UX design process. (Leonardo)

In other developments, Uizard seems to have been acquired by Miro, which may or may not be good news. There are probably more resources but less independence. I tend to prefer having lots of different companies offering many different options.

Better AI Video

Literally (and I use the term “literal” literally), two hours after I sent out last week’s UX Roundup saying that my experiments with Luma Dream Machine had created a better music video than my attempt using Runway, Runway announced an upgraded “3rd generation” video model. However, Runway only announced the features in a post; it didn’t make the improvements available to users. So the new model is clearly not ready for actual use yet.

It’s a bad habit for companies to announce “improved AI” that’s not shipping but only shown in demos. However, since Apple, Google, and OpenAI have all done this dirty deed, we probably can’t blame Runway for wanting to redirect a bit of the AI-video limelight that had been completely focused on Luma after its launch.

(Luma also announced and shipped some minor upgrades. While the improvements are minor, it’s impressive to see any improvements barely a week after the release of the initial product. And bigger improvements are teased, so Luma joins the crowded field of showing us features that don’t ship.)

I’ll probably wait a few weeks or a month before I create my next music video, but when I do, I’ll look forward to exploring Runway’s new capabilities which will hopefully be live by then.

For now, let’s enjoy the fact that AI-generated video is evolving at a dizzying rate, to quote a line from my song about future-proofing education in the age of AI. When will AI video be good enough for real projects, as opposed to hobby projects like my UX songs? A year? Two years at most, I’d guess. It’s nice to see this progress in AI video (and similar progress in AI image generation, as I discussed in last week’s newsletter) while we are kept waiting far too long for GPT-5-level improvements in language models, which remain the most valuable use of AI.

Videos may be directed by humans and sometimes even star a human (though I expect the balance of on-screen talent to tip in favor of human-looking avatars instead of biologically-human actors), but most other parts of video production will gradually be taken over by AI, starting with domain-specific business videos and private home videos. The coming explosion of individual creators and the empowerment of tiny production companies will have a vastly bigger impact than the already-big effect of non-Hollywood videos currently published by the likes of YouTube and TikTok. (Ideogram)

About the Author

Jakob Nielsen, Ph.D., is a usability pioneer with 41 years of experience in UX and the Founder of UX Tigers. He founded the discount usability movement for fast and cheap iterative design, including heuristic evaluation and the 10 usability heuristics. He formulated the eponymous Jakob’s Law of the Internet User Experience. Named “the king of usability” by Internet Magazine, “the guru of Web page usability” by The New York Times, and “the next best thing to a true time machine” by USA Today.

Previously, Dr. Nielsen was a Sun Microsystems Distinguished Engineer and a Member of Research Staff at Bell Communications Research, the branch of Bell Labs owned by the Regional Bell Operating Companies. He is the author of 8 books, including the best-selling Designing Web Usability: The Practice of Simplicity (published in 22 languages), the foundational Usability Engineering (27,141 citations in Google Scholar), and the pioneering Hypertext and Hypermedia (published two years before the Web launched).

Dr. Nielsen holds 79 United States patents, mainly on making the Internet easier to use. He received the Lifetime Achievement Award for Human–Computer Interaction Practice from ACM SIGCHI and was named a “Titan of Human Factors” by the Human Factors and Ergonomics Society.

· Subscribe to Jakob’s newsletter to get the full text of new articles emailed to you as soon as they are published.

· Read: article about Jakob Nielsen’s career in UX

· Watch: Jakob Nielsen’s 41 years in UX (8 min. video)

Congratulations on the 1-year anniversary of the newsletter, your content is very rich for me, I aim to see everything you leave as a link in the newsletters!

Sincerely,

Even more impressive than the AI tools building designs is tldraw’s "Make Real" (https://tldraw.substack.com/p/make-real-the-story-so-far). There are a couple of ≈2-minute videos demonstrating it. Not only can it produce the UI, but it can create a fully working product from a sentence. The UI, graphics, code, and prototype are all provided to you in seconds.

This is so quick that UX researchers can iterate during a usability test. Pretty soon it is going to be very difficult to justify pre-concept research, given the speed and cost reductions businesses will demand.

I'm afraid we UX researchers have a very short shelf life...