UX Roundup: AI Follow-Up Questions in Usability Studies | Top UX Evaluation Methods | AI Use in UX | Keep Users in the Flow | New Grok Image Model

Summary: AI can ask follow-up questions in usability sessions | The top 3 UX evaluation methods | AI use in UX | New hybrid UI for AI aims to keep users engaged in the flow | New model for AI-driven image generation in Grok

UX Roundup for December 11, 2024. (Midjourney).

AI Asking Follow-Up Questions in Usability Studies

New research finds that GPT-4-level AI can improve the findings from unmoderated usability sessions by asking follow-up questions. However, current AI is insufficiently insightful to generate deeper follow-up questions.

A recent paper in the International Journal of Human–Computer Interaction by Eduard Kurica, Peter Demcak, and Matus Krajcovic from the Slovak University of Technology analyzed sessions with 60 participants who tested a prototype e-commerce website. All sessions were run as unmoderated remote tests, half with and half without AI involvement.

It's great to see some research into using AI for UX work. Since I have made highly critical statements about the lack of good AI-UX research in academia, fairness dictates that I applaud these authors. (Hat tip to Lawton Pybus for alerting me to this study.)

“Unmoderated” testing means that each test participant works alone, performing test tasks based on written instructions. This contrasts with old-school “moderated” usability studies, where a human test facilitator leads the participant through the task and can interject prompts ranging from “What are you thinking now?” to customized follow-up questions. Moderated sessions are thus more flexible and can probe deeper, but they come at the cost of needing an experienced usability professional for the duration of all sessions.

AI can enhance unmoderated usability studies by asking follow-up questions of the test participants. (Midjourney)

This research project added a twist to the concept of “unmoderated” sessions by having an AI moderator ask follow-up questions to half the users. The control group (30 users) only received static, pre-written questions, as is traditional for non-AI-enhanced remote usability sessions. In the AI condition (30 users), the users first performed the pre-defined tasks, after which GPT-4 would generate between one and three follow-up questions on the fly, depending on each user’s answers to the predefined tasks.

The AI condition didn’t completely emulate a standard moderated usability study, because the AI would not provide revised tasks or branch through a more elaborate test plan to decide on subsequent tasks based on the participant’s previous actions. All the tasks were predefined and provided in an unvarying sequence. The AI also didn’t interject thinking-aloud prompts or interact with the test participants in any other way than asking follow-up questions after each task.

Most important, the AI couldn’t see how the users interacted with the prototype. It was restricted to analyzing their verbal comments. As I’ve said before, watching what users do is more important than listening to what they say. At the time of the research project, AI models couldn’t watch users’ actions. However, newer AI models have added such capabilities, meaning we should get more capable AI study moderators in the future.

The main finding from the research is that there were no statistically significant differences between the two study conditions in terms of usability problems identified in the prototype, which is the primary goal of most usability testing. To me, this is because AI was limited to asking follow-up questions rather than facilitating the users’ task performance. More capable, next-generation AI study facilitators will have the potential to do better.

The AI-generated dynamic follow-up questions did succeed in eliciting additional user feedback, but it was rare for this additional information to reveal deeper insights. This demonstrates that the AI questions were not as penetrating as those that a skilled human study facilitator would have likely generated. (However, since these were unmoderated sessions, no such human was available, and it would have been expensive to have included a skilled human to facilitate 60 sessions.)

A good human usability facilitator can currently ask more profound questions of the study participants than an AI facilitator can. This deeper human understanding of events in the session produces more insightful user feedback in response. Next-generation AI will hopefully do better in 2025. (Leonardo)

What can we conclude from this research? Mostly, more research is needed using the next generation of AI usability-facilitation capabilities. GPT-4 level AI capabilities are insufficient to add enough value to unmoderated test sessions to be worth deploying.

How does this new research match my analysis of User Research with Humans vs. AI from April 2024? I gave a combination of a skull and a thumbs-up for the ability of an AI to facilitate user research with human users. I said that it’s currently not a good idea (confirmed by the new research), but that it has potential after further AI advances (which I continue to believe).

For good measure, let me repeat one of the other conclusions from that April article: I gave an unmitigated skull (meaning, don’t do it) for the notion of replacing the human participant in a usability study with an AI.

(See also my article, What AI Can and Cannot Do for UX, for my analysis of additional issues beyond user testing.)

Finally, even though AI should currently not be relied on to facilitate user research sessions on its own, you can employ one of the 4 metaphors for working with AI to improve your own facilitation skills. Use AI as a coach, where it listens to your interaction with the user. After the session, ask AI to suggest ways you could have asked better questions. Many of its ideas may be inane, but some will help you improve.

AI can watch over your shoulder as you facilitate a user session. Use AI as a coach to suggest ways you could have done better. Some of its ideas will be bad, so don’t have it do the job on its own yet. (Midjourney)

Top 3 UX Evaluation Methods

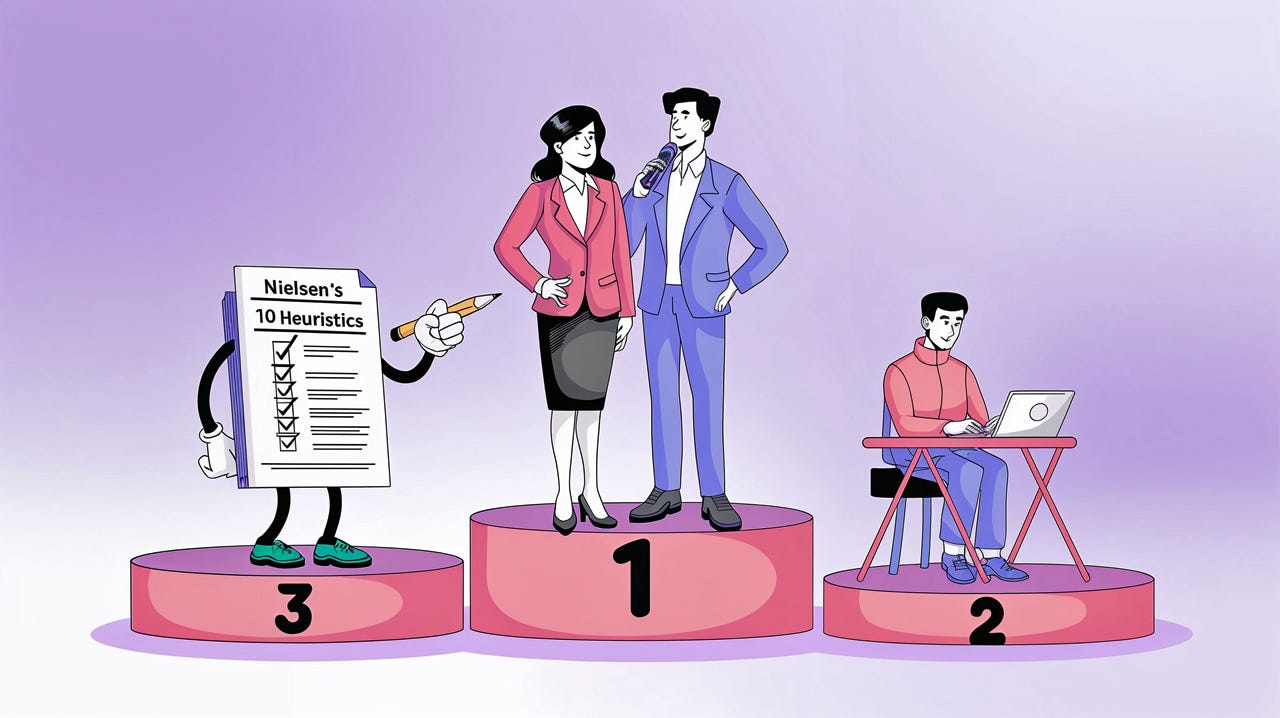

What design evaluation methods are used the most in industry? Koray Yitmen and Oğuzhan Erdinç from UXServices and Bahçeşehir University in Turkey surveyed 61 UX professionals to find out. Sorted by the number of professionals who use a method “frequently,” the top evaluation methods were:

User interviews: 74%

Remote user testing: 64%

Heuristic evaluation: 52%

Interestingly, these are pretty much the same medalists I found when I investigated the most popular usability methods in the 1990s, except that remote user testing is now near the top, whereas traditional lab-based user testing ranks very low, with only 18% using this method frequently. In-person testing used to be a very popular method, whereas remote research was rare because it was difficult to conduct before the emergence of current services like UserTesting.com, Optimal Workshop, and Userlytics.

The top UX evaluation methods in the most recent study remain the same as ever: (1) user interviews, (2) remote user testing, and (3) heuristic evaluation. (Ideogram)

Top Use of AI in UX

The Turkish study (see previous news item) also asked respondents about their use of AI in their design evaluation projects. Only 13% use AI “frequently,” whereas 41% use AI “occasionally.” These are disappointingly low percentages. (One would hope that many more UX professionals employ AI in other stages of the UX design lifecycle, such as ideation and prototyping, for which AI is ideal.)

To have any kind of UX career in a few years, you need extensive experience using AI in UX, or you will be brutally outcompeted by other professionals who 10x their productivity (when measured at a team level) with these tools. If you’re currently behind the program, read my article on the Top AI Tools Used by UX Professionals for ideas on AI use during various UX activities. Or see my report on the top 8 uses of AI in a Norwegian design team.

This recent research study only analyzed the use of AI in UX evaluation projects. The only use cases employed by more than 20% of respondents were:

Analyzing user test data: 30%

Developing use scenarios: 28%

Developing user test plans: 23%

Preparing reports: 21%

We have not yet derived a consensus on the AI uses everybody needs all the time, compared with more specialized uses for the occasional special case. This is a strong contrast to the top set of basic evaluation methods (see above), where the consensus is clear: use user testing, user interviews, and heuristic evaluation as your basic tools, with specialized methods to be taken out of the toolbox from time to time.

The AI toolbox for UX projects is not yet as settled as the toolbox of design evaluation methods. (Leonardo)

AI UI to Keep Users in the Flow

For about two years, the dominant way of interacting with AI tools has been linear: the user expresses his or her intent by typing a prompt, the tool responds with (hopefully) the desired outcome, and the dialog continues in a long scrolling list as the user refines that outcome, using the prompting diamond.

I have long said that usability would be enhanced by a hybrid UI that combined prompting with GUI controls and 2-dimensional use of the computer screen. (Voice dialogs are inherently linear — and worse, they are ephemeral, in that the words are gone as soon as they are uttered. That’s why I only believe in voice as a secondary interaction style, much as it has its place.)

Voice interfaces are ephemeral: a word is gone as soon as it is spoken. Voice is also inherently linear: we can’t scan it or easily return to a previous utterance. This limits the usability of voice UI for many practical applications, much as it’s probably a great UI for applications such as virtual girlfriends. (Leonardo)

Two recent examples show progress in AI UX beyond plain text prompts:

The “flow” feature in Leonardo (one of the leading image-generation products)

The timeline editing in Sora (OpenAI’s new video-generation product)

I used Leonardo’s flow feature to generate the above image of voice interfaces as ephemeral. Here’s a screenshot from the beginning of my process:

The “flow” feature in Leonardo.

You do have to start it out with a prompt, but then the new UI takes over. Leonardo generates an endless flow (thus the name) of thumbnail images that reflect various interpretations of the prompt. This happens very fast, thus overcoming the slow response times that plague the usability of many other image and video generation products.

As the user scrolls, endless additional images appear. Ideally, the new images would appear in less than 0.1 seconds, but anything less than a second is acceptable, given the relation between time and usability in user experience design. Response times less than 0.1 second would give the illusion that the infinitely many images had always been there and the user was just revealing them by scrolling. Subsecond response (but slower than 0.1 s) means that the user realizes that the AI is generating new images, but the delay isn’t annoying enough to impede exploration. Leonardo’s feature is aptly named because speed does keep users in their “flow” state of creation in Csikszentmihalyi’s sense of the word (a mental state of complete absorption and effortless concentration during creative activities).
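The two thresholds in the preceding paragraph (0.1 second and 1 second) can be written down as a small classifier. This is only an illustrative sketch: the function name and the band labels are assumptions made for this example, not terms from Leonardo or from any library. Only the two cutoff values come from the text.

```python
# Cutoffs taken from the paragraph above; everything else is illustrative.
INSTANT_LIMIT_S = 0.1   # below this, images seem to have "always been there"
FLOW_LIMIT_S = 1.0      # below this, the delay does not impede exploration


def classify_response_time(seconds: float) -> str:
    """Map a measured response time to one of three perceptual bands."""
    if seconds < 0:
        raise ValueError("response time cannot be negative")
    if seconds < INSTANT_LIMIT_S:
        return "instant"       # user feels they are merely revealing images
    if seconds < FLOW_LIMIT_S:
        return "acceptable"    # generation is noticeable but not annoying
    return "disruptive"        # assumed label for anything of 1 second or more


for t in (0.05, 0.4, 2.5):
    print(f"{t:.2f} s -> {classify_response_time(t)}")
```

A team could feed measured scroll-to-image latencies into a function like this to check how often the product stays within the subsecond band.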

(I don’t know how Leonardo can generate images much faster than anybody else. Possibly they only create the thumbnail versions as the user scrolls and defer generation of the full-sized images until the user clicks a thumbnail for closer inspection.)

As Stalin supposedly said, quantity has a quality of its own. Just seeing as many image variations as you care to scroll through provides additional creative inspiration. Leonardo further allows the user to steer the generation of additional images by clicking on thumbnails closest to his or her vision and asking for “more like this.”

OpenAI’s new Sora video product goes even further beyond prompting to offer new ways of timeline-based creation of videos. (Timelines are a standard UI element for video editing, also found in programs like CapCut and Adobe Premiere.) For example, the user can create cards with prompts that describe what should happen in the video at various points on the timeline. This is a better UI than trying to put everything into a single text prompt (“First, make the tiger roar, and then after 5 seconds, he should walk off stage to the right”).
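To see why cards on a timeline are easier to work with than one long prompt, it can help to picture the tiger example as structured data. The sketch below is purely hypothetical: `PromptCard` and `active_prompt` are names invented for this illustration and say nothing about how Sora actually stores or processes its cards.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class PromptCard:
    """Hypothetical card: a prompt pinned to a point on the timeline."""
    start_s: float  # position on the timeline, in seconds
    prompt: str     # what should happen from this point onward


def active_prompt(cards: Sequence[PromptCard], t: float) -> Optional[str]:
    """Return the prompt of the latest card starting at or before time t."""
    current = None
    for card in sorted(cards, key=lambda c: c.start_s):
        if card.start_s <= t:
            current = card.prompt
        else:
            break
    return current


# The single-prompt tiger example, split into two cards.
timeline = [
    PromptCard(0.0, "The tiger roars"),
    PromptCard(5.0, "The tiger walks off stage to the right"),
]

print(active_prompt(timeline, 2.0))
print(active_prompt(timeline, 6.0))
```

Each card can be edited, moved, or deleted on its own, whereas changing the timing in the single-sentence prompt means rewriting the sentence and hoping the model interprets "after 5 seconds" as intended.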

I was pleased to see the product designer for Sora (introduced as “Joey”) play a prominent part in the launch event where he was given significant time to explain the new UI features he had designed for the product. In fact, I’m pleased that they even have a dedicated professional designer on the project team — this is new in the AI world.

I think OpenAI may have recognized that the base level of AI is becoming a commodity, as anybody who can spend a few billion dollars on training compute can construct frontier-level AI capabilities. There’s certainly still product differentiation to be had by shipping faster, which is why competing video products like Kling and Hailuo gained the advantage while OpenAI was dragging its feet.

But sustained product differentiation in AI will come from UX: make easier, integrated workflows based on a task analysis of user needs and design interactions that are simultaneously easy to use and powerful ways of achieving the user goals.

Sora’s new UI for AI-driven video generation looks like a big step up. That said, the launch event was a demo, and UI demos are often misleading in terms of usability, because the person driving the demo already knows exactly what to do at each step to make the product shine. We shall see how good the design actually is, once it’s more widely used for real projects.

Early AI user interfaces were strictly based on text prompts and offered a long scrolling list of past interactions. Newer AI UI, such as Leonardo’s Flow feature and OpenAI’s Sora video generation, attempt a more fluid interaction style that keeps users engaged and in the flow as they manipulate their creations. (Ideogram)

New Grok Image Model

Until recently, Grok used Flux as its image model. This week, they changed to using a new in-house image model code-named Aurora. While the new image model is good at certain photorealistic images, it’s lacking in other image styles and in prompt adherence.

I tried my usual test prompt, asking for a group of 3 K-Pop idols dancing on stage in a TV production, to be rendered in both a photorealistic style and as a color pencil drawing. Here are the results. Clearly, Aurora can’t count to 3.

Photorealistic (top) and color pencil drawing (bottom) of “3 K-Pop idols dancing on stage,” as drawn by Grok’s new image model. While all the characters do look like K-Pop idols, both images have more than 3 idols. And they look more like they’re posing than dancing. (Grok)

This new image model was supposedly built very fast by a tiny team. Maybe Black Forest Labs (the company behind Flux) had announced an unacceptable licensing fee increase. I hope/think xAI can do better in the next release.

For comparison, here’s Midjourney’s interpretation this week of the color-pencil version of my prompt. I dislike its strong preference for showing the dancers from the back. (See also my video reviewing Midjourney’s image quality from version 1 through 6 — YouTube, 4 min.)

Finally, here’s Ideogram’s version, to allow you to assess image generation as of December 2024. Ideogram continues to have the best understanding of the requested scene, even though it somewhat lacks image quality, especially in esoteric styles. Hands remain a weak point.

About the Author

Jakob Nielsen, Ph.D., is a usability pioneer with 41 years of experience in UX and the Founder of UX Tigers. He founded the discount usability movement for fast and cheap iterative design, including heuristic evaluation and the 10 usability heuristics. He formulated the eponymous Jakob’s Law of the Internet User Experience. He was named “the king of usability” by Internet Magazine, “the guru of Web page usability” by The New York Times, and “the next best thing to a true time machine” by USA Today.

Previously, Dr. Nielsen was a Sun Microsystems Distinguished Engineer and a Member of Research Staff at Bell Communications Research, the branch of Bell Labs owned by the Regional Bell Operating Companies. He is the author of 8 books, including the best-selling Designing Web Usability: The Practice of Simplicity (published in 22 languages), the foundational Usability Engineering (27,821 citations in Google Scholar), and the pioneering Hypertext and Hypermedia (published two years before the Web launched).

Dr. Nielsen holds 79 United States patents, mainly on making the Internet easier to use. He received the Lifetime Achievement Award for Human–Computer Interaction Practice from ACM SIGCHI and was named a “Titan of Human Factors” by the Human Factors and Ergonomics Society.
