UX Roundup: AI Editing | Speaking This Week | Bad Innovation | Faster Midjourney | Feeling Less Rushed | Improving International Collaboration | OpenAI o1

Summary: From AI generation to AI editing | Jakob speaking at ADPList this week | Innovation can be harmful | Is Midjourney getting faster? | AI may make workers feel less time pressure | AI can help international collaboration | OpenAI o1

UX Roundup for September 16, 2024. (Midjourney)

From AI Generation to AI Editing

Generative AI is old hat: describe what you want, and AI makes corresponding text, images, or videos. Many observers claim this is the year of AI video and multi-modal AI.

However, we’re also seeing a trend away from having AI purely generate fresh content from scratch and toward using AI to edit content in a more deliberate way. These services use AI to recognize user-provided content and isolate relevant properties of that content so that they can be manipulated in an editor. This is far superior to traditional editing, which operates on the surface manifestation of those properties (such as the pixels of an image).

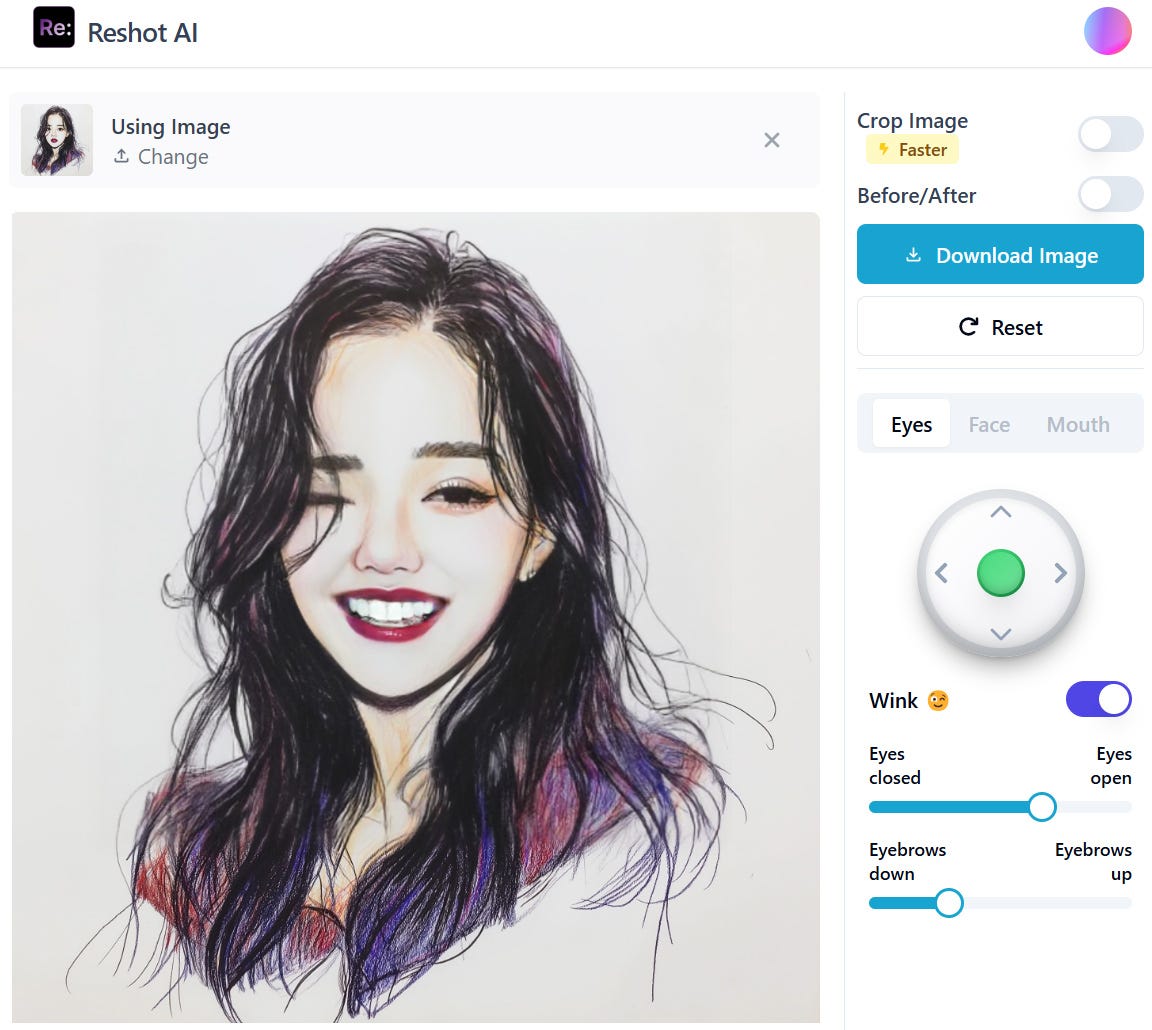

Take the example of portrait manipulation, as offered by the new Reshot AI application. As you can see in the example screenshot below, the user can edit facial features (such as eyes) in a semantically meaningful manner. For example, there’s a toggle for winking and you can make the eyes more or less open or closed. This is hugely more efficient than trying to, let’s say, make a wink in Photoshop by manipulating pixels.

Reshot AI is a new service that allows users to edit portraits (whether photos or drawings) with GUI controls that are specialized for manipulating an image of a human face based on understanding facial components. Here, I’m editing a drawing of a (hypothetical) K-pop influencer.

Midjourney and many other generative AI tools offer similar features to the extent that one can generate new images by using the previous generation (or uploaded image) as an image reference and changing the prompt to specify the desired changes. However, such remixes are on an all-or-nothing basis, and the new generation will often change additional elements of the image beyond those that were desired.

You wouldn’t believe what is supposedly the main current use case of Reshot: to make thumbnails for YouTube where the influencer has an exaggeratedly astonished facial expression. Basically, to create that “you wouldn’t believe what’s in this video” reaction shot that motivates clicks.

As an experiment, I tried going from a neutral face to an astonished face for two image styles: photorealistic and drawing.

Editing pictures of two stereotypical YouTube influencers.

Top row: Photorealistic portraits, similar to those you may get from a photoshoot of a real person.

Bottom row: Color pen drawings.

Left column: Original headshot with a neutral expression made with Midjourney.

Middle column: Astonished expression also made with Midjourney by using the left images as a character reference and changing the prompt to specify “mouth wide open in a scream, eyebrows raised dramatically, eyes exaggeratedly wide, in an expression of extreme surprise and excitement.”

Right column: Images made with Reshot AI by uploading the original Midjourney neutral-expression faces and applying edits to the mouth, eyes, and eyebrows similar to those specified in the prompt used to make the middle column.

In this case, I have to say that I prefer the middle column for the “astonished” variations of both the photo and the drawing. Midjourney wins. However, even though I specified a high value of the “--cw” (character weight) parameter, Midjourney’s revised images don’t fully replicate the same person. This is most clearly seen in the hairstyle of the drawings. The astonished K-pop influencer has shorter hair than “the same” person with a neutral expression. In contrast, Reshot AI replicates the original image’s hairstyle.

Given the current quality of Reshot AI, it’s probably mostly useful in cases where you have decent photos but want to change them a little. Social media thumbnails may indeed be the main use case for now. However, Jakob’s First Law of AI says that “Today’s AI is the worst we’ll ever have,” and that certainly applies to face editing as well. Reshot AI launched a week ago, so it’s still early days. I assume that future versions will have better image quality and more controls. Maybe they’ll even add editing options at a higher level so that you can specify emotions as a starting point for tweaking more precise face elements.

Jakob Speaking at ADPList This Week

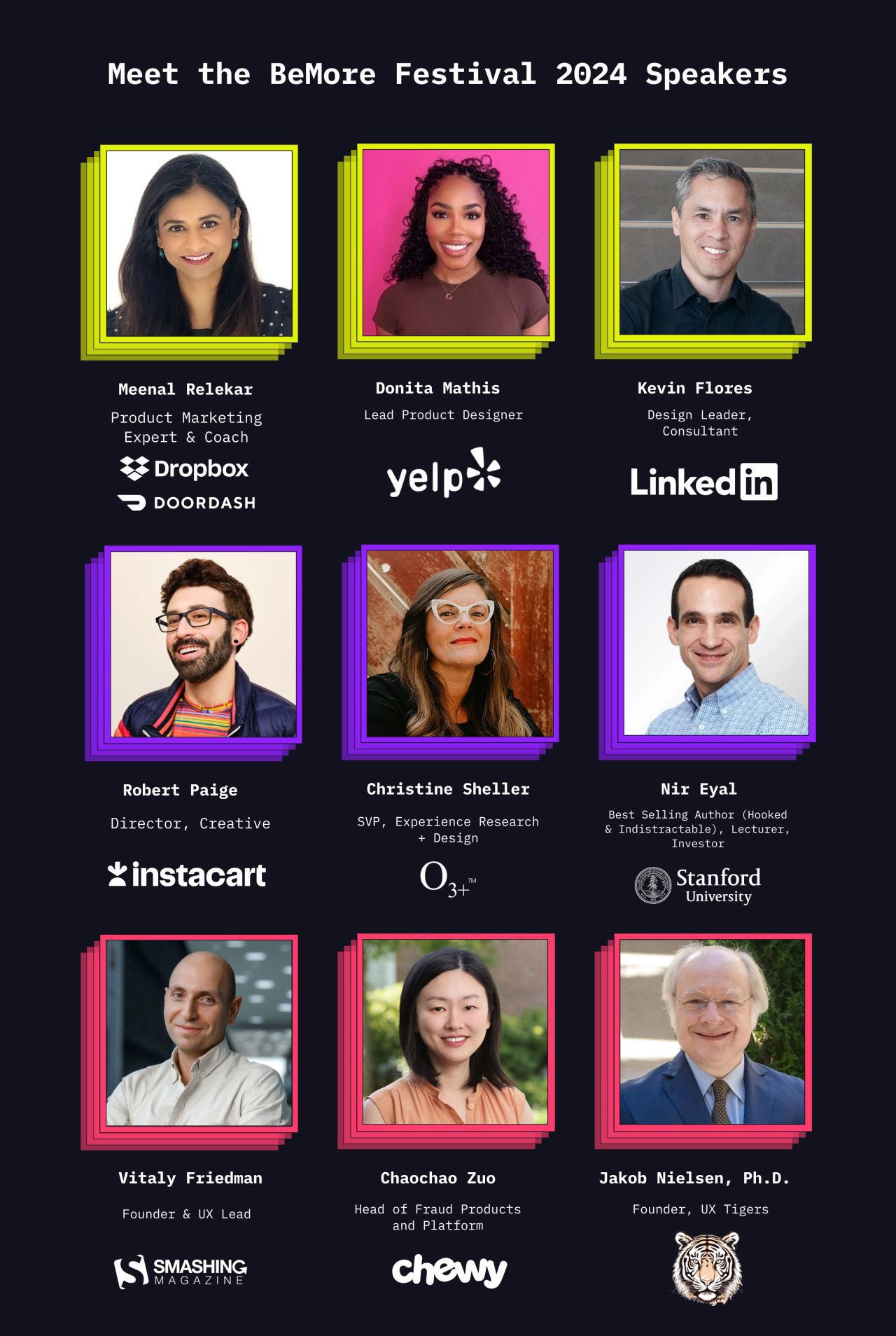

I’m presenting the closing session at ADPList’s BeMore conference this Thursday, September 19, at 9:00 AM US Pacific time. (Link to my session. Link to time zone converter for this time to see the equivalent time in your location.)

BeMore runs across two days (Sept. 18 and 19) and will likely be the main design conference of 2024, at least in terms of audience size and worldwide reach (it’s an online event). It has more than 100 speakers from 25 countries, and the registration fee is truly affordable at only US $15.

Here are some of the speakers (for the full list of 100 speakers, see the website):

Innovation Can Be Harmful

Interesting lecture by Johnathan Bi about the downside of innovation (1 hr. 11 min video, YouTube).

For most of human history, innovation was considered harmful, or even outright illegal. Stick to what we know and how things have always been done. Even the great thinker Plato viewed innovation with suspicion, only deeming it necessary in response to external changes or disasters. For more than a thousand years, the leading religious authorities in Europe defined innovation as synonymous with heresy and deviation from divine teachings.

For most of history, having new ideas could get you in trouble with the authorities. (Dall-E)

Obviously, those attitudes kept humanity in poverty for millennia. Today, we live in affluence because of a few hundred years’ worth of engineers and businesspeople who dared to invent new things.

Since the start of the Industrial Revolution, innovation by engineers and businesspeople has created the fantasyland of plenty we all inhabit today in industrialized countries. Our current lifestyle would be a fantasy for almost any human living from the dawn of time until the 1760s when the invention of the Spinning Jenny and the Watt Steam Engine started spinning affluence. (Dall-E)

On balance, I’m in favor of innovation and trying new things. But we should also acknowledge the downsides of innovation, especially the pursuit of novelty for novelty’s sake. Jakob’s Law of the Internet User Experience is a powerful argument for keeping most user interface designs conformant to what users already know.

Unchecked innovation has its downsides. This doesn’t mean that we should break our ideation lightbulb, but novelty for its own sake should be avoided, at least in user interface design. (Midjourney)

Bi lists the following downsides of innovation:

1. Disruption and Instability: Innovation can lead to unwanted social and political changes, creating instability. Technological advancements often force changes in other domains, disrupting existing structures and norms.

2. Fetishization and Pathology: Modern society tends to fetishize innovation, treating it as an end in itself. This obsession can lead to pursuing novelty for its own sake, rather than for substantive improvement or progress.

3. Erosion of Tradition: Excessive focus on innovation can lead to a disregard for the past and the valuable lessons it holds. This can result in losing touch with important cultural, social, and intellectual traditions.

4. Encouragement of Superficial Changes: The drive for constant innovation can lead to superficial changes that are valued only for their novelty, not for their actual usefulness or contribution to human welfare.

5. Potential for Harm: Innovation, especially in technology, carries inherent risks. Advances can lead to harmful or destructive outcomes, such as the development of dangerous weapons or technologies that exacerbate social inequalities.

6. Misplaced Optimism: There is often an unrealistic optimism about the benefits of innovation, ignoring the potential downsides and unintended consequences. This can lead to an uncritical acceptance of new technologies and ideas without adequate scrutiny.

7. Misdirection of Resources: The relentless pursuit of innovation can divert resources and attention from more stable, long-term improvements and solutions, leading to a focus on short-term gains over sustainable progress.

8. Narcissism and Ego: The modern emphasis on innovation often ties into personal ego and the desire for individual recognition, rather than a genuine commitment to collective improvement and wisdom.

While these are all good points, I remain on the side of innovation: the upside outweighs these downsides. Still, we do need to temper innovation. Bi’s points 3 and 4 (replacing tradition with superficial change) resonate particularly strongly with me, given my campaign against vocabulary inflation in UX, which has a large downside for those exact two reasons.

Pursuing superficial changes and fetishizing novelty can land you with an empty trophy case because you don’t make fundamental advances that last. (Midjourney)

I have always had an affinity for resisting orthodoxy — paradoxically, this attitude is a good source of innovation. That’s why I recommend Bi’s talk, which challenges the prevailing orthodoxy in favor of innovation, even though I mostly disagree with him.

Is Midjourney Getting Faster?

I feel that Midjourney has been faster over the last week or so. Asking for a “Subtle” variation of an image completes before I have scrolled up to the top of the “Create” screen, and even a “Strong” variation or a brand new prompt seems faster than before.

(I have not collected formal response time metrics, so I don’t know whether the speed improvement is real or just a feeling. But Midjourney does feel almost 50% faster than a month ago.)
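A formal measurement along these lines could be quite simple. The following is a minimal Python sketch under stated assumptions: `generate` is a hypothetical stand-in for submitting a prompt and waiting for the result (no Midjourney API is assumed here), and the comparison uses median response time across two sets of samples.

```python
import statistics
import time
from typing import Callable, List


def time_runs(task: Callable[[], object], runs: int = 5) -> List[float]:
    """Return the wall-clock duration in seconds of each call to `task`."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        task()
        durations.append(time.perf_counter() - start)
    return durations


def percent_reduction(before: List[float], after: List[float]) -> float:
    """Percent drop in median response time from `before` to `after`."""
    old = statistics.median(before)
    new = statistics.median(after)
    return (old - new) / old * 100.0


if __name__ == "__main__":
    # Hypothetical stand-in for one image generation (an assumption,
    # not a real service call).
    def generate() -> None:
        time.sleep(0.01)

    samples = time_runs(generate, runs=3)
    print(f"median response time: {statistics.median(samples):.3f} s")
```

Note that “faster” is ambiguous: this sketch reports the drop in response time, whereas 50% higher throughput would correspond to only about a 33% drop in response time.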

Response times are incredibly important for generative AI (as they are for all user experiences): fast results encourage exploration, which in turn improves ideation and the quality of the final results.

Subsecond response times are ideal, and Midjourney isn’t there yet. But faster is better in this area of UX design. If Midjourney has in fact become faster, I don’t know whether this is simply due to more inference compute or whether they rewrote the code for efficiency.

Midjourney seems to be moving faster these days. Improved AI response time encourages exploration and iteration. (Midjourney)

AI Makes Users Feel Less Rushed

Small study included in a recent roundup of Microsoft research on AI: Madeline Kleiner and 3 coauthors asked 40 Microsoft employees to write a sales report in Word based on data in Excel. Half used AI to help with this task, and half performed it without help. (The AI was Microsoft Copilot, but even though Microsoft probably won’t like me saying this, I suspect that the results would have been much the same with any other frontier model since they are currently roughly equally good.)

Participants were asked to rate how demanding, hard, stressful, and rushed the task was. People using AI rated the task as less mentally demanding than the control group did: 30 out of 100 for participants using AI versus 55 for people not using AI. The scores for perceived stress and difficulty showed similar differences.

But the scores for feeling rushed differed dramatically: Again, on that 100-point scale, participants using AI gave a rating of 28 for being rushed, compared to a rating of 67 from users without AI. (All four ratings were different at p<0.05.)
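To put the two reported pairs of ratings side by side, here is a small Python sketch. It uses only the mean ratings quoted above; the per-participant data is not given here, so it cannot reproduce the significance test, and the "relative" figure is simply one way to express the gap.

```python
# Mean ratings on the 100-point scale, as reported in the text above.
ratings = {
    "mentally demanding": {"with_ai": 30, "without_ai": 55},
    "rushed": {"with_ai": 28, "without_ai": 67},
}


def gap(scores: dict) -> tuple:
    """Return (gap in points, gap as a percentage of the no-AI rating)."""
    points = scores["without_ai"] - scores["with_ai"]
    relative = points / scores["without_ai"] * 100
    return points, relative


for name, scores in ratings.items():
    points, relative = gap(scores)
    print(f"{name}: {points} points lower with AI ({relative:.0f}% lower)")
# mentally demanding: 25 points lower with AI (45% lower)
# rushed: 39 points lower with AI (58% lower)
```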

It makes sense that getting help could make people feel that the task was less difficult and stressful. (At least if the AI was any help, which it seems it was.) But why were they less rushed? The Microsoft authors don’t say, so I’ll have to speculate: other studies have shown that AI improves productivity and allows users to focus on the higher-level aspects of the task, while AI handles the more mundane aspects for them. It’s possible that when the basics are over with quickly, people feel that they have “time left” and don’t feel as rushed while they work on the higher-order aspects of the task. I would like to see this research question subjected to more studies, though, before feeling confident in this conclusion.

If AI can make workers feel less rushed and under less time pressure, that’s a mental health benefit on top of the economic benefit from the productivity improvements caused by AI. (Midjourney)

AI Improves International Collaboration

A second finding from that same Microsoft report is probably more fundamental and important. Benjamin Edelman and Donald Ngwe asked 77 native Japanese speakers to review the recording of a meeting in English. Half were allowed to use the Copilot Meeting Recap, both for an AI-generated meeting summary and to ask questions about the meeting. The other half just watched the meeting without AI help in understanding what had happened.

After reviewing the meeting, the Japanese speakers with AI help answered 16.4% more questions about the meeting correctly and were more than twice as likely to get a perfect score.

Again speculating on the reasons for the findings, it is likely that reading a meeting summary in your native language makes it easier to understand what meeting participants say in a foreign language. Having a good conceptual model substantially helps in classifying specific details correctly.

Unfortunately, this research didn’t include a test condition with simultaneous translation of the English-speaking meeting participants into Japanese. If the translation quality is good (as it’ll be soon enough), this will likely be the preferred way to participate in or review a meeting in a foreign language. I still think that the meeting summary will likely help people understand a translated meeting because of that conceptual model formation.

Reading an AI summary of a meeting in your own language before watching the recording makes you more likely to understand details presented in a language you don’t speak fluently. (Ideogram)

OpenAI o1

One more terrible product name from this company. At least it was available when announced (I got it two hours after seeing the X post), as opposed to advanced voice mode, which is still only in limited release.

You’ve probably read about it already: OpenAI o1 is a new model that adds improved reasoning. This means the model performs better on many programming, math, and science tasks. However, according to OpenAI's own tests, it performs slightly worse on traditional language-based tasks (writing and editing text). I can confirm this latter point based on the extremely limited test case of asking o1 to suggest bullet points to promote this newsletter edition on LinkedIn. It did worse than Claude 3.5.

One interesting metric in the announcement is that o1 is better than human Ph.D.s at answering complicated science questions in their fields. The accuracy of o1 on these questions was 78%, whereas the human Ph.D.s scored an average of 70% correct answers. The test was “GPQA diamond, a difficult intelligence benchmark which tests for expertise in chemistry, physics, and biology.”

We don’t expect Ph.D.-level performance from AI until around 2027 or 2028. A chemistry Ph.D. can do a lot more than answer factual questions about chemistry.

However, beating top human experts on difficult questions within their field is certainly an accomplishment. We used to say that current AI couldn’t do the math and was only as good as a smart high school student (it scored high on AP exams, but that was about it).

In general, though, I’m sad that this advance privileges software developers over normal business professionals. Yes, programming is super-important for the economy, as “software is eating the world.” Still, we also need to improve the performance of other fields so that we (including design and usability) can keep up with the new accelerated pace of shipping code.

The geeks may pull further ahead of design (symbolized by a sketching pencil) if AI continues to advance more for programming tasks than other tasks. (Grok)

Jason Wei from OpenAI posted two important points:

Now that o1 does reasoning, avoid using the old prompt engineering technique of specifying chain of thought in the prompt. Better to let o1 figure out for itself how to solve the problem. In general, as AI models change, some of the old approaches to prompting will change.

Reasoning depends on inference compute, not training compute.

This latter point is important, because it is likely easier to scale inference compute (which can be distributed) than training compute (which must be centralized, or at least synchronized). It will be very hard to build training clusters that consume 10 nuclear power plants’ worth of electricity, but that’s likely what’s needed for ASI (artificial super-intelligence, or dramatically surpassing even the best human performance).

In contrast, we can allocate tens or thousands of times more inference compute to solving high-value problems. Eventually, much inference might run on the user’s local equipment, which can be scaled easily: pay a thousand dollars more for a PC with a high-end inference chip, just like gamers currently pay a thousand dollars more for a specced-out computer that can render games at a high frame rate. Paying $1,000 more for a computer is peanuts if it allows you to do your job 10 times better. (Assuming you make more than $100 per year.)

Training an advanced AI model requires an immense training cluster. Improved inference can be distributed across multiple computers and possibly run locally on the user’s PC. Much easier to scale inference than training, which may be a benefit of AI that relies more on inference-time reasoning. (Midjourney)

About the Author

Jakob Nielsen, Ph.D., is a usability pioneer with 41 years of experience in UX and the Founder of UX Tigers. He founded the discount usability movement for fast and cheap iterative design, including heuristic evaluation and the 10 usability heuristics. He formulated the eponymous Jakob’s Law of the Internet User Experience. He was named “the king of usability” by Internet Magazine, “the guru of Web page usability” by The New York Times, and “the next best thing to a true time machine” by USA Today.

Previously, Dr. Nielsen was a Sun Microsystems Distinguished Engineer and a Member of Research Staff at Bell Communications Research, the branch of Bell Labs owned by the Regional Bell Operating Companies. He is the author of 8 books, including the best-selling Designing Web Usability: The Practice of Simplicity (published in 22 languages), the foundational Usability Engineering (27,471 citations in Google Scholar), and the pioneering Hypertext and Hypermedia (published two years before the Web launched).

Dr. Nielsen holds 79 United States patents, mainly on making the Internet easier to use. He received the Lifetime Achievement Award for Human–Computer Interaction Practice from ACM SIGCHI and was named a “Titan of Human Factors” by the Human Factors and Ergonomics Society.

· Subscribe to Jakob’s newsletter to get the full text of new articles emailed to you as soon as they are published.

· Read: article about Jakob Nielsen’s career in UX

· Watch: Jakob Nielsen’s 41 years in UX (8 min. video)