AI Vastly Improves Productivity for Business Users and Reduces Skill Gaps

Summary: Using generative AI (ChatGPT etc.) in business vastly improves users’ performance: by 66% across 3 case studies. More complex tasks have bigger gains, and less-skilled workers benefit the most from AI use. AI tools are forklifts for the mind.

Permalink for this article: https://www.uxtigers.com/post/ai-productivity

Measurement data about the usability of generative AI systems like ChatGPT for real business tasks is now available: 3 new studies tested very different types of users in different domains but arrived at the same conclusions. They found significant productivity increases, with the biggest gains seen for the least-skilled users. Some of the studies also found improvements in the quality of the work products.

There have been endless discussions of AI in recent months, but almost all of them are speculation: the authors’ personal opinions and likes/dislikes. Bah, humbug. If the dot-com bubble taught us anything, it’s that such speculations are worthless for assessing business use: which deployments will be profitable and which will flop. Opinion-based guesses are often wrong and lead to massive waste when companies launch products that don’t work for real users. That’s why empirical data from hands-on use while users perform actual tasks (as opposed to watching demos) is so valuable.

Here, I discuss the findings from 3 studies, which are described in detail at the end of this article:

Study 1: customer service agents resolving customer inquiries in an enterprise software company.

Study 2: experienced business professionals (e.g., marketers, HR professionals) writing routine business documents (such as a press release) that take about half an hour to write.

Study 3: programmers coding a small software project that took about 3 hours to complete without AI assistance.

In all 3 cases, users were measured while they completed the tasks: always for task time and sometimes for quality. About half the users performed the tasks the old-fashioned way, without AI assistance, whereas the other half had the help of an AI tool.

Productivity Findings

The most dramatic result from the research is that AI works for real business use. Users were much more efficient at performing their job with AI assistance than without AI tools.

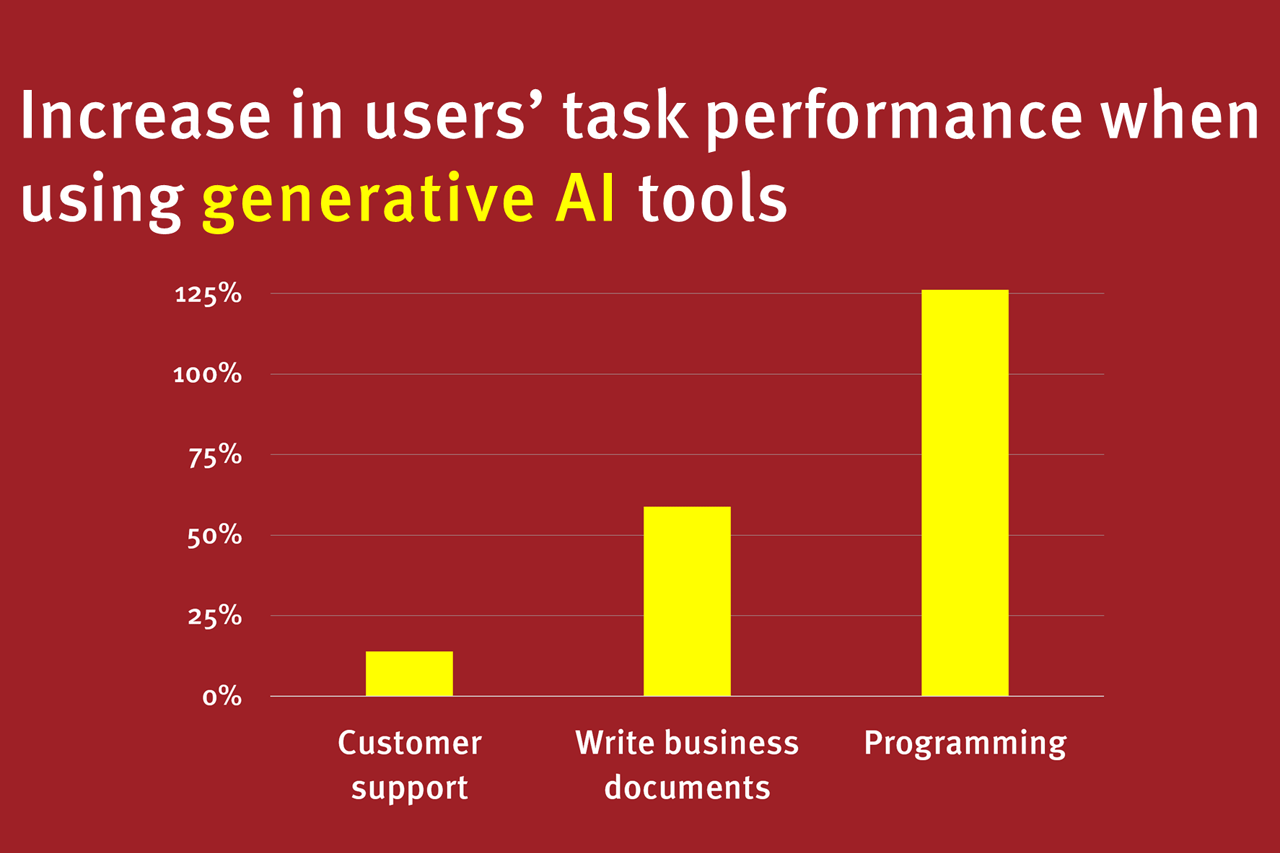

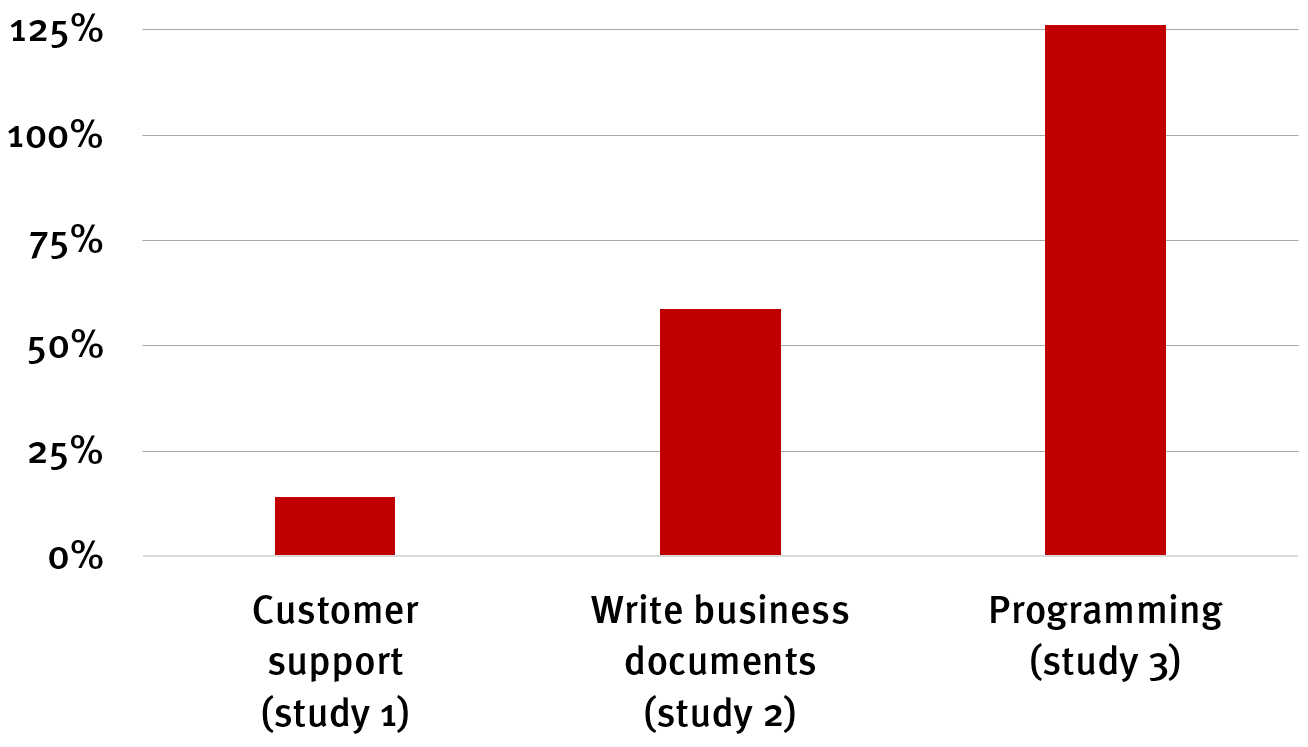

Productivity measures how many tasks a user can perform within a given time, say, a day or a week. If an employee can get twice as much work done, his or her productivity has increased by 100%. Here are the results:

Study 1: Support agents who used AI could handle 13.8% more customer inquiries per hour.

Study 2: Business professionals who used AI could write 59% more business documents per hour.

Study 3: Programmers who used AI could code 126% more projects per week.

The following chart summarizes the findings from the three research studies:

The measured increase in users’ task performance when using generative AI tools (relative to control groups of users who did not use AI), according to the three research studies discussed here.

In all 3 cases, the difference with the control group (who performed the work the traditional way, without AI tools) was highly statistically significant at the level of p<0.01 or better.

It’s clear from the chart that the change in task-throughput metrics is very different across the 3 domains studied. It looks like more cognitively demanding tasks lead to more substantial gains from AI helping the user.

Is the AI Productivity Lift a Big Deal?

On average, across the three studies, generative AI tools increased business users’ throughput by 66% when performing realistic tasks. How should we judge this number?
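The 66% figure appears to be the plain, unweighted mean of the three throughput lifts listed earlier. Here is a minimal sketch of that arithmetic. The dictionary labels are illustrative, and the equal weighting of the three studies is an assumption, since the article does not spell out how the average was formed.

```python
# Throughput lifts reported in the three studies, in percent.
lifts = {
    "Study 1: customer support": 13.8,
    "Study 2: business documents": 59.0,
    "Study 3: programming": 126.0,
}

# Unweighted mean: each study counts equally, regardless of sample size.
average_lift = sum(lifts.values()) / len(lifts)

print(f"Average lift: {average_lift:.1f}%")  # Average lift: 66.3%
```

Weighting by sample size would pull the average toward Study 1, which had by far the most participants, so the equal weighting matters for the headline number.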

A number in itself is meaningless. Only when compared to other numbers can we conclude anything.

For comparison, average labor productivity growth in the United States was 1.4% per year during the 12 years before the Covid pandemic (2007–2019), according to the Bureau of Labor Statistics. In the European Union, average labor productivity growth was 0.8% per year during the same period, according to Eurostat. (Note that during these years, the EU included the UK.)

Both numbers measure the average value created by a worker per hour worked. If employees put in more hours or additional warm bodies join the workforce, that will increase GDP, but it doesn’t mean workers have become more productive in the sense discussed here. This article discusses how much value employees create for each work hour. If this is increased, standards of living will improve. Creating more value for each hour worked is the only way to improve living standards across society in the long run.

Now we have something to compare! The 66% productivity gains from AI equate to 47 years of natural productivity gains in the United States. And AI corresponds to 88 years of growth in the European Union, which is a third more time than has passed since the formation of the European Community (the precursor to the EU) when the Treaty of Rome was signed in 1957.
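The year counts can be reproduced by dividing the AI lift by the annual growth rate. The sketch below does that for the United States and also shows a compounded variant for comparison. The function names are hypothetical, and the "implied EU rate" is an inference from the 88-year figure, not a number stated in the article.

```python
import math

AI_LIFT_PCT = 66.0    # average throughput lift across the three studies
US_GROWTH_PCT = 1.4   # US labor productivity growth per year, 2007-2019

def years_simple(total_pct: float, annual_pct: float) -> float:
    """Years needed if each year's growth is simply added up (no compounding)."""
    return total_pct / annual_pct

def years_compounded(total_pct: float, annual_pct: float) -> float:
    """Years needed if each year's growth builds on the previous year's level."""
    return math.log(1 + total_pct / 100) / math.log(1 + annual_pct / 100)

us_simple = years_simple(AI_LIFT_PCT, US_GROWTH_PCT)
us_compounded = years_compounded(AI_LIFT_PCT, US_GROWTH_PCT)

# Annual EU rate implied by the 88-year figure under simple addition.
eu_implied_rate = AI_LIFT_PCT / 88

print(f"US, simple:      {us_simple:.0f} years")
print(f"US, compounded:  {us_compounded:.1f} years")
print(f"EU implied rate: {eu_implied_rate:.2f}% per year")
```

The simple version reproduces the 47 years quoted for the United States. The 88 years quoted for the EU corresponds to an annual rate of 0.75%, which rounds to the 0.8% cited above. With compounding, the same 66% would be reached in fewer years, so the simple division is the more generous of the two readings.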

AI is a big deal, indeed!

Three caveats:

The 66% productivity gains come from the previous versions of generative AI (used when the data was collected), as represented by ChatGPT 3.5. We’re already on the next release, which is substantially better. I expect future products to improve even more, especially if they are developed from user-experience insights instead of purely being driven by geeks. (Current AI tools have big usability weaknesses.)

Only study 1 (customer support) followed workers across multiple months. Studies 2 and 3 (writing business documents and programming) only measured participants during a single use of the AI tool — often the first time a given person used AI. There’s always a learning curve in using any design, where users get better after repeated exposure to the user interface. Thus, I expect that the (already very high) gains measured in studies 2 & 3 will become much larger in real-world settings where employees keep using tools that make them substantially more effective at their job.

The productivity gains only accrue while workers are performing those tasks that receive AI support. In some professions, like UX design, many tasks may be unsuitable for AI support, and thus employees in those fields will only realize smaller gains when viewed across their entire workday.

These factors point in opposite directions. Which will prove the stronger remains to be seen. For now, since I have no data, I will just assume that they will be about equally strong. Thus, my early estimate is that deploying generative AI across all business users can potentially increase productivity by around 66%.

Productivity of UX Professionals

We currently only have minimal data on the potential benefits of AI used by UX professionals. One study suggests that ChatGPT can help with faster thematic analysis of questionnaire responses. There will likely be many other examples where we can productively employ these new tools for various UX processes.

How big can the improvements be expected to be for UX work? We can get an early, rough estimate from the data presented in this article. More complicated tasks lead to bigger AI gains. UX design is not quite as cognitively demanding as programming (which saw a 126% improvement), but it’s up there in complexity. So my guess is 100% productivity gains from AI-supported UX work.

While we await actual data, the current expectation is that UX professionals can double their throughput through AI tools. To be more precise, productivity would double for those UX tasks that lend themselves to AI support. Which ones might be a topic for a future post, but not all UX work will gain equally much from AI tools. For example, whether in discovery studies or usability testing, observational user research still needs to be conducted by humans: the AI can’t guess what users will do without watching real people doing actual tasks. Some of this “watching” might be automated, but I suspect that a human UX expert will still need to sit with users for many of the most valuable and essential studies.

Unfortunately, conducting a one-hour customer visit consumes an hour, so there’s no productivity speed-up there. (But summarizing and comparing notes from each visit might be expedited by AI.)

Again, I'm guessing at this early stage, but half of UX work will benefit from AI tools. If this half of our work can get 100% increased throughput, but the rest remains at its current pedestrian pace, UX work will only have a 33% productivity lift overall. Still good, but not as revolutionary due to the human-focused nature of our work.
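The 33% figure follows from the same reasoning as Amdahl's law: only the AI-supported share of the workday speeds up, so the overall lift is diluted by the part that stays at its current pace. A small sketch, assuming the half-and-half split refers to the share of current work time (the function and parameter names are illustrative):

```python
def overall_lift(ai_share: float, task_lift: float) -> float:
    """Overall throughput lift when only part of the workday speeds up.

    ai_share:  fraction of current work time spent on AI-supportable tasks
    task_lift: throughput lift on those tasks (1.0 means +100%, twice as fast)
    """
    # Time needed for the same workload, relative to today (today = 1.0).
    new_time = (1 - ai_share) + ai_share / (1 + task_lift)
    return 1 / new_time - 1

print(f"{overall_lift(0.5, 1.0):.0%}")  # 33%
```

Half of the work takes half as long, so the full workload now needs 75% of the original time, and 1/0.75 is a 33% throughput increase rather than the 50% one might expect from naive averaging.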

Quality Improvements from the Use of AI

Efficiency is nice, but it does us no good in the bigger picture if AI use produces vastly more bad stuff. Quality is just as important as quantity for the value-add of any innovation.

Luckily, the quality of workers’ output with AI assistance is better than that of the control groups, at least in studies 1 and 2. (Study 3, of programmers, didn’t judge the code quality produced under the two experimental conditions.)

In study 1 (customer support), the AI-using agents’ work quality improved by 1.3% compared to the non-AI-using agents, as measured by the share of customer problems that were successfully resolved. On the other hand, the customers gave identical subjective satisfaction scores for the problem resolutions under the two conditions. The small 1.3% lift was only marginally significant at p=0.1.

So in Study 1, we can conclude that AI assistance didn’t hurt work quality and likely improved it slightly.

In contrast, in study 2 (writing business documents), work quality shot through the roof when the business professionals received help from ChatGPT to compose their documents. On a 1–7 scale, the documents' average graded quality was 4.5 with AI versus 3.8 without AI. This difference was highly statistically significant at p<0.001.

Based on self-reported data from the participants, the quality improvement is likely to stem from the fact that the AI-using business professionals spent much less time producing the first draft of the document text (which was generated by ChatGPT) and much more time editing this text, producing more-polished deliverables.

Human-Computer Symbiosis

In 1960, computer pioneer J.C.R. Licklider wrote an influential paper titled “Man-Computer Symbiosis.” Licklider envisioned a future when people and computers would supplement each other in “an expected development in cooperative interaction between men and electronic computers” (my bolding added to Licklider’s quote). That day seems to have arrived, according to this early research on AI usability. Excellent results in task throughput and work-product quality come from such a symbiosis.

AI will not replace humans. The best results come when the AI and the person work together, for example, by expediting the production of draft text, leaving human professionals to focus on editing and polishing.

Narrowing Skill Gaps

The exciting findings from the research don’t stop with increased productivity and work quality.

Generative AI has a third effect: narrowing the gap between the most talented and the least talented employees.

Of course, individual differences will always exist, where some people perform better than others. But the magnitude of these differences is not a given, at least when we measure the real-world impact of inherent individual differences.

In study 1 (customer support), the lowest-performing 20% of the agents (the bottom quintile) improved their task throughput by 35% — two and a half times as much as the average agent. In contrast, the best-performing 20% of the agents (top quintile) only improved their task throughput by a few percent.

In study 2 (writing business documents), the professionals who scored the lowest when writing a document without AI help improved their scores much more than high-scoring participants when they received support from ChatGPT. The difference between good and bad writers was somewhere between 2 and 3 points (on the 1-7 quality rating scale) without using AI, and this difference narrowed to roughly a single point when using ChatGPT. (This difference assessment is my own, from eyeballing the figures in the original paper.) In the original paper, the narrowing of the skills gap is explained in terms of correlations which differ at p=0.004 but are hard to interpret for readers without good statistics skills.

In study 3 (programming), programmers with fewer years of experience benefited more from the AI tool, though the effect was only marginally significant, at p=0.06. Also, programmers who spent fewer hours per day coding benefited more from the AI tool than participants who coded for more hours per day. This second effect was quite significant, at p=0.02. Taken together, these two findings suggest that less-skilled programmers benefit more from AI than more-skilled programmers do.

While the detailed findings and statistics differ between the 3 studies, all 3 conclusions are the same: using AI narrowed the gap between the worst and the best performers.

Narrowing Skills Gap, But Biggest Productivity Gains in Cognitively Demanding Tasks

At first, I thought it to be a paradox that the worst performers within a domain are helped the most by AI, but that between domains, the gains are more prominent the more cognitively demanding the task — helping on the low end in one analysis and helping on the high end in the other analysis. How can that be?

As always, I would like to see more research, in more domains and with broader target audiences. But given the 3 case studies at hand, I have a tentative explanation for these two seemingly-opposite results.

My analysis is that generative AI takes over some heavy lifting in manipulating large amounts of data. This helps more in more cognitively demanding tasks, but it also helps more for humans who are less capable of holding many chunks of information in their brain at any given time and performing complex mental operations. By lifting some of the heavy data-manipulation burden, AI tools free up users to sprinkle on the unique human stardust of creativity, as exemplified by the editing component of the business-document task.

Creativity matters more in complex tasks than routine tasks, which is another reason AI helps more in advanced domains. Without AI, less-skilled users’ creativity is repressed by their need to devote most of their scarce cognitive resources to data handling. This explains the narrowing of the skills gap with the better performers when the less-skilled users’ brains are freed up to be more creative.

Steve Jobs famously called computers “bicycles for the mind” because they allow users to do things with less effort. Similarly, we might call AI tools forklifts for the mind because they carry the heavy lifting. In an actual warehouse, the forklift driver still needs to decide how to stack the pallets most efficiently, applying his or her human insights. But because the forklift does the lifting, humans no longer need to be musclebound beasts to move heavy pallets around. The same is true (metaphorically) when using AI.

Faster Learning

A final finding comes from study 1 (customer support), the only longitudinal research study in the current set. In this study, the support agents were followed over several months, showing that agents who used the AI tool learned faster than agents without AI support.

On average, new agents can complete 2.0 customer inquiries per hour. This increases to 2.5 inquiries per hour with experience, taking 8 months of work without using the AI tool. In contrast, the agents who started using the AI tool right off the bat reached this level of performance in only two months. In other words, AI use expedited learning (to this level of performance) by a factor of 4.

Research Weaknesses

It may seem very petty of me to complain of weaknesses in these pioneering research studies that are giving us sorely needed empirical data at a time when most analysts are blathering based on their personal opinions. And I am very grateful for this research, and I enthusiastically praise the authors of these three papers. Well done, folks! If anybody deserves more research funding, it’s you, and I look forward to reading your future, more in-depth findings.

That said, there are always areas for improvement in any research, especially in pioneering studies conducted early on tight timelines. My main complaint is that the research was conducted without applying well-known user-research methods, such as think-aloud protocols. While economists love quantitative research like that reported here, we UX researchers know that qual eats quant for breakfast regarding insights into user behaviors and why some designs work better than others.

The Three Research Studies

The rest of this article presents each of the 3 original research studies in more depth. You can stop reading now if you don’t care about the details but only want the conclusions, which I have already presented.

However, understanding the specifics of the research is valuable for interpreting the results. The detailed discussion is essential for realizing how much we still don’t know. There are enough unsolved questions for many master’s and Ph.D. theses, and even some undergraduate papers if you’re in the top 1% of BS/BA students. If you want to do this work, let me know, and I might be able to mentor you.

The three studies were very different and yet arrived at the same results. This vastly increases my faith in the conclusions. Any single study can be wrong or misleading for several reasons. But credibility shoots through the roof when different people find the same thing in different domains with different research methods. The lead authors of these three case studies were from Stanford, MIT, and Microsoft Research, respectively. They studied customer support agents resolving customer inquiries, business professionals writing routine documents, and programmers coding an HTTP server. As you’ll see if you read on, each research team used different study protocols and different metrics.

Despite all these differences, the three studies still all arrived at roughly the same conclusions. Impressive!

Study 1: Customer Support

Erik Brynjolfsson and colleagues from Stanford University and MIT conducted the first study. Brynjolfsson is the world’s leading expert on the economic impact of technology use.

The study participants were 5,000 customer support agents working for a large enterprise software company. They were provided with an AI tool built on the large language models developed by OpenAI. (Further details are not provided in the paper.) The ecological validity of this study is enhanced by the fact that the agents were studied in their natural environment performing their everyday work as usual. (In contrast, studies 2 and 3 employed artificial settings where study participants performed assigned tasks instead of their usual work.)

It was possible to measure the impact of the AI tool because it was rolled out gradually to the various agents. Thus, at any given time, some agents used the AI tool, whereas others continued to work in the traditional way, without AI.

Support agents who used the AI tool could handle 13.8% more customer inquiries per hour. This difference is highly statistically significant at the p<0.01 level. Furthermore, work quality improved by 1.3%, as measured by the share of customer problems that were successfully resolved. This slight increase was only marginally significant at p=0.1. (A second measure of quality, the net promoter score reported by the customers, was not significantly different between the two conditions. The one thing I make of this finding is that customers could not tell whether or not the agent employed AI to solve their problem.)

The study also found that AI assistance was substantially more helpful for the less skilled and less experienced support agents. The lowest-performing 20% of the agents (the bottom quintile) improved their task throughput by 35% — two and a half times as much as the average agent. In contrast, the best-performing 20% of the agents (top quintile) only improved their task throughput by a few percent, and possibly not at all (because the margin of error included the zero point, where agents would perform the same with and without the AI tool).

One possible explanation of the fact that the best agents didn’t improve with the use of AI comes from a more detailed analysis of the support sessions, which found that productivity increased the more an agent followed the recommendations from the AI, as opposed to relying on their own personal ideas. Unfortunately, the most experienced agents were the least likely to follow the AI’s recommendations — probably because they had learned to rely more on their own insights. In other words, experience backfired because the best agents were more likely to dismiss the AI, even when it was right.

The AI tool also accelerated the learning curve for new agents. On average, new agents can complete 2.0 customer inquiries per hour. This increases to 2.5 inquiries per hour with experience, taking 8 months of work without using the AI tool. In contrast, the agents who started using the AI tool right off the bat reached this level of performance in only two months. Even better, the AI-using agents reached a performance of 3.0 inquiries per hour after 5 months of experience, meaning that they had already become substantially better than the non-AI users with 8 months of experience.

Study 2: Business Professionals Writing Business Documents

Shakked Noy and Whitney Zhang from MIT conducted the second study. The study participants were 444 experienced business professionals from a variety of fields, including marketers, grant writers, data analysts, and human-resource professionals. Each participant was assigned to write two business documents within their area. Examples include press releases, short reports, and analysis plans — documents that were reported as realistic for these professionals’ writing as part of their work.

All participants first wrote one document the usual way, without computer aid. Half of the participants were randomly assigned to use ChatGPT when writing a second document, while the other half wrote a second document the usual way, with no AI assistance. Once the business documents had been written, they were graded for quality on a 1–7 scale. Each document was graded by three independent evaluators who were business professionals in the same field as the author. Of course, the evaluators were not told which documents had been written with AI help.

In the second round, the business professionals using ChatGPT produced their deliverable in 17 minutes on average, whereas the professionals who wrote their document without AI support spent 27 minutes. Thus, without AI support, a professional would produce 2400/27 = 88.9 documents in a regular 40-hour (2,400-minute) work week, whereas with AI support, that number would increase to 2400/17 = 141.2. This is a productivity improvement of 59% = (141.2-88.9)/88.9. In other words, ChatGPT users would be able to write 59% more documents in a week than people who do not use ChatGPT, at least if all their writing involved only documents similar to the ones in this study. This difference corresponds to an effect size of 0.83 standard deviations, considered large for research findings.
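The conversion from task time to weekly throughput can be written out as a short calculation. This is a sketch of the arithmetic in the paragraph above, with illustrative names:

```python
WORK_WEEK_MINUTES = 40 * 60  # 2,400 minutes, as in the text

def documents_per_week(minutes_per_document: float) -> float:
    """Documents completed in one work week at a given average task time."""
    return WORK_WEEK_MINUTES / minutes_per_document

without_ai = documents_per_week(27)
with_ai = documents_per_week(17)
gain = (with_ai - without_ai) / without_ai

print(f"{without_ai:.1f} vs {with_ai:.1f} documents per week: +{gain:.0%}")
# 88.9 vs 141.2 documents per week: +59%
```

The length of the work week cancels out: the gain is simply 27/17 - 1, so the same 59% would hold for any period over which throughput is counted.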

Generating more output is not helpful if that output is of low quality. However, according to the independent graders, this was not the case. (Remember that the graders did not know which authors had received help from ChatGPT.) The average graded quality of the documents, on a 1–7 scale, was much better when the authors had ChatGPT assistance: 4.5 (with AI) versus 3.8 (without AI). The effect size for quality was 0.45 standard deviations, which is on the border between a small and a medium effect for research findings. (We can’t compute a percentage increase since a 1–7 rating scale is an interval measure, not a ratio measure. But a 0.7 lift is undoubtedly good on a 7-point scale.)

Thus, the most prominent effect was increased productivity, but there was also a nice effect in improved quality. Both differences were highly statistically significant (p<0.001 for both metrics).

Another interesting finding is that the use of ChatGPT reduced skill inequalities. While in the control group, which did not use AI, participants’ scores for the two tasks were fairly well correlated at 0.49 (meaning that people who performed well on the first task tended to perform well on the second, and people who performed poorly on the first also did so on the second), in the AI-assisted group, the correlation between the performance in the two tasks was significantly lower at only 0.25. This lower correlation was primarily because users who got lower scores in their first task were helped more by ChatGPT than users who did well in their first task.

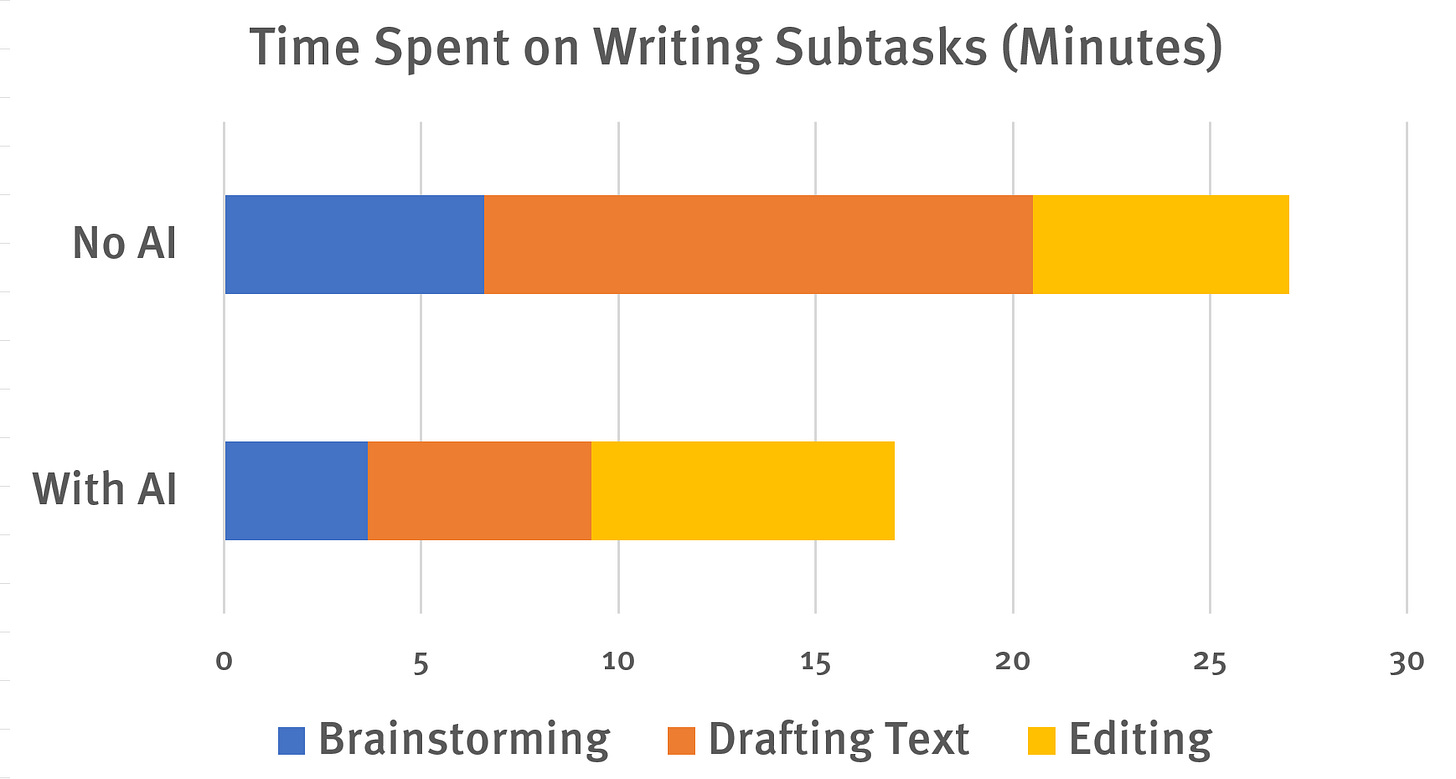

The following chart shows how business professionals in the two study groups used their time while writing the assigned business documents:

Bar chart showing the average time in minutes spent on the three stages of writing a document: [1] deciding what to do (called “brainstorming” by the researchers), [2] generating the raw text for the first draft, and [3] editing this draft to produce the final polished deliverable. The top bar shows the average times for users who wrote their document the usual way, without AI assistance, whereas the bottom bar shows the average times spent by users who employed ChatGPT.

The critical difference between the two conditions is that participants without AI assistance spent much more time generating their initial draft text. This left them relatively little time for editing. In contrast, the participants who received help from ChatGPT were very fast in generating the draft text because most of that work was done by ChatGPT. Faster text entry allowed the AI-using participants the luxury of substantially more thorough editing. This additional editing is likely the explanation for the higher-rated quality of the documents produced by these users.

Study 3: Programmers

Sida Peng and colleagues from Microsoft Research, GitHub, and MIT conducted the third study. In this study, 70 programmers were asked to implement an HTTP server in JavaScript. Half were in a treatment group and used the GitHub Copilot AI tool, whereas the others formed the control group that didn’t use any AI tools. The participants had an average coding experience of 6 years and reported spending an average of 9 hours per day coding. So while they were not superprogrammers, it would be reasonable to consider the participants to be experienced developers.

The main finding was that programmers who did not use AI completed the task in 160.89 minutes (2.7 hours) on average, whereas the programmers who had AI assistance completed the job in 71.17 minutes (1.2 hours). The difference between the two groups was statistically significant at the level of p=0.0017, a very high significance level.

This means that without AI, the programmers would be able to implement 14.9 problems like the sample task in a 40-hour work week, but the programmers who used the Copilot AI tool would be able to implement 33.7 such problems in the same time. In other words, productivity in the form of task throughput increased by 126% for these developers when they used the AI tool.

The difference between the two groups regarding their ability to complete the assignment was not statistically significant, so I won’t discuss it further.

Compared with Study 2, Study 3 has two advantages:

Study 3 tested a more advanced type of business professional, performing a more complicated task than the mainstream office workers who authored business documents in Study 2.

The tasks were more substantial, taking almost 6 times longer to perform for the participants in the control group who didn’t use AI. A classic weakness of most research is that it studies small tasks that are not representative of more substantial business problems. I still won’t say that a programming assignment that can be completed in less than 3 hours is a true challenge for professional developers, but at least it’s not a toy problem to build an HTTP server.

The study participants’ subjective estimate was that using the AI tool decreased their task time by 35% relative to the time they thought it would have taken them to code the server without the tool. This corresponds to a 54% guessed increase in productivity in the form of the number of coding problems each programmer can implement in a week.

As we know from the data, the actual productivity improvement was 126%, so why did the participants only think they had experienced a 54% productivity gain? Well, as always, we can’t rely on what people say or what users guess would happen with a different user interface than the one they used. We must watch what actually happens. Only observational data is valid. Guessed data is just that: guesses.
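A percentage cut in task time and a percentage gain in throughput are different quantities, which is why a perceived 35% time saving becomes a 54% productivity gain. The sketch below converts both the measured task times and the participants' estimate into throughput gains (function names are illustrative):

```python
def gain_from_times(minutes_without: float, minutes_with: float) -> float:
    """Throughput gain implied by two average task times."""
    return minutes_without / minutes_with - 1

def gain_from_time_saving(saving: float) -> float:
    """Throughput gain implied by a fractional cut in task time."""
    return 1 / (1 - saving) - 1

measured = gain_from_times(160.89, 71.17)
perceived = gain_from_time_saving(0.35)
measured_saving = 1 - 71.17 / 160.89

print(f"Measured gain:        {measured:.0%}")         # 126%
print(f"Perceived gain:       {perceived:.0%}")        # 54%
print(f"Measured time saving: {measured_saving:.0%}")  # 56%
```

Put in the participants' own terms, they guessed a 35% time saving when the measured saving was about 56%.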

Detailed data analysis shows that programmers with fewer years of experience benefited more from the AI tool, though the effect is only marginally significant, at p=0.06. Also, programmers who spent fewer hours per day coding benefited more from the AI tool than participants who coded for more hours per day. This second effect is quite significant, at p=0.02.

Taken together, these two findings suggest that less-skilled programmers benefit somewhat more from AI than more-skilled programmers do. (To be precise: the study didn’t truly measure programming skills, but it’s reasonable to assume that people with more years of experience and more hours of daily practice will indeed be better on average than people with less experience.)

A downside of Study 3 is that the researchers did not assess the code quality produced by the two groups. This would certainly be possible to do, both with objective metrics (say, how efficiently each implementation runs in terms of computer resources consumed) and estimated metrics (say, how easy the code would be to maintain, as estimated by a few expert programmers). Of course, I can (and will!) always ask for more from any research study. No research is perfect. As with studies 1 and 2, I applaud Peng et al. for their work.

References

[Study 1] Erik Brynjolfsson, Danielle Li, and Lindsey R. Raymond (2023): Generative AI at Work. National Bureau of Economic Research working paper 31161. https://www.nber.org/papers/w31161

[Study 2] Shakked Noy and Whitney Zhang (2023): Experimental Evidence on the Productivity Effects of Generative Artificial Intelligence. Available at SSRN: https://ssrn.com/abstract=4375283 or http://dx.doi.org/10.2139/ssrn.4375283 (for a detailed analysis of this study, see https://www.nngroup.com/articles/chatgpt-productivity/)

[Study 3] Sida Peng, Eirini Kalliamvakou, Peter Cihon, and Mert Demirer (2023): The Impact of AI on Developer Productivity: Evidence from GitHub Copilot. Available at Arxiv: https://arxiv.org/abs/2302.06590 or https://doi.org/10.48550/arXiv.2302.06590

Quiz: Check Your Understanding of This Article

Check your comprehension. Here are 8 questions about ideas and details in this article. The correct answers are given after the author’s biography.

Question 1: What is the potential benefit of AI tools in UX work?

A. AI can replace human workers

B. AI can increase productivity in half of UX work

C. AI can decrease productivity in UX work

D. AI has no impact on UX work productivity

Question 2: What is the explanation for the narrowing of the skills gap when using AI tools?

A. The paper doesn't provide any explanation.

B. AI tools help less-skilled staff more than more-skilled staff.

C. The paper suggests that the skills gap is not narrowing.

D. The paper suggests that there is no difference in the way people at different skill levels benefit from AI.

Question 3: According to the U.S. Bureau of Labor Statistics, what was the average annual labor productivity growth in the U.S. during 2007-2019?

A. 0.8%

B. 1.4%

C. 3.1%

D. 47%

Question 3: How widespread are productivity increases from using AI tools according to the studies in the article?

A. There were no significant productivity increases seen with AI tools.

B. AI tools only helped the most skilled users.

C. AI tools led to significant productivity increases, with the biggest gains seen for the least-skilled users.

D. AI tools only helped in one of the domains studied.

Question 4: Why does AI help more for humans who are less capable of holding many chunks of information in their brain at any given time and performing complex mental operations?

A. Because AI tools are better at creativity.

B. Because AI tools make tasks easier to complete.

C. Because AI tools take over some heavy lifting in manipulating large amounts of data.

D. The article doesn't provide any explanation.

Question 5: What is labor factor productivity?

A. The number of hours an employee works in a day.

B. The number of tasks an employee can perform within a given time.

C. The amount of time it takes an employee to complete a task.

D. The amount of money an employee earns.

Question 6: What is the main point of the article?

A. AI tools can help improve productivity in a variety of fields.

B. The productivity gains from AI tools are limited and have not been fully studied yet.

C. Increasing the number of employees is the only way to improve living standards.

D. The use of AI tools in business is not recommended due to their usability weaknesses.

Question 7: How much did programmers improve their productivity in the study cited in this article?

A. 59%

B. 126%

C. 1.4%

D. 0.8%

Question 8: What was the change in the quality of the user's work products (deliverables) when using AI tools?

A. Quality went down by much more than output went up.

B. Quality declined a little, but that was more than made up for by a huge increase in output.

C. Quality was unchanged, so people produced equally good results with and without AI; just more of that same quality when using AI tools.

D. The work product quality improved in 2 out of 3 studies, and the third study didn't measure quality.

More on AI UX

This article is part of a more extensive series I’m writing about the user experience of modern AI tools. Suggested reading order:

AI Vastly Improves Productivity for Business Users and Reduces Skill Gaps

Ideation Is Free: AI Exhibits Strong Creativity, But AI-Human Co-Creation Is Better

AI Helps Elite Consultants: Higher Productivity & Work Quality, Narrower Skills Gap

The Articulation Barrier: Prompt-Driven AI UX Hurts Usability

UX Portfolio Reviews and Hiring Exercises in the Age of Generative AI

Navigating the Web with Text vs. GUI Browsers: AI UX Is 1992 All Over Again

UX Experts Misjudge Cost-Benefit from Broad AI Deployment Across the Economy

ChatGPT Does Almost as Well as Human UX Researchers in a Case Study of Thematic Analysis

“Prompt Engineering” Showcases Poor Usability of Current Generative AI

About the Author

Jakob Nielsen, Ph.D., is a usability pioneer with 40 years of experience in UX. He founded the discount usability movement for fast and cheap iterative design, including heuristic evaluation and the 10 usability heuristics. He formulated the eponymous Jakob’s Law of the Internet User Experience. He has been named “the king of usability” by Internet Magazine, “the guru of Web page usability” by The New York Times, and “the next best thing to a true time machine” by USA Today. Before starting NN/g, Dr. Nielsen was a Sun Microsystems Distinguished Engineer and a Member of Research Staff at Bell Communications Research, the branch of Bell Labs owned by the Regional Bell Operating Companies. He is the author of 8 books, including Designing Web Usability: The Practice of Simplicity, Usability Engineering, and Multimedia and Hypertext: The Internet and Beyond. Dr. Nielsen holds 79 United States patents, mainly on making the Internet easier to use. He received the Lifetime Achievement Award for Human–Computer Interaction Practice from ACM SIGCHI.


Quiz Answers

Question 1: What is the potential benefit of AI tools in UX work?

Correct Answer: B. AI can increase productivity in half of UX work

Question 2: What is the explanation for the narrowing of the skills gap when using AI tools?

Correct Answer: B. AI tools help less-skilled staff more than more-skilled staff.

Question 3: According to the U.S. Bureau of Labor Statistics, what was the average annual labor productivity growth in the U.S. during 2007-2019?

Correct Answer: B. 1.4%

Question 3: How widespread are productivity increases from using AI tools according to the studies in the article?

Correct Answer: C. AI tools led to significant productivity increases, with the biggest gains seen for the least-skilled users.

Question 4: Why does AI help more for humans who are less capable of holding many chunks of information in their brain at any given time and performing complex mental operations?

Correct Answer: C. Because AI tools take over some heavy lifting in manipulating large amounts of data.

Question 5: What is labor factor productivity?

Correct Answer: B. The number of tasks an employee can perform within a given time.

Question 6: What is the main point of the article?

Correct Answer: A. AI tools can help improve productivity in a variety of fields.

Question 7: How much did programmers improve their productivity in the study cited in this article?

Correct Answer: B. 126%

Question 8: What was the change in the quality of the user's work products (deliverables) when using AI tools?

Correct Answer: D. The work product quality improved in 2 out of 3 studies, and the third study didn't measure quality.