UX Roundup: UX Superhero | Pivot to AI-UX | Luma Dream Machine | Midjourney Niji | Apple AI | Leonardo Phoenix

Summary: When UX professionals gain superpowers | The UX-STRAT conference makes a hard pivot to the AI-driven future of UX | Luma Dream Machine generates better AI video | Midjourney updates its Niji image model | Apple AI: Maybe good, maybe not | Leonardo launches ‘Phoenix’ image-generation model with improved prompt adherence

UX Roundup for June 17, 2024. (Ideogram)

When UX Professionals Gain Superpowers

I was on UserTesting’s podcast (35 min. video), hosted by UserTesting’s CEO, Andy MacMillan.

I predicted that AI will transform UX teams into small, highly efficient units. Because AI enhances individual productivity, each team member can accomplish more, so UX teams can shrink while still delivering more. This shift will lead to “super teams” in which every member is highly empowered and augmented by AI tools. These teams will be capable of delivering high-quality work at an unprecedented pace.

This “UX pancaking” flattens traditional team structures, reducing management layers and enabling faster, more effective workflows. For UX professionals, this means working in highly empowered teams with greater autonomy and agility.

The key to thriving in this new environment is to embrace AI tools and focus on enhancing your individual skills. By leveraging AI to handle routine tasks, you can concentrate on the creative and strategic aspects of your work. This shift promises to make UX design more efficient, innovative, and rewarding for all involved.

AI will effectively grant superpowers to UX professionals in 5-10 years, with each of us able to see through walls — or, more accurately, see through overwhelming piles of data to identify the actionable insights for better design. Soon, we’ll be able to accomplish the UX equivalent of jumping tall buildings: perform seemingly impossible tasks with seemingly impossible speed. Flash as a technology was bad for usability, but The Flash as a superhero may turn out to be a role model for UX. (Ideogram)

We also discussed why UX is pivotal in shaping AI’s future: we can drive technology forward while ensuring that it is both innovative and human-centered.

AI development has often overlooked the importance of usability and user experience. This oversight has led to missed opportunities in creating technology that truly serves humanity. It’s time for UX professionals to take an active role in AI development, guiding its growth in a user-centric direction.

By integrating UX principles with AI capabilities, we can unlock new potential in various fields, from lifting people out of poverty to enhancing healthcare and education. Let’s harness the power of AI, not resist it, and work towards a future where technology uplifts and empowers everyone.

Unfortunately, many UX professionals currently have a skeptical or “decel” (decelerationist) attitude towards AI. They are more interested in holding AI back than in exploiting the new opportunities it creates for the world. The root cause is that UX people have an inherent, and usually healthy, skepticism about new technologies. This skepticism stems from a desire to ensure that tech does not compromise usability, because new tech usually launches with bad design. However, this cautious approach hinders progress and innovation. Rather than resist new tech, we should focus on how to design it better.

Watch the full video of this conversation (YouTube)

Pivoting to AI-UX

Now that we’re in Year 2 of the AI revolution (counting from the release of GPT-4 in 2023), it should be obvious that there is no future for UX without AI. Two sides to this coin:

Most user interfaces will come with a heavy dose of AI, preferably in the form of new features that are deeply integrated with the user experience, not just bolted on as a superfluous chat. Such AI-infused features and UI will need user-centered design just as much as any pre-AI UI did. More, actually: with intent-based outcome specification, the AI is in the driver’s seat, executing the user’s intent, so the design requires a deeper understanding of user needs than old-school command-driven UI, where users could simply issue a new command to correct any mismatch between their goals and the system’s features.

All UX processes will be heavily supported by AI. I absolutely believe in human-AI synergy, so we’ll still need human UX specialists. In fact, we’ll need more of them than before, because software and user experience are eating the world, and the cheaper these two functions become to execute, the more of them the world will consume. In 5–10 years, a UX professional with strong AI support will be twice as productive as a UX professional who remains in denial and refuses to use our new tools. Nobody will want to hire staff who get only half as much done as their AI-supported colleagues.

We can’t just add AI to an existing design as an afterthought — especially not by simply adding natural-language chat. An AI-infused UX must be integrated and offer new features. This requires extensive user-centered design and deep understanding of user needs. (Ideogram)

Despite these points, there is still very little good work on how to achieve these two integrations between AI and UX.

AI and human factors must work together for great UX. (Midjourney)

Luckily, Paul Bryan recently announced that he’s pivoting to go all-in on AI-UX for his conferences. Paul is a great organizer of superb UX events, and I had the privilege of being the keynote speaker for one of his conferences several years ago. It was a wonderful UX conference, but (necessarily, given the year), it was based on pre-AI content. Given Paul’s skills, I have high hopes for his upcoming events, now that they have pivoted to covering the future of UX instead of the past:

Cre8 is “a single-day conference that highlights the latest AI-powered product designs in innovation hub cities around the world.” The next one-day event is in Berlin on July 3, with remote attendance available for only US $95.

STRAT is a 3-day conference “about strategic design methodologies and frameworks.” The next two events are in Amsterdam on June 23-25 and in Boulder, CO on September 9-11.

Whereas Cre8 seems fully focused on AI-UX, the program for the Amsterdam STRAT conference has only 2/3 AI-UX content and 1/3 old-school UX content (e.g., “Making the Case for Human-Centered Design to Tech Leaders,” which I am appalled to still see at a UX conference, though I’m sure Paul Bryan is correct in programming this session because the need sadly still exists). Bottom line: 2/3 of a UX conference focusing on our future is better than I see at any other major UX conference, so well done, Paul.

The conference website has a few videos of past speakers addressing AI-UX. They were recorded at last year’s STRAT conference, during Year 1 of the AI era, and are thus already somewhat outdated. (I can’t blame STRAT for delaying the publication of free content when they make their living by charging for their event. Only suckers like me publish for free without a business model.)

Great news: the leading UX-strategy conference has made a hard pivot to focus on AI-UX. (Ideogram)

Luma Dream Machine: Decent AI Video

I made a new song with Suno version 3.5, which is much improved. For example, you can now generate an entire song of up to 4 minutes in one action, without having to extend the generation as in the past. (Extending is still needed when making longer music sequences with Udio.)

I then generated animations with the newly released Luma Dream Machine. While this service is currently slow and can only create clips of 5 seconds each, the video quality is dramatically better than anything currently available to the public (outside China, where Kling is reportedly also very good).

Here’s the song: Future-Proofing Education in the Age of AI, AI-generated song (YouTube, 2:33 min.). Watch the music video to the end for a bit of a nasty surprise when Luma Dream Machine lost it. I kept this clip in my video to show the limits of current AI.

Some creators report getting better results with Luma Dream Machine when using pure image-to-video generation without any guiding text prompts. I used very sparse text prompts, but mainly let Luma “dream” from the still image I supplied it. For example, when I prompted for a “zoom-out” cut, it took it upon itself to use a drone shot that also panned around to show the audience, which is not included in the still image.

(To judge the video quality in Luma’s music video, compare it with the video I made for the same song a week earlier, using the then-leading AI video tool Runway: Music video animated with Runway, same song.)

The lyrics are based on my recent article about the same topic.

I used Ideogram’s still image for the song as the base for generating animations with Luma Dream Machine and Runway. Even the Luma animations are not up to the standards of the still image, but they come close.

Updated Midjourney Niji Image Model

Midjourney has released an updated version of its “Niji” image model, which is used to create anime-inspired images and other images with a Japanese feel. Niji now renders the various Japanese character sets better.

The main image model received only minor tweaks. We’re still waiting for Midjourney 7!

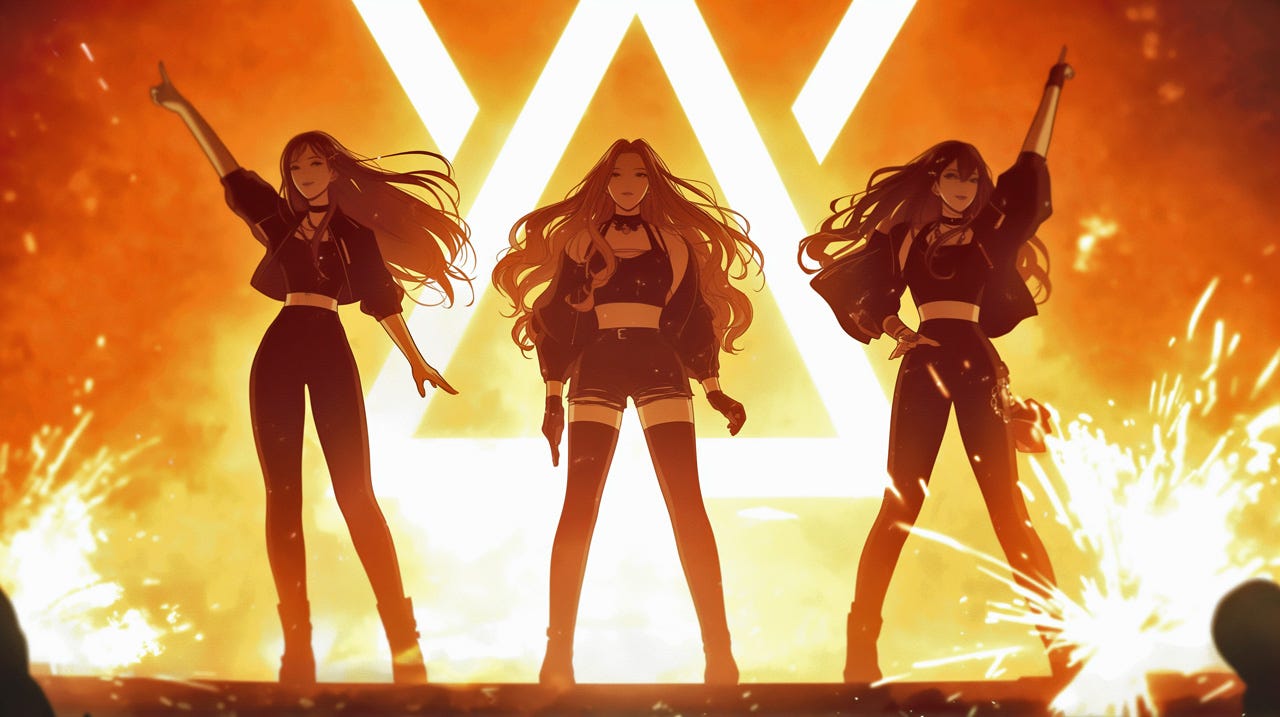

I don’t think the images were particularly improved in my experiments, which admittedly didn’t include Japanese text. Here’s an example of my standard prompt for a 3-member K-pop group dancing on stage in a TV production. (I’m showing you the “color drawing” version. I also prompted for photorealistic images, but they aren’t as interesting.) The main difference is that the newer image seems to have better prompt adherence: it looks more like a TV performance, whereas the February image looks more like the group is posing rather than dancing.

Niji 6 image generated February 2024. (Midjourney)

Niji 6 image generated June 2024 after an upgrade to the image model. (Midjourney)

Before we get too carried away with praising Midjourney’s prompt adherence, let me show you this image, which I also got when prompting for a “3-member K-pop group.”

Apple AI: A Resounding Maybe

Last week, Apple announced several new AI-based products, including some underwhelming image-generation features and a promising improvement of its AI agent, Siri, which was previously severely underpowered but supposedly will get substantially better language skills and better integration with the apps and data on the phone.

This seems to fulfill many of the UX advances I discussed in April for AI as integration glue between previously fragmented individual apps.

Certainly, the point that you say what you want but not how to achieve it is part of the shift from command-based to intent-based outcome specification, which I described as the foundation for AI-based user experience in May 2023.

Apple’s approach seems promising for usability. However, for all AI-based interactions, the proof is in the pudding: details matter. Of course, these features work in demos. How well will they work for real users? That remains to be seen. Old Siri makes me skeptical: for so many years, Apple never managed to make it good.

I want to see real users perform real tasks in a real-world context before I make my final judgment on Apple’s new AI.

Will the new, smarter Siri finally work well for users? It remains to be seen whether Apple’s AI strategy will deliver a nutritious user experience or a spoiled one. (Ideogram)

Leonardo Phoenix: Much Improved Prompt Adherence

Leonardo has a new image-generation model out in preview. It is touted as having much improved prompt adherence, which I welcome since complex scenes with multiple elements have been a very weak point of Leonardo in the past.

As an experiment, I tried to make images of a darts game. I could never get good drawings of this from Midjourney or Ideogram for my article last week about the benefits of extensive experimentation in design. (Follow this link to see the Ideogram image I ended up using.)

The following examples show that Leonardo does adhere to many aspects of my prompt: the two dart players are a man in a blue suit and a woman in a green blouse and red skirt. Check, in every image. They are in a traditional English pub with a dartboard hanging on the wall. Double-check, except for one image where the dartboard is standing in a spot that would severely endanger a pub patron sitting behind it. The other patrons are drinking. Check (though too many of them wear the same blue suit as the male darts player). The couple is playing darts. Almost never. The woman is pointing to the dartboard. Sometimes. A dart has hit the bullseye. Never.

Good, but not perfect, prompt adherence when trying to get Leonardo’s new Phoenix model to draw a game of darts.

OK, no current AI image generator does well with darts. For my second experiment, I turned to the heavily photographed game of soccer, for which any AI model should have digested plenty of training images. (You can also see the soccer images I got from Midjourney in the linked article, where I used them to illustrate the benefits of taking many shots on goal.)

Leonardo’s images of a soccer match where one player is about to take a shot at goal. It has good prompt adherence for the players’ uniforms, but doesn’t always seem to understand game dynamics. And there’s an uncalled-for “high fashion” label in one image. Here, I also experimented with Leonardo’s preset creative styles, which work well.

Ideogram and Leonardo Phoenix are now about equal in prompt adherence for complex scenes. On the other hand, Leonardo makes much prettier images, especially after upscaling. (Ideogram doesn’t have an upscaler, so my usual workflow takes Ideogram’s images and upscales them with Leonardo.) Finally, Leonardo can create higher resolution images and has an abundance of features that are missing in Ideogram. For novice users, this is to Ideogram’s advantage (fewer features = easier UI), but for skilled users, Leonardo can do more. Image iteration is also easier in Ideogram.

Now that Leonardo is back in business with good prompt adherence and strong text typesetting, they need a few rounds of usability testing and UX enhancements.

About the Author

Jakob Nielsen, Ph.D., is a usability pioneer with 41 years of experience in UX and the Founder of UX Tigers. He founded the discount usability movement for fast and cheap iterative design, including heuristic evaluation and the 10 usability heuristics. He formulated the eponymous Jakob’s Law of the Internet User Experience. He has been named “the king of usability” by Internet Magazine, “the guru of Web page usability” by The New York Times, and “the next best thing to a true time machine” by USA Today.

Previously, Dr. Nielsen was a Sun Microsystems Distinguished Engineer and a Member of Research Staff at Bell Communications Research, the branch of Bell Labs owned by the Regional Bell Operating Companies. He is the author of 8 books, including the best-selling Designing Web Usability: The Practice of Simplicity (published in 22 languages), the foundational Usability Engineering (27,129 citations in Google Scholar), and the pioneering Hypertext and Hypermedia (published two years before the Web launched).

Dr. Nielsen holds 79 United States patents, mainly on making the Internet easier to use. He received the Lifetime Achievement Award for Human–Computer Interaction Practice from ACM SIGCHI and was named a “Titan of Human Factors” by the Human Factors and Ergonomics Society.

· Subscribe to Jakob’s newsletter to get the full text of new articles emailed to you as soon as they are published.

· Read: article about Jakob Nielsen’s career in UX

· Watch: Jakob Nielsen’s 41 years in UX (8 min. video)
