UX Roundup: OpenAI Debacle | Productive AI in UX | Better Headlines | Size Matters | Faster Horses | Solo UXer

Summary: Improving productivity of UX work by using AI | Writing good headlines for UX research slides | Sometimes you should build what people tell you they want | 6 Steps to professional growth for a solo UX practitioner

UX Roundup for November 27, 2023.

Implications of OpenAI’s Near-Death Debacle

Ten days ago, OpenAI came close to being destroyed by its own board in a truly mismanaged clown show. (The Financial Times has a great analysis of the developments.)

OpenAI’s old board was a clown show, consisting of only 4 people, who would rather destroy their own company than see it introduce improved products for the benefit of humanity. (Dall-E)

As far as the story has leaked to the outside world, the problem was that the old OpenAI board was a group of crazy Doomer zealots who took it upon themselves to judge what would be good for humanity. Speaking as a member of humanity, I prefer not to have such zealots decide what software I am allowed to use.

It is distinctly unhealthy for important products to be under the control of a small board of unaccountable elitists. It is much better to have the future of AI and the future of the world economy decided by millions of developers who invent new ideas and introduce new services, and by billions of users who buy the services they find useful and discard those they don’t like.

The old OpenAI board was telling 3rd party developers that it didn’t want them, didn’t want to support them, and didn’t want the GPT store to succeed (or at least that no independent products would be allowed to succeed on its platform). Imagine if Apple said, “We are committed to very slow development of mobile computing to ensure that nothing ever goes wrong.” If so, who would want to rely on Apple for app success? Everybody would go Android-only. So naturally, Apple doesn’t say that, but advances iOS and iPhone hardware as aggressively as it can! (This is despite the fact that mobile phones have killed many more people than AI ever did, by causing driver distraction: about half a million deaths per decade worldwide.)

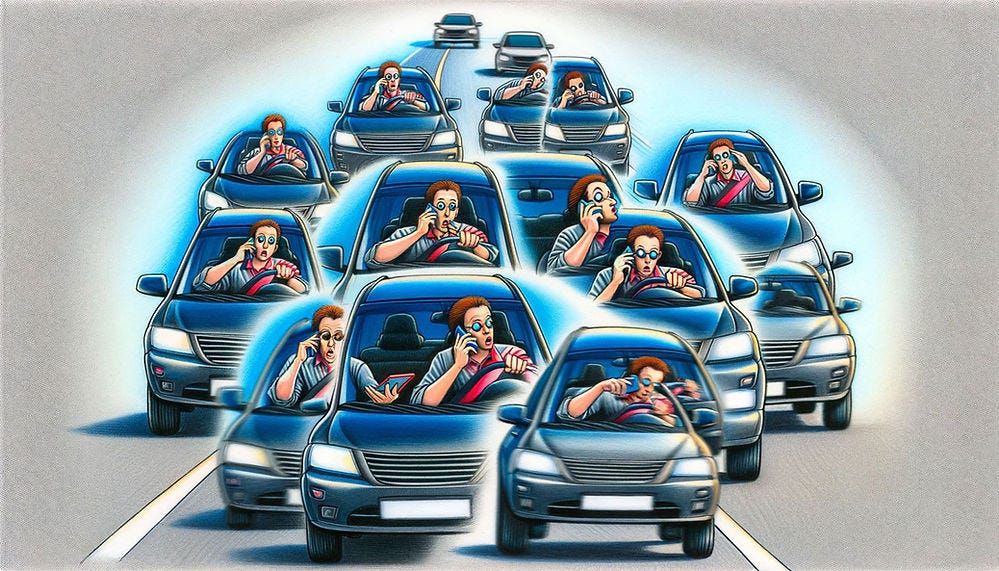

Driver distraction from mobile phones causes about 0.5M traffic deaths per decade worldwide. Even though this Dall-E-generated image depicts drivers holding their phones, note that hands-free use of mobile phones is just as dangerous. Being on the telephone while driving is dangerous not because you have one hand on the phone instead of the steering wheel, but because of the cognitive load of conversing with a remote party who’s not present in the vehicle.

Luckily, the Board of Clowns failed in their attempt to destroy advances in AI, probably because of the unprecedented vote of no confidence in their leadership by 97% of the company’s employees. Most of the Board resigned, the old CEO was brought back, and OpenAI and ChatGPT are still in business.

The new OpenAI board consists of one of the clowns from the old board plus two seemingly sensible new members. (Gencraft)

However, the debacle has shown the risk for outside developers of relying on a company with unreliable governance. Unless OpenAI becomes a normal company with a board that’s accountable to shareholders, customers, and employees, there’s a risk of another blowup.

I would like to have non-profit organizations conduct AI research; we need more such research, and we need it to be independent of the product companies. But these non-profits can’t themselves be the product companies behind major platforms. We need stability in the platforms so that 3rd party developers can produce the next generation of innovations beyond the large language models, image-generation models, and the like.

Most of the value in AI will come from specialized applications, vertical applications, and internal enterprise applications. The platforms will be worth plenty, but only on the order of hundreds of billions of dollars, whereas the contribution of AI to the world population’s standard of living will be many trillions of dollars. Most of this value will be consumer surplus, but there will probably also be one or two trillion dollars realized by application developers.

Consumer surplus is a basic concept in economics, driven by the demand curve: many customers would have been willing to pay more for a product (or service) because they gain more value from it than the price. The difference between the value realized and the price charged is the consumer surplus. Conversely, some potential customers would gain less from a product than its price, and so they don’t buy. The pricing challenge for a vendor is to set the price low enough not to lose too many customers but high enough to still make money. The difference between the price and the cost of providing the product is the vendor’s profit.
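To make the demand-curve tradeoff concrete, here is a minimal Python sketch with ten hypothetical customers. The willingness-to-pay values, the unit cost of 4, and the names `evaluate_price` and `Outcome` are all made up for illustration; nothing here comes from real data. Each customer buys only if the product is worth at least the price, the consumer surplus is what buyers value the product at beyond the price, and the vendor profit is the margin per sale times the number of sales.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    price: float
    buyers: int
    consumer_surplus: float
    vendor_profit: float


def evaluate_price(willingness_to_pay: list[float], price: float, unit_cost: float) -> Outcome:
    """Score one price: who buys, how much surplus buyers keep, what the vendor earns."""
    # A customer buys only if the product is worth at least the price to them.
    buyers = [wtp for wtp in willingness_to_pay if wtp >= price]
    # Consumer surplus: value each buyer gets beyond what they paid.
    surplus = sum(wtp - price for wtp in buyers)
    # Vendor profit: margin per sale times number of sales.
    profit = (price - unit_cost) * len(buyers)
    return Outcome(price, len(buyers), surplus, profit)


# Hypothetical willingness-to-pay of ten potential customers (made-up numbers).
wtp = [5, 10, 15, 20, 25, 30, 40, 60, 80, 100]
unit_cost = 4  # hypothetical cost of serving one customer

for price in (10, 30, 60, 100):
    o = evaluate_price(wtp, price, unit_cost)
    print(f"price={o.price:>3}  buyers={o.buyers:>2}  "
          f"surplus={o.consumer_surplus:>3}  profit={o.vendor_profit:>3}")
```

With these made-up numbers, raising the price from 10 to 30 to 60 increases the vendor’s profit (54, 130, 168) while shrinking the consumer surplus (290, 160, 60); at a price of 100, only one customer still buys and profit falls to 96.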

My prediction is that in AI, the consumer surplus will often be much larger than the vendor profit. For example, in one case study, the estimated value of using the AI copilot for Microsoft Office was $750 per user per month, and yet Microsoft only charges $30 per seat per month. In this example, the consumer surplus is $720 ($750 value minus $30 price). We don’t know Microsoft’s cost of providing the AI copilot, but since inference compute is known to be expensive for GPT-4, I’ll just estimate $15. If this is true, Microsoft earns $15 per seat, a 50% profit margin. I actually think their true profit is likely smaller. Under these rough assumptions, the consumer surplus is 48 times bigger than the vendor profit ($720 / $15) for AI use in the enterprise.
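The arithmetic in the Copilot example can be checked with a few lines of Python. The $750 value and the $30 price come from the case study; the $15 cost is the rough estimate from the text, not a published figure. The $20 cost in the second call is a purely hypothetical scenario showing what happens if the true profit is smaller: the surplus-to-profit ratio grows. The function name `ai_copilot_economics` is invented for this sketch.

```python
def ai_copilot_economics(value_per_user: float, price: float, cost: float) -> dict[str, float]:
    """Per-seat monthly economics: what the customer keeps vs. what the vendor keeps."""
    consumer_surplus = value_per_user - price  # value realized beyond the price paid
    vendor_profit = price - cost               # price minus cost of providing the service
    return {
        "consumer_surplus": consumer_surplus,
        "vendor_profit": vendor_profit,
        "profit_margin": vendor_profit / price,
        "surplus_to_profit_ratio": consumer_surplus / vendor_profit,
    }


# $750 value and $30 price from the case study; $15 cost is a rough estimate.
result = ai_copilot_economics(value_per_user=750, price=30, cost=15)
print(result)
# {'consumer_surplus': 720, 'vendor_profit': 15, 'profit_margin': 0.5, 'surplus_to_profit_ratio': 48.0}

# Hypothetical: if serving a seat actually cost $20, profit shrinks and the ratio rises.
pessimistic = ai_copilot_economics(value_per_user=750, price=30, cost=20)
print(pessimistic["surplus_to_profit_ratio"])  # 72.0
```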

Rough estimates for AI once it has matured in one to two decades:

Consumer surplus: $40 trillion (that’s what you and I are getting)

Application vendor profits: $2 trillion (that’s what’s up for grabs)

Platform vendor profits: $200 billion (that's what OpenAI and the like will be getting)

The OpenAI debacle has shown the danger of relying too heavily on a single platform. I hope we will soon get middleware solutions that abstract away the individual AI platform APIs, so that a solutions provider can swap out AI platforms if one becomes unreliable (as almost happened with OpenAI’s GPT) or falls behind in offering the best technology (which seems to have been the case with Google, which dropped from world-leader status in AI to currently being an also-ran).

Let a thousand flowers bloom in AI so that humanity benefits from rapid innovation in a wide range of fields. The prudent approach is to develop these solutions to be as independent as possible of the underlying general AI models so that each application can swap out the model as needed. (Dall-E)
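The middleware idea can be sketched as a thin routing layer: application code talks to one interface, and each AI platform sits behind an adapter that can be reordered or replaced. This is a minimal Python sketch under stated assumptions: `TextModel`, `Router`, `ModelUnavailable`, and `FakeModel` are hypothetical names, and the fake adapters stand in for real vendor SDKs, whose actual APIs are not shown here.

```python
from typing import Protocol


class ModelUnavailable(Exception):
    """Raised by an adapter when its platform cannot serve the request."""


class TextModel(Protocol):
    """Hypothetical common interface every platform adapter implements."""
    name: str

    def complete(self, prompt: str) -> str: ...


class Router:
    """Tries models in order of preference; application code never names a vendor."""

    def __init__(self, models: list[TextModel]):
        if not models:
            raise ValueError("need at least one model")
        self.models = models

    def complete(self, prompt: str) -> tuple[str, str]:
        errors = []
        for model in self.models:
            try:
                return model.name, model.complete(prompt)
            except ModelUnavailable as exc:
                errors.append(f"{model.name}: {exc}")  # fall through to the next platform
        raise ModelUnavailable("; ".join(errors))


class FakeModel:
    """Hypothetical stand-in; a real adapter would wrap one vendor's SDK."""

    def __init__(self, name: str, up: bool = True):
        self.name = name
        self.up = up

    def complete(self, prompt: str) -> str:
        if not self.up:
            raise ModelUnavailable("platform down")
        return f"[{self.name}] answer to: {prompt}"


# The preferred platform is down, so the router falls back to the second one.
router = Router([FakeModel("platform-a", up=False), FakeModel("platform-b")])
print(router.complete("Summarize these interview notes"))
```

Swapping platforms then means changing the list passed to `Router`, not rewriting the application. A production version would also need to handle differences in prompt formats, pricing, and output quality between models, which this sketch ignores.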

Productive Use of AI In UX Work

Annie Wang, who is the UX Lead & Manager at Zoocasa in Canada, posted a comment on one of my articles. She mentioned that “having introduced AI software into my UX team, I've been thrilled to see our productivity soar over the year.” Naturally, I was curious and asked Wang what in particular made AI so productive for her team. Here’s what she said:

“In the past, we had to spend a lot of time summarizing user insights, clipping videos, and presenting them to stakeholders. It was a labor-intensive task. With AI tools, we’ve seen a remarkable improvement. A platform I’ve found useful is Grain, which has increased our productivity by approximately four times.

We also use AI to improve our UXR documentation. This has made finalizing our documentation easier and allowed us to concentrate on the content rather than on the wording.

Overall, AI has been a game-changer in many respects, but there’s still room for growth, particularly for handling large datasets effectively.”

Wang warned that when asked to analyze large volumes of data, AI has failed by omitting significant amounts of the input.

See also the article on how to get started with using AI in UX work for more ideas of UX activities that benefit from AI.

AI use enhanced the productivity of a UX team in several ways. (Dall-E)

Landing Big Ideas: Crafting Captivating Headlines for UXR Slides

Mani Pande, Research Director at Cisco, provides 11 good guidelines for writing better headlines in slide decks that present user research findings. She advises treating your slides as newspaper articles when it comes to the headline. It’s a separate job to write headlines, and this should be done after completing the content. Use the same workflow as a newspaper, where specialist editors craft the headlines after the journalists are done.

I would add one piece of advice: Use AI as your subeditor. Paste in your content and ask for 10 headlines. Then, ask for 10 more creative ones! Here are 20 alternative headlines for this short piece, which I received in about 10 seconds. First, the “regular” headlines:

The Power of Headlines: Why UXRs Should Pay Attention

Crafting Catchy Headlines: A UXR's Guide to Slide Deck Success

Headlines That Hook: Tips for UXRs to Make Slides Pop

Beyond the Byline: Why UXRs Should Channel Their Inner Editor

From Newsroom to Boardroom: A UXR's Guide to Headline Mastery

Words that Work: Headline Tips to Enhance UXR Presentations

The Art of Brevity: UXR Slide Headlines Done Right

Stand Out Slides: Headline Hacks for UXRs

Clickworthy Slides: Writing Headlines that Captivate UXR Audiences

The Power of Persuasion: Headlines to Capture UXR Stakeholders

And then the “creative” headlines:

Hook, Line and Sinker: Crafting Killer Headlines for UXR Slides

Baiting Your Audience: Seductive Headlines for UXR Presentations

Luring Your Stakeholders: Magnetic Headlines for UXR Slides

Reeling Them In: How to Write Irresistible Headlines as a UXR

Take the Bait: Compelling Headlines for UXR Decks that Draw Audiences In

Baiting the Hook: Tactics for Seductive UXR Headlines

Luring Your Audience: The Art of Persuasive Headlines for UXRs

Setting the Hook: How to Grip UXR Stakeholders with Headlines

Landing Big Ideas: Crafting Captivating Headlines for UXR Slides

Master Baiter: A UXR's Guide to Irresistible Headlines

A little too heavy on the fishing metaphors, so AI disappointed me with its creativity game this time. But usually, I get something useful from this exercise. As you can see, I used Creative option 9 for my actual headline.

Mani Pande exercising her fishing skills in preparation for writing her next headlines. (For the 3 readers who can’t get a joke, I want to clarify that this is not actually her, just what Midjourney dreamt up when I asked for an image of a woman gone flyfishing.)
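The two-round subeditor workflow from the headline section (ask for 10 headlines, then for 10 more creative ones in the same conversation) can also be scripted. This is a minimal Python sketch: `ask_llm` is a hypothetical callable standing in for whatever chat model you use, and the role/content message format is an assumption modeled on common chat APIs. The `fake_llm` stub lets the sketch run without any vendor SDK.

```python
from typing import Callable

Message = dict[str, str]  # assumed shape: {"role": ..., "content": ...}


def brainstorm_headlines(
    content: str, ask_llm: Callable[[list[Message]], str], n: int = 10
) -> tuple[list[str], list[str]]:
    """Round 1: regular headlines. Round 2: more creative ones, in the same conversation."""
    history: list[Message] = [
        {"role": "user",
         "content": f"Write {n} alternative headlines for this piece, one per line:\n\n{content}"}
    ]
    regular_text = ask_llm(history)
    # Keep the first answer so the model knows what "more creative" is relative to.
    history.append({"role": "assistant", "content": regular_text})
    history.append({"role": "user", "content": f"Now write {n} more headlines that are more creative."})
    creative_text = ask_llm(history)

    def to_list(text: str) -> list[str]:
        return [line.strip() for line in text.splitlines() if line.strip()]

    return to_list(regular_text), to_list(creative_text)


def fake_llm(messages: list[Message]) -> str:
    """Hypothetical stub: labels headlines with the number of user turns so far."""
    round_no = sum(1 for m in messages if m["role"] == "user")
    return "\n".join(f"Headline {round_no}.{i}" for i in range(1, 4))


regular, creative = brainstorm_headlines("Short piece about slide headlines", fake_llm, n=3)
print(regular)
print(creative)
```

Keeping the first answer in the conversation history matters: the second request for “more creative” headlines only makes sense if the model can see what it already proposed.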

Sometimes You Should Build What People Tell You They Want

I always say to ignore what customers tell you. Pay attention to what users do, not what they say.

I stand by this advice, but as always in UX, there are exceptions. Sometimes, you are better off just giving users what they want. Ben Dressler from Sweden put it this way in a provocative post: “90% of the time, you should build faster horses.” (This is an allusion to the famous quote attributed to Henry Ford: “If I had asked people what they wanted, they would have said faster horses.”)

If we always delivered faster horses, we would never get the motor car. But big innovations are the exception. Most of the time, incremental improvements are what create GDP growth and increase company profits, and they are also what users want! Even so, I still think you are better off watching users before deciding which incremental improvements to build.

A faster horse or an automobile? It’s not always clear what to deliver to customers. (Dall-E.)

6 Steps to Professional Growth for a Solo UX Practitioner

Strategy for a UX professional to gain skills and professional acumen despite being the only UX specialist in a company: independent study, professional reflection, guerrilla usability, interns, and portfolio maintenance (since you will eventually need a new job). Don’t be raised by wolves.

The full article has much more detail, but here’s an infographic summarizing the 6 steps:

Feel free to copy or reuse this infographic, provided you give https://jakobnielsenphd.substack.com/p/solo-ux-professional as the source.

About the Author

Jakob Nielsen, Ph.D., is a usability pioneer with 40 years of experience in UX. He founded the discount usability movement for fast and cheap iterative design, including heuristic evaluation and the 10 usability heuristics. He formulated the eponymous Jakob’s Law of the Internet User Experience. He has been named “the king of usability” by Internet Magazine, “the guru of Web page usability" by The New York Times, and “the next best thing to a true time machine” by USA Today. Previously, Dr. Nielsen was a Sun Microsystems Distinguished Engineer and a Member of Research Staff at Bell Communications Research, the branch of Bell Labs owned by the Regional Bell Operating Companies. He is the author of 8 books, including the best-selling Designing Web Usability: The Practice of Simplicity (published in 22 languages), Usability Engineering (26,283 citations in Google Scholar), and the pioneering Hypertext and Hypermedia. Dr. Nielsen holds 79 United States patents, mainly on making the Internet easier to use. He received the Lifetime Achievement Award for Human–Computer Interaction Practice from ACM SIGCHI.

Subscribe to Jakob’s newsletter to get the full text of new articles emailed to you as soon as they are published.
