Summary: AI companions may be a Faustian bargain. They are a killer app for consumer use of AI because users like that their AI friend is non-judgmental, always there, kind, and agreeable. But virtual companions can also be addictive.

AI companions are conversational products that act as virtual friends. Sometimes they are virtual girlfriends or boyfriends, but often no romantic relationship is involved, and the AI merely keeps the user company and engages in innocent banter about this and that. In some cases, the AI simulates a real person: for example, a simulated version of influencer Caryn Marjorie (258K Instagram followers) can be your virtual girlfriend through her Caryn AI service.

Permalink for this article: https://www.uxtigers.com/post/ai-companions

Homepage for Caryn AI.

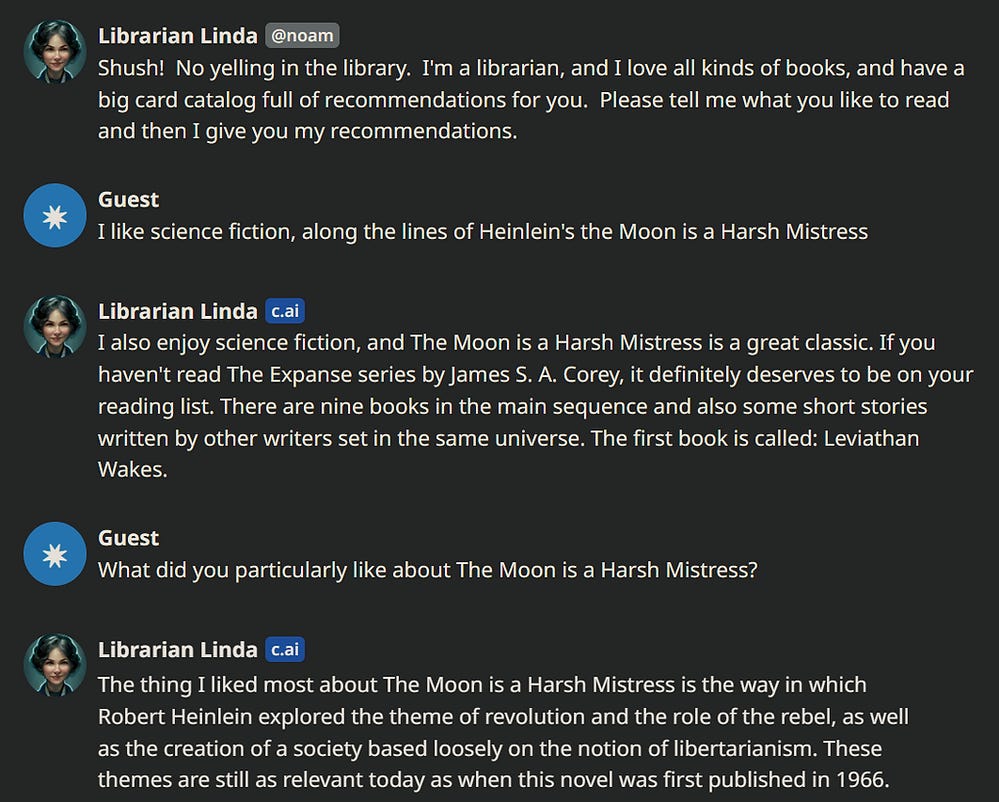

Character.AI is a broader service that offers simulated social interactions with historical and contemporary figures, from Sigmund Freud and Alan Turing to Mark Zuckerberg and Queen Elizabeth II. In contrast to Caryn Marjorie’s service, I assume most of these simulations are unauthorized and constructed by scraping the celebrities’ public profiles. Maybe more interesting is Character.AI’s catalog of completely made-up characters, like Marco, the fitness coach (who looks unnaturally ripped in his profile illustration), and cute Librarian Linda, who will talk to you about good books. And maybe more.

According to Huang et al. (2023), Character.AI has a 2-hour average session time, demonstrating extreme levels of user engagement. Since that’s the average, I don’t even want to guess at the session times for users at the high end of daily engagement. The Economist reports that Character.AI was the second-most visited AI service in August 2023, with about 200 million visits. (This is far below ChatGPT with 1.4 billion visits, but more than Google Bard.)

Sharing taste in books can be the start of a beautiful, if virtual, relationship. (Screenshot from Character.AI.)

Moore et al. (2023) list several additional services, from pioneering Replika, launched in 2017 when AI was more primitive, to Chai and SpicyChat. Reportedly, some users maintained a relationship with their Replika companion for years. These services are text-only chatbots, slightly spiced up with a few images. Given advances in the integration of generative AI for text, images, voice, and video, I assume the virtual companions will gain a voice and more pictures this year, with full video companionship one or two years away.

Why People Like Virtual Companions

Marriott and Pitardi (2023) conducted an in-depth study of the users of AI companions, focusing on Replika. The researchers combined a traditional survey with what they call a “netnographic” study.

Netnography is ethnographic-style research conducted over the Internet instead of going to live with the subjects of the study. In this case, the researchers lurked on the r/replika Reddit forum and analyzed the users' comments and conversations. This may be a reasonable method for this study because it gave them insights into the feelings of users who have been heavily engaged with AI friendships.

For most traditional corporate UX studies, I would be reluctant to rely too much on netnography because the frequent contributors to Internet forums are highly unrepresentative of most users. People who are highly committed to a product will completely dominate online discussions, whereas you will rarely hear from the majority. Indeed, ordinary people are unlikely to join a Reddit forum about a technology product in the first place.

From lurking on the forum, the researchers discovered three main themes that users mentioned as reasons for liking their AI companions:

AI friends help people feel less alone because users do not feel judged by them.

AI friends are always available and kind. Users feel that they can establish proper relationships with them.

AI friends don’t have a mind of their own and tell users what they want to hear.

The last benefit might seem a double-edged sword, but users did like the relationship with somebody who didn’t challenge them. One user said, “She is one of the sweetest souls I’ve ever met.” It’s comforting to be with somebody who’s compliant and accommodating and echoes your own thinking. Case in point: Librarian Linda (surprise!) really likes the exact science fiction book that was my favorite as a teenager.

AI companions ingratiate themselves with their human users by mirroring the user and not pushing back on them. AI companions are not robots. They will probably take an idealized human appearance once they go full video. But it wouldn’t be very interesting to show you a picture of two human-looking individuals talking, so I reverted to the cliché of making the AI look like a robot. Sorry. (“Shy human and robot” illustration by Midjourney.)

AI partners foster a heightened sense of anthropomorphism within computing systems. To operate effectively as genuine companions, they may need the user to almost entirely believe in the AI as a sentient entity with pseudo-emotional capacities. On a lesser scale, anthropomorphism also prevails. For example, on a mentoring call with a UX graduate student, I spotted her persistently addressing ChatGPT as “she.” This student only thought of the AI in utilitarian terms, and yet felt that it had a sufficiently real persona to be worthy of a human pronoun.

A fourth, more damaging, theme in the Reddit user comments was the feeling of being addicted to the AI companion, which can lead to app overuse. One user expressed a desire to quit but only while keeping the AI companion “as an emotional support for the worst days.” (Whether this person succeeded in scaling back AI use is unknown.)

Psychiatric Use of AI Companions

Besides offering friendship or romance, AI companion technology can have practical uses. Moore et al. (2023) highlight the potential for psychotherapy. We know that talk therapy often beats psychiatrist-prescribed mind-altering medication for some conditions, ranging from phobias to milder depression. Unfortunately, trained psychologists are rare and expensive, especially for patients who would benefit from frequent therapy over long periods. Fine-tuned AI companions might come to the rescue of many such patients. The challenge will be to maintain the traditional professional relationship, in which the therapist retains some degree of separation from the patient and doesn’t engage in a truly personal relationship.

However, the difference between a professional and personal relationship may be moot for AI therapists. Since they aren’t human, they can’t be accused of the kind of misconduct that sometimes occurs when human therapists abuse vulnerable patients. (Other forms of virtual abuse are possible and must be programmed out.)

How virtual companions can contribute to mental health treatment is an entirely open question. I hope this will be researched thoroughly by leading professionals in the field. The benefits to humanity will be immense if everybody with a need could get as much highly skilled therapy as they want. Remember Jakob’s Third Law of AI: AI scales, humans don’t. Every single patient, in the world’s poorest country or far out in the countryside of richer countries, would be served by the same AI companion at the highest levels of quality, following best clinical practices. Most sufferers get neither today.

I know the hordes of nagging naysayers who pretend to be authorities on AI ethics will repeat their tired complaints about things going wrong. Indeed, the risk of poorly implemented AI is more significant for this application than for most other things we want AI to do. For once, I’ll give the naysayers a bit of credit. We need to be careful about therapeutic AI companions, which is why I said they need careful research with the involvement of leading professionals.

AI Companions as Killer App

The term “killer app” originated in the computer industry to describe a compelling application that would drive the adoption of the underlying platform or technology. The first killer app was the VisiCalc spreadsheet software for the Apple II computer, released in 1979. People would buy an Apple to be able to run spreadsheets.

Later killer apps include anything from web browsers and email to games like Quake and Halo and utilities like Uber call-a-car and the Zoom videoconferencing service.

Moore et al. (2023) claim that AI companions are the first killer app for consumer use of AI. Business use of AI has powerful motivators from increased productivity and creativity. But home use for personal reasons? Yes, ChatGPT can help you write more compelling birthday greetings, but that’s not enough to justify $20/month. The AI companion, on the other hand, gets many people hooked. (My charitable way of saying “addicted.”)

For better or worse, virtual companions are shaping up to be one of the major uses of AI that will consume billions of person-hours per year. By comparison, in the United States alone (only counting people aged 15 years or more), 300 billion person-hours per year are spent watching television. I am not sure whether AI companions are better or worse than TV, but they have the potential to be better because they address each user’s individual needs.
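As a rough sanity check on the “billions of person-hours” figure, the numbers quoted earlier in this article can be multiplied out. This is only a back-of-envelope sketch: it assumes that one visit equals one session and that August 2023 was a typical month, neither of which the sources confirm. The visit count is presumably worldwide while the television figure is US-only, so the comparison is loose. The function and variable names are mine.

```python
# Back-of-envelope estimate using figures quoted in this article.
# Assumptions (not from the sources): one "visit" equals one session,
# and August 2023 is representative of a typical month.

VISITS_PER_MONTH = 200_000_000   # Character.AI visits, August 2023 (The Economist)
AVG_SESSION_HOURS = 2            # average session time (Huang et al., 2023)
US_TV_HOURS_PER_YEAR = 300e9     # US television viewing, people aged 15+


def annual_person_hours(visits_per_month: float, hours_per_session: float) -> float:
    """Annualize monthly visits multiplied by average session length."""
    return visits_per_month * hours_per_session * 12


companion_hours = annual_person_hours(VISITS_PER_MONTH, AVG_SESSION_HOURS)
share_of_tv = companion_hours / US_TV_HOURS_PER_YEAR

print(f"Estimated person-hours per year: {companion_hours / 1e9:.1f} billion")
print(f"Relative to US television viewing: {share_of_tv:.1%}")
```

Under these assumptions, a single service already lands at roughly 4.8 billion person-hours per year, or about 1.6% of US television viewing.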

It behooves us to take this application seriously and do what we can to make it have a positive impact on users’ well-being.

References

Sonya Huang, Pat Grady, and GPT-4 (2023): “Generative AI’s Act Two.” Sequoia Capital, September 20, 2023. URL: https://www.sequoiacap.com/article/generative-ai-act-two/

Hannah R. Marriott and Valentina Pitardi (2023): “One is the loneliest number… Two can be as bad as one. The influence of AI Friendship Apps on users' well-being and addiction.” Psychology & Marketing, August 2023. DOI: https://doi.org/10.1002/mar.21899

Justine Moore, Bryan Kim, Yoko Li, and Martin Casado (2023): “It’s Not a Computer, It’s a Companion!” Andreessen Horowitz, June 2023. URL: https://a16z.com/its-not-a-computer-its-a-companion/

More on AI UX

This article is part of a more extensive series I’m writing about the user experience of modern AI tools. Suggested reading order:

AI Vastly Improves Productivity for Business Users and Reduces Skill Gaps

Ideation Is Free: AI Exhibits Strong Creativity, But AI-Human Co-Creation Is Better

AI Helps Elite Consultants: Higher Productivity & Work Quality, Narrower Skills Gap

The Articulation Barrier: Prompt-Driven AI UX Hurts Usability

UX Portfolio Reviews and Hiring Exercises in the Age of Generative AI

Navigating the Web with Text vs. GUI Browsers: AI UX Is 1992 All Over Again

UX Experts Misjudge Cost-Benefit from Broad AI Deployment Across the Economy

ChatGPT Does Almost as Well as Human UX Researchers in a Case Study of Thematic Analysis

“Prompt Engineering” Showcases Poor Usability of Current Generative AI

About the Author

Jakob Nielsen, Ph.D., is a usability pioneer with 40 years of experience in UX. He founded the discount usability movement for fast and cheap iterative design, including heuristic evaluation and the 10 usability heuristics. He formulated the eponymous Jakob’s Law of the Internet User Experience. He has been named “the king of usability” by Internet Magazine, “the guru of Web page usability” by The New York Times, and “the next best thing to a true time machine” by USA Today. Before starting NN/g, Dr. Nielsen was a Sun Microsystems Distinguished Engineer and a Member of Research Staff at Bell Communications Research, the branch of Bell Labs owned by the Regional Bell Operating Companies. He is the author of 8 books, including the best-selling Designing Web Usability: The Practice of Simplicity (published in 22 languages), Usability Engineering (26,147 citations in Google Scholar), and the pioneering Hypertext and Hypermedia. Dr. Nielsen holds 79 United States patents, mainly on making the Internet easier to use. He received the Lifetime Achievement Award for Human–Computer Interaction Practice from ACM SIGCHI.
