The Articulation Barrier: Prompt-Driven AI UX Hurts Usability

Generative AI systems like ChatGPT use prose prompts for intent-based outcomes, requiring users to be articulate in writing prose, which is a challenge for half of the population in rich countries.

Permalink for this article: https://www.uxtigers.com/post/ai-articulation-barrier

Current generative AI systems like ChatGPT employ user interfaces driven by “prompts” entered by users in prose format. This intent-based outcome specification has excellent benefits, allowing skilled users to arrive at the desired outcome much faster than if they had to manually control the computer through a myriad of tedious commands, as was required by the traditional command-based UI paradigm, which ruled ever since we abandoned batch processing.

But one major usability downside is that users must be highly articulate to write the required prose text for the prompts. According to the latest literacy research (detailed below), half of the population in rich countries like the United States and Germany is classified as low-literacy. (While the situation is better in Japan and possibly some other Asian countries, it’s much worse in middle-income countries and probably terrible in developing countries.)

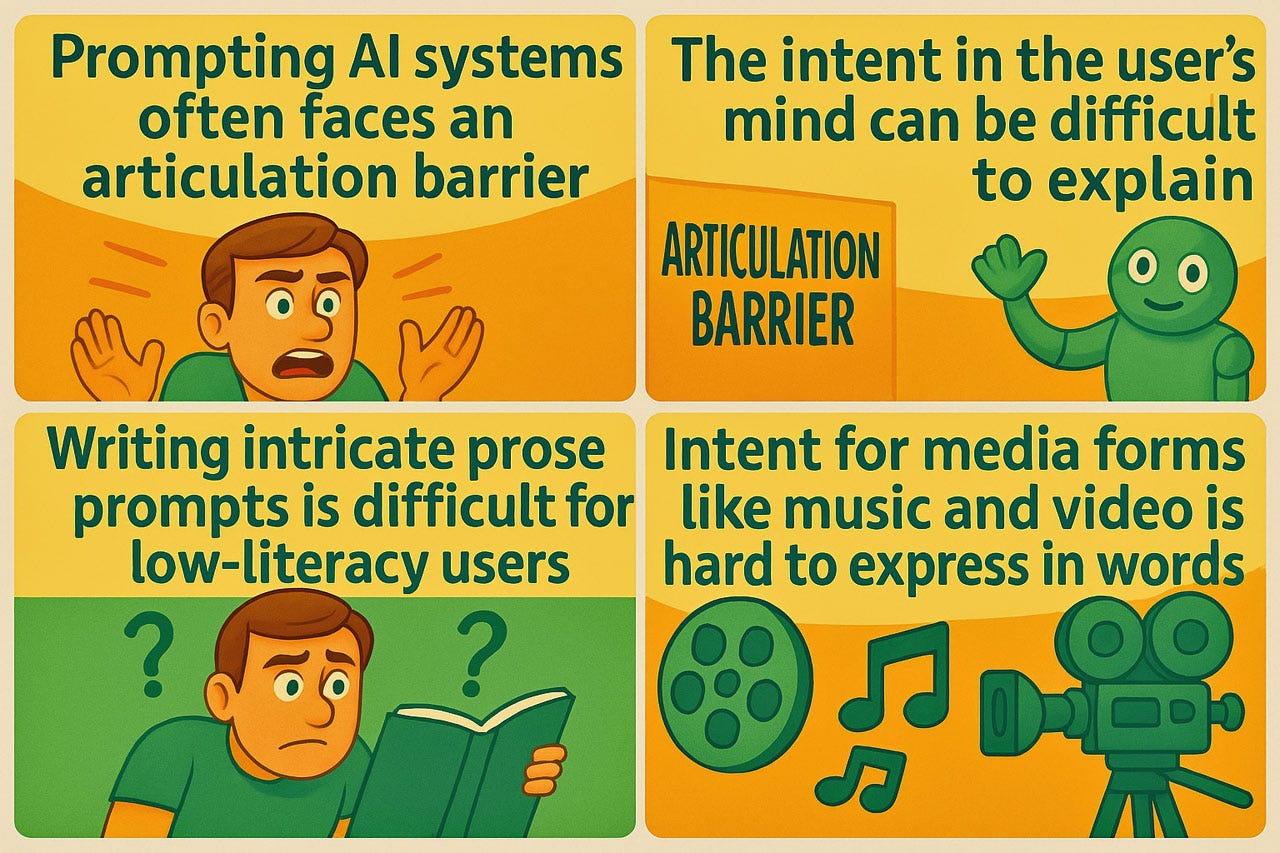

The articulation barrier in communicating user intent to AI systems. (ChatGPT)

I have been unable to find large-scale international studies of writing skills, so I rely on studies of reading skills. Generally, writing new descriptive prose is more challenging than reading and understanding prose already written by somebody else. Thus, I suspect that the proportion of low-articulation users (to coin a new and unstudied concept) is even higher than that of low-literacy users.

A small piece of empirical evidence for my thesis is the prevalence of so-called “prompt engineers” who specialize in writing the necessary text to make an AI cough up the desired outcome. That “prompt engineering” can be a job suggests that many business professionals can’t articulate their needs sufficiently well to use current AI user interfaces successfully for anything beyond the most straightforward problems.

Articulating your needs in writing is difficult, even at high literacy levels. For example, consider the head of a department in a big company who wants to automate some tedious procedures. He or she goes to the IT department and says, “I want such and such, and here are the specs.” What’s the chance that IT delivers software that actually does what this department needs? Close to nil, according to decades of experience with enterprise software development. Humans simply can’t state their needs in a specification document with any degree of accuracy. The same goes for prompts.

Articulating prompts is difficult for many users, especially because AI responses don’t provide help for better prompting. Cartoon by Dall-E.

Why is this serious usability problem yet to be discussed, considering the oceans of ink spilled on analysis during the current AI gold rush? Probably because most analyses of the new AI capabilities are written by academics or journalists, two professions that require (guess what) high literacy. Our old insight that you ≠ user isn’t widely appreciated in those lofty, not to say arrogant, circles.

I expect that in places like the United States, Northern Europe, and East Asia, less than 20% of the population is sufficiently articulate in written prose to make advanced use of prompt-driven generative AI systems. 10% is actually my maximum-likelihood estimate as long as we lack more precise data on this problem.

For sure, half the population is insufficiently articulate in writing to use ChatGPT well.

Overcoming the Articulation Barrier

How to improve AI usability? We first need detailed qualitative studies of a broad range of users with different literacy skills using ChatGPT and other AI tools to perform real business tasks. Insights from such studies will give us a better understanding of the issue than my early, coarse analysis presented here.

Second, we need to build a broader range of user interface designs informed by this user research. Of course, these designs will then require even more research. Sorry for the trite conclusion that “more research is needed,” but we are at the stage where almost all work on the new AI tools has been driven purely by technologists and not by user experience professionals.

I don’t know the solution, but that won’t stop me from speculating. My best guess is that successful AI user interfaces will be hybrid and combine elements of intent-based outcome specification and some aspects of the graphical user interface from the previous command-driven paradigm. GUIs have superior usability because they show people what can be done rather than requiring them to articulate what they want.

While I don’t think it’s great, one current design that embodies some of these ideas is the AI feature of the Grammarly writing assistant (shown below). In addition to spelling out what they want, users can click buttons for a few common needs.
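To make the hybrid idea concrete, here is a minimal Python sketch of a prompt builder that accepts a button click, a typed refinement, or both. It is only an illustration under stated assumptions: the names `QUICK_ACTIONS` and `build_prompt` and the canned instruction texts are hypothetical, and nothing here reflects how Grammarly or any other product is actually built.

```python
from typing import Optional

# Hypothetical button labels mapped to canned instructions.
# These strings are invented for illustration, not taken from any real product.
QUICK_ACTIONS = {
    "shorten": "Rewrite the following text so it is shorter, keeping the meaning.",
    "simplify": "Rewrite the following text in plain, simple language.",
    "formal": "Rewrite the following text in a more formal tone.",
}


def build_prompt(text: str, action: Optional[str] = None, extra: str = "") -> str:
    """Compose a prompt from a clicked button, an optional typed refinement, and the text."""
    parts = []
    if action is not None:
        if action not in QUICK_ACTIONS:
            raise ValueError(f"unknown action: {action!r}")
        # The button supplies the wording, so the user does not have to.
        parts.append(QUICK_ACTIONS[action])
    if extra.strip():
        # Articulate users can still add free-text instructions.
        parts.append(extra.strip())
    if not parts:
        raise ValueError("choose an action or type an instruction")
    parts.append("Text:\n" + text)
    return "\n\n".join(parts)


# A user clicks "formal" and types one short refinement.
print(build_prompt("Our Q3 numbers were, like, really good.",
                   action="formal",
                   extra="Keep it under 20 words."))
```

The point of the design is that the button path requires no writing at all, while the free-text path remains available for users who can and want to articulate more.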

Adult Literacy Research Findings: Half the Populations Are Poor Readers

The best research into the reading skills of the broad population is conducted by the OECD, a club of mostly rich countries. The latest data I have found is from the Program for the International Assessment of Adult Competencies (PIAAC) and was collected from 2012 to 2017. The project tested a little less than a quarter of a million people, though the exact number is impossible to discern due to poor usability on the various websites reporting the findings. Anyway, it was a huge study. As an aside, it’s a disgrace that it’s so hard to find and use this data we taxpayers have paid dearly to have collected and that the data is released with massive delays.

Children’s literacy is measured in a different set of research studies, the PIRLS (Progress in International Reading Literacy Study). I will not discuss this here since the skills of adult users determine the question of AI usability in business settings.

PIAAC measures literacy across each country’s entire population of people aged 16–65. For my goal of analyzing business usability, the lower end of this age range is problematic since most business professionals don’t start working until around age 22. However, PIAAC is the best we have, and most of their study participants qualify as working age.

The following chart shows the distribution of adult literacy in 20 countries, according to PIAAC. The red and orange segments represent people who can read (except for a few at the bottom of the red zone) but not very well. At level 1, readers can pick out individual pieces of information, like the telephone number to call in a job ad, but they can’t make inferences from the text. Low-level inferences become possible for readers at level 2, but the ability to construct meaning across larger chunks of text doesn’t exist for readers below level 3 (blue). Thus, level 3 is the first level to represent the ability to truly read and work with text. Levels 4 and 5 (bunched together as green) represent what we might call academic-level reading skills, requiring users to perform multi-step operations to integrate, interpret, or synthesize information from complex or lengthy continuous, noncontinuous, mixed, or multiple-type texts. These high-literacy users are also able to perform complex inferences and apply background knowledge to the interpretation of the text, something that the mainstream level 3 readers can’t do.

Level 5 (very high literacy) is not quite genius level, but almost there: in most rich countries, only 1% of the population has this level of ability to understand a complex text to the fullest.

We see from the chart that Japan is the only country in the study with truly good reading skills, and even they have a quarter of the population with low literacy. The Netherlands, New Zealand, and Scandinavia also have comparatively good scores. But most rich countries have more or less half the population in the lower-literacy range, where I suspect that people’s ability to articulate complex ideas in writing will also be low.

Since this research was run by the OECD (a club of mostly rich countries), there’s no data from poor countries. But the data from three middle-income countries (Chile, Mexico, and Türkiye) is terrible: in all three, the low-literacy part of the population accounts for more than 85%. I can only speculate, but the scores from impoverished developing countries with deficient school systems would probably be even worse.

Data collected by the OECD. Countries are sorted by the percentage of their adult population within literacy levels zero through two. “Scandinavia” is the average of Denmark, Finland, Norway, and Sweden.
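For readers who want to reproduce this kind of chart from their own copy of the data, the sorting and grouping described in the caption can be sketched in Python. This is a rough illustration, not the method actually used for the chart: the function names are hypothetical, the group average is assumed to be unweighted, the sort direction is assumed to be ascending, and the sample rows use made-up countries "A" through "D" with invented numbers, not PIAAC results.

```python
from statistics import mean

# Each row holds the share of adults (percent) in four bands:
# (levels 0-1, level 2, level 3, levels 4-5).


def merge_group(data, members, group_name):
    """Replace the member rows with one row holding the per-band unweighted average."""
    merged = {name: row for name, row in data.items() if name not in members}
    rows = [data[m] for m in members]
    merged[group_name] = tuple(mean(band) for band in zip(*rows))
    return merged


def low_literacy_share(row):
    """Percent of adults in literacy levels zero through two (the first two bands)."""
    return row[0] + row[1]


def sort_by_low_literacy(data):
    """Country names ordered from smallest to largest low-literacy share."""
    return sorted(data, key=lambda name: low_literacy_share(data[name]))


# Invented placeholder rows for fictional countries; NOT real survey data.
sample = {
    "A": (10.0, 20.0, 50.0, 20.0),
    "B": (20.0, 40.0, 30.0, 10.0),
    "C": (30.0, 30.0, 30.0, 10.0),
    "D": (10.0, 30.0, 40.0, 20.0),
}

# Combine B and C the same way the chart combines four countries into "Scandinavia".
merged = merge_group(sample, ("B", "C"), "B+C")
for name in sort_by_low_literacy(merged):
    print(name, low_literacy_share(merged[name]))
```

A population-weighted average would be a reasonable alternative for the grouped row; the caption says only "average," so the unweighted choice here is a guess.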

Infographic to Summarize This Article

Feel free to copy or reuse this infographic, provided you give this URL as the source: https://jakobnielsenphd.substack.com/p/prompt-driven-ai-ux-hurts-usability

More on AI UX

This article is part of a more extensive series I’m writing about the user experience of modern AI tools. Suggested reading order:

AI Vastly Improves Productivity for Business Users and Reduces Skill Gaps

Ideation Is Free: AI Exhibits Strong Creativity, But AI-Human Co-Creation Is Better

AI Helps Elite Consultants: Higher Productivity & Work Quality, Narrower Skills Gap

The Articulation Barrier: Prompt-Driven AI UX Hurts Usability

UX Portfolio Reviews and Hiring Exercises in the Age of Generative AI

Analyzing Qualitative User Data at Enterprise Scale With AI: The GE Case Study

Navigating the Web with Text vs. GUI Browsers: AI UX Is 1992 All Over Again

UX Experts Misjudge Cost-Benefit from Broad AI Deployment Across the Economy

ChatGPT Does Almost as Well as Human UX Researchers in a Case Study of Thematic Analysis

“Prompt Engineering” Showcases Poor Usability of Current Generative AI

About the Author

Jakob Nielsen, Ph.D., is a usability pioneer with 40 years of experience in UX and the Founder of UX Tigers. He founded the discount usability movement for fast and cheap iterative design, including heuristic evaluation and the 10 usability heuristics. He formulated the eponymous Jakob’s Law of the Internet User Experience. He has been named “the king of usability” by Internet Magazine, “the guru of Web page usability” by The New York Times, and “the next best thing to a true time machine” by USA Today. Previously, Dr. Nielsen was a Sun Microsystems Distinguished Engineer and a Member of Research Staff at Bell Communications Research, the branch of Bell Labs owned by the Regional Bell Operating Companies. He is the author of 8 books, including the best-selling Designing Web Usability: The Practice of Simplicity (published in 22 languages), Usability Engineering (26,415 citations in Google Scholar), and the pioneering Hypertext and Hypermedia (published two years before the Web launched). Dr. Nielsen holds 79 United States patents, mainly on making the Internet easier to use. He received the Lifetime Achievement Award for Human–Computer Interaction Practice from ACM SIGCHI.
